
December 13

Our last class: students had tears. It was sad, sort of. Maybe.

I restated the Schwarz Lemma, declaring again that the proof was easy but also not easy (this doesn't really help, but it does reflect my feelings about the proof). I remarked that the Schwarz Lemma allowed us to solve a rather general extreme value problem:

If U and V are open subsets of the complex plane, and if a (respectively, b) is a fixed point in U (respectively, V), we could wonder how much a holomorphic mapping from U to V taking a to b distorts Euclidean geometry. That is, if f:U-->V is holomorphic, with f(a)=b, then f "respects" conformal geometry (at least where f' is not 0). But what about the usual distance? If z is in U, what is the sup of |f(z)-b|? Or, if we are interested in infinitesimal distance, how large can |f'(a)| be? And what sort of functions, if any, actually achieve these sups?

The general scheme is to try to find biholomorphic mappings from D(0,1) to U and to V, taking 0 to a (respectively, b). Composing with these mappings changes the U, V and a, b problem into one satisfying the hypotheses of the Schwarz Lemma. The composition then gives bounds on both |f(z)-b| and |f'(a)|. The functions which achieve these bounds are those which come from rotations. Problems 6 and 7 of the recent homework assignment illustrate this method.
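A minimal worked instance of this scheme, assuming for illustration only that U=D(0,R), V=D(0,S) and a=b=0, so that the biholomorphic maps from D(0,1) are just the dilations by R and by S:

```latex
\begin{align*}
  g(\zeta) &= \frac{f(R\zeta)}{S}, \qquad g : D(0,1) \to D(0,1), \quad g(0) = 0,\\
  |g'(0)| = \frac{R}{S}\,|f'(0)| \le 1 &\;\Longrightarrow\; |f'(0)| \le \frac{S}{R},\\
  |g(\zeta)| \le |\zeta| \ \text{ with } \zeta = z/R &\;\Longrightarrow\; |f(z)| \le \frac{S}{R}\,|z| \quad (|z| < R),\\
  \text{equality in either bound} &\iff g(\zeta) = e^{i\theta}\zeta \iff f(z) = e^{i\theta}\,\frac{S}{R}\,z .
\end{align*}
```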

I remarked that the family of mappings, defined for each a in D(0,1), sending $z\mapsto\frac{z-a}{1-\bar{a}z}$, has already been introduced in this course. These are linear fractional transformations which send D(0,1) onto itself and send a to 0. Thus the group of holomorphic automorphisms of D(0,1) is transitive. We can describe all automorphisms once we understand the stabilizer of a point. I choose to "understand" those automorphisms f of D(0,1) which fix 0. By the Schwarz Lemma, these maps have |f'(0)|<=1. But the inverse of f is also an automorphism fixing 0, and its derivative at 0 is 1/f'(0), so the Schwarz Lemma also gives 1<=|f'(0)|. So |f'(0)|=1, and therefore $f(z)=e^{i\theta}z$, a rotation, again using the Schwarz Lemma. So we now know that all holomorphic automorphisms of the unit disc are $e^{i\theta}\frac{z-a}{1-\bar{a}z}$, with theta real and a in D(0,1). Using this we can answer questions such as: what is the orbit of z0, a non-zero element of D(0,1), under the stabilizer of 0? (It is the circle centered at 0 through z0.) I also mentioned an interesting geometric duality: D(0,1) is biholomorphic to H, the open upper half-plane of C. In D(0,1) the stabilizer of 0 is a subgroup of the biholomorphic automorphisms which is easy to describe: it is just the rotations, as we have seen. The transitive part of these mappings is not too easy to understand. In H, the transitive aspect of the automorphisms is nice. I can multiply by positive real numbers, and I can translate by reals. A combination of both of these can move i to any point of H: z-->yz+x (with y>0) sends i to x+iy. This double picture appears in many other situations.
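A small numerical sketch of these facts in standard-library Python; the helper names `disc_automorphism` and `upper_half_plane_move` are hypothetical, not from the notes. The first builds $e^{i\theta}\frac{z-a}{1-\bar{a}z}$ so we can check at sample points that it sends a to 0 and the unit circle to itself; the second is the H-side map z-->yz+x that moves i to x+iy.

```python
import cmath


def disc_automorphism(a: complex, theta: float = 0.0):
    """Hypothetical helper: z -> e^{i theta} (z - a) / (1 - conj(a) z), for |a| < 1."""
    if abs(a) >= 1:
        raise ValueError("a must lie in the open unit disc D(0,1)")
    rotation = cmath.exp(1j * theta)
    a_bar = complex(a).conjugate()

    def phi(z: complex) -> complex:
        return rotation * (z - a) / (1 - a_bar * z)

    return phi


def upper_half_plane_move(x: float, y: float):
    """Hypothetical helper: z -> y z + x with y > 0 (a dilation, then a real
    translation); it maps H onto H and sends i to x + iy."""
    if y <= 0:
        raise ValueError("y must be positive")
    return lambda z: y * z + x
```

Rotations are the case a=0: `disc_automorphism(0, theta)` moves z0 around the circle of radius |z0|, the orbit of z0 under the stabilizer of 0. Also `disc_automorphism(-a)` undoes `disc_automorphism(a)`.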

| Set | The group of holomorphic automorphisms |
|---|---|
| D(0,1), the unit disc | Linear fractional transformations of the form $e^{i\theta}\frac{z-a}{1-\bar{a}z}$, with theta real and a in D(0,1) |
| C, the whole complex plane | Affine maps: z-->az+b where a and b are complex, and a is non-zero |
| CP1, the whole Riemann sphere | All linear fractional transformations |

How do we prove the result for C? Well, if f is a holomorphic automorphism of C, then f(1/z) has an isolated singularity at 0 (this is the same as looking at the isolated singularity of f(z) at infinity, of course). Is the singularity removable? If it is, then f(z)-->w for some w as z-->infinity. But that w is already in the range of f, so there is q with f(q)=w, and (open mapping theorem) f maps a neighborhood of q onto a neighborhood of w. Pursuit of this alternative shows that f cannot be 1-to-1, which is unacceptable. If f(1/z) has an essential singularity, then Casorati-Weierstrass shows also that f itself cannot be 1-to-1. Thus f(z) must have a pole at infinity, so f is a polynomial. This pole should have order 1 (that is, f should have degree 1) or again we get a contradiction.

The result for CP1 uses the result for C. The CP1 automorphism group certainly includes the linear fractional transformations. This group is (at least) transitive, so we can ask what the stabilizer subgroup of infinity is: such an automorphism restricts to an automorphism of C, so it is z-->az+b. And moving a point to infinity gives us all of LF(C).
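One concrete way to see the group structure of the linear fractional transformations is to record z-->(az+b)/(cz+d) by its coefficient matrix, since composition then corresponds to matrix multiplication (the matrix is only determined up to a non-zero scalar multiple). The sketch below assumes that representation; the names `compose`, `apply` and `fixes_infinity` are hypothetical. A map fixes infinity exactly when c=0, which recovers the affine maps az+b as the stabilizer of infinity.

```python
from typing import Optional, Tuple

# Hypothetical representation: (a, b, c, d) with ad - bc != 0 stands for z -> (az + b)/(cz + d).
Mobius = Tuple[complex, complex, complex, complex]


def compose(m1: Mobius, m2: Mobius) -> Mobius:
    """Coefficients of z -> m1(m2(z)): the 2x2 matrix product m1 * m2."""
    a1, b1, c1, d1 = m1
    a2, b2, c2, d2 = m2
    return (a1 * a2 + b1 * c2, a1 * b2 + b1 * d2,
            c1 * a2 + d1 * c2, c1 * b2 + d1 * d2)


def apply(m: Mobius, z: Optional[complex]) -> Optional[complex]:
    """Evaluate m on the Riemann sphere; None stands for the point at infinity."""
    a, b, c, d = m
    if z is None:                 # the image of infinity is a/c (or infinity if c == 0)
        return None if c == 0 else a / c
    denominator = c * z + d
    if denominator == 0:          # z = -d/c is sent to infinity
        return None
    return (a * z + b) / denominator


def fixes_infinity(m: Mobius) -> bool:
    """True exactly when c == 0, i.e. m is the affine map z -> (a/d) z + b/d."""
    return apply(m, None) is None
```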

Knowing the automorphism groups is really nice because of the following major result, which includes the Riemann Mapping Theorem:

The classical uniformization theorem

Any connected simply connected Riemann surface is biholomorphic with D(0,1) or C or CP1.

This result is difficult to prove. The Riemann Mapping Theorem asserts that a non-empty simply connected open proper subset of C is biholomorphic with D(0,1). The uniformization theorem implies that the universal covering surface of any (connected) Riemann surface is one of D(0,1) or C or CP1, and therefore we can study "all" Riemann surfaces by looking at groups of automorphisms of these three surfaces (each such group is isomorphic to the fundamental group of the surface being studied). For more information about this please take the course Professor Ferry will offer next semester.

I then discussed and proved a version of the Schwarz Reflection Principle. I showed how this could be used to analyze the automorphisms of an annulus, and how it could be used to see when two annuli were biholomorphic. I will write more about this when I don't need to rush off and give a final exam. Finally, Ms. Zhang applied the Schwarz Reflection Principle to verify a famous result of Hans Lewy: there is a very simple linear partial differential equation in three variables which, for suitable smooth right-hand sides, has no solution. For further information about this, please follow the link to the last lecture here.

December 8

It was a rapid day in Math 503, as the instructor tried desperately to teach everything he should long ago have discussed.

First, I wanted to simplify the argument principle so that I could use it more easily. I will make the following assumptions, which I will call S ("S" for "simplifying assumptions"):

If I knew (officially) ...

If we accepted the Jordan curve theorem, then the following facts could be used: If C is a simple closed curve in the plane (such a curve is a homeomorphic image of the circle, S1) then the complement of C in the plane has two components. One component is unbounded. If z0 is in that component, then n(C,z0)=0. Moreover, there is a w, equal to either +1 or -1, so that n(C,z0)=w for all z0's in the other (bounded) component (the same w for all z0's in this other component). w=+1 if C goes around its inside the "correct" (counterclockwise) way, and w=-1 if it is reversed.

Certainly this would simplify S. In fact, in most applications the curve is a circle or the boundary of a rectangular region, and the topological part of S is easy to check.

Theorem Assume S. Then

$$\frac{1}{2\pi i}\int_C \frac{f'(z)}{f(z)}\,dz = \text{the number of zeros of } f \text{ which lie inside } C,$$

where the zeros are counted with multiplicity.
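A numerical sketch of this theorem, assuming C is a circle (which satisfies S) and using the trapezoidal rule, which is very accurate for smooth periodic integrands. The helper name `count_zeros_in_circle` and the test polynomial are illustrative choices, not from the notes.

```python
import cmath
import math


def count_zeros_in_circle(f, df, center=0j, radius=1.0, n=4096):
    """Hypothetical helper: approximate (1/(2 pi i)) * integral of f'(z)/f(z) dz over
    |z - center| = radius (counterclockwise) with the trapezoidal rule, then round.
    Assumes f is holomorphic near the closed disc and has no zeros on (or very near)
    the circle."""
    total = 0j
    for k in range(n):
        e = cmath.exp(2j * math.pi * k / n)
        z = center + radius * e
        dz_dtheta = 1j * radius * e
        total += df(z) / f(z) * dz_dtheta
    value = total * (2 * math.pi / n) / (2j * math.pi)
    return round(value.real), value
```

For example, with f(z)=(z-0.5)^2(z-3) the count is 2 on |z|=1 (the double zero at 0.5) and 3 on |z|=4; the imaginary part of `value` should be essentially 0.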


This result uses S to eliminate the winding numbers in the statement of the argument principle, of course. I should mention here, in correct order, another consequence of the proof of the argument principle.

Suppose g(z) is also holomorphic in U. What do I know about the possible singularities of g(z)[f'(z)/f(z)]? Remember that f'(z)/f(z) has a singularity only at a point z0 which is a zero of f(z), and there it looks locally like k/(z-z0)+holomorphic, where k is the multiplicity of the zero. This is a simple pole with residue k. Now multiplying by g(z) doesn't create more singularities. It merely adjusts those of f'(z)/f(z). If g(z) has a 0 at z0 then g(z)[f'(z)/f(z)] is holomorphic at z0. Otherwise, g(z)[f'(z)/f(z)] still has a simple pole at z0 with residue kg(z0). Therefore the Residue Theorem applies:

Theorem Assume S and that g(z) is holomorphic in U. Then

$$\frac{1}{2\pi i}\int_C g(z)\,\frac{f'(z)}{f(z)}\,dz = \sum g(z),$$

where the sum is over the zeros z of f inside C, counted with multiplicity.


Notice that if z0 is a 0 of f inside C and g(z0)=0, then z0 contributes 0 to both sides of this formula: to the integral (left) and to the sum (right).

I have most often seen this used when $g(z)=z^k$ with k a positive integer, and C some "large" curve enclosing all of the zeros of f. The result is then the sum of the kth powers of the roots of f, which can be useful and interesting. For example, when one is considering symmetric functions of the roots, these sums are important.
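The same quadrature gives a numerical check of the case $g(z)=z^k$: on a circle enclosing all the roots, the contour integral should reproduce the power sums of the roots. The helper `contour_power_sum` and the sample cubic with roots 1, -2 and i/2 are hypothetical choices for this sketch.

```python
import cmath
import math


def contour_power_sum(f, df, k, radius, n=4096):
    """Hypothetical helper: (1/(2 pi i)) * integral over |z| = radius of
    z**k * f'(z)/f(z) dz by the trapezoidal rule.  With z = radius * e^{i theta}
    we have dz = i z dtheta, so the integral is the average of z**(k+1) * f'(z)/f(z)."""
    total = 0j
    for j in range(n):
        z = radius * cmath.exp(2j * math.pi * j / n)
        total += z ** (k + 1) * df(z) / f(z)
    return total / n
```

For the cubic (z-1)(z+2)(z-i/2) and radius 3, k=2 should give 1+4-1/4=4.75.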

Rouché's Theorem Assume S. Suppose additionally that g(z) is holomorphic in U, and that for z on C, |g(z)|<|f(z)|. Then the number of zeros of f inside C is the same as the number of zeros of f+g inside C.

Proof: Suppose t is a real number in the interval [0,1]. Then f(z)+tg(z) has the following property: if z is on C,

|f(z)+tg(z)|>=|f(z)|-|tg(z)|=|f(z)|-t|g(z)|>=|f(z)|-|g(z)|>0.

Therefore f(z)+tg(z) is never 0 for z on C (in particular, neither f nor f+g has roots on C), and the function (t,z)-->f(z)+tg(z) is jointly continuous on [0,1]xU, holomorphic in the second variable, and never 0 when the second variable is on C. Thus the integral

$$\frac{1}{2\pi i}\int_C \frac{f'(z)+tg'(z)}{f(z)+tg(z)}\,dz$$

is a continuous function of t. But for each t, by the argument principle, this is an integer. A continuous integer-valued function on [0,1] (connected!) is constant. Comparing t=0 (which counts the roots of f) and t=1 (which counts the roots of f+g), we get the result.


Comment The instructor tried to describe an extended metaphor: this resembled walking a dog around a lamppost with the length of the leash between the dog and person less than the distance of the person to the lamppost. Students were wildly enthusiastic about this metaphor. (There may be a sign error in that sentence.) The metaphor is discussed here and here and here. The pictures are wonderful. You can print out postscript of the whole "lecture" by going here and printing out the "first lecture".

Berkeley example #1

Consider the polynomial $z^5+z^3+5z^2+2$. The question is: how many roots does this polynomial have in the annulus defined by 1<|z|<2? I remarked that we would apply Rouché's Theorem to |z|=1 and to |z|=2. The difficulty might be in deciding which part of the polynomial is f(z), "BIG", and which is g(z), "LITTLE".

C is |z|=1 Here let $f(z)=5z^2$. Then |f(z)|=5 on C, and

$$|g(z)|=|z^5+z^3+2|\le|z|^5+|z|^3+2=4$$

on C. Since 4<5, and f(z) has 2 zeros inside C (at 0, multiplicity 2), we know that f(z)+g(z), our polynomial, has 2 zeros inside C.

C is |z|=2 Here let $f(z)=z^5$. Then |f(z)|=32 on C, and

$$|g(z)|=|z^3+5z^2+2|\le|z|^3+5|z|^2+2=8+20+2=30$$

on C. Since 30<32, and f(z) has 5 zeros inside C (at 0, multiplicity 5), we know that f(z)+g(z), our polynomial, has 5 zeros inside C.

Therefore the polynomial has 3=5-2 zeros in the annulus.
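A numerical sketch of both steps, using the interpretation of the argument principle as the net change of arg along the circle. The helpers `winding_count` and `homotopy_counts` are hypothetical; following f+tg for several values of t also illustrates the homotopy used in the proof of Rouché's Theorem, where the count stays constant in t.

```python
import cmath
import math


def winding_count(h, radius, n=20000):
    """Hypothetical helper: net number of turns of h(z) about 0 as z runs once
    counterclockwise around |z| = radius, from summed arg increments.  Assumes h is
    never 0 on the circle and n is large enough that each increment is below pi."""
    turns = 0.0
    prev = h(complex(radius, 0.0))
    for j in range(1, n + 1):
        cur = h(radius * cmath.exp(2j * math.pi * j / n))
        turns += cmath.phase(cur / prev)
        prev = cur
    return round(turns / (2 * math.pi))


def homotopy_counts(f, g, radius, ts=(0.0, 0.25, 0.5, 0.75, 1.0)):
    """Zero counts of f + t g inside |z| = radius for several t in [0, 1]."""
    return [winding_count(lambda z: f(z) + t * g(z), radius) for t in ts]


# Berkeley example #1: z^5 + z^3 + 5z^2 + 2 split as BIG + LITTLE on each circle.
inner = homotopy_counts(lambda z: 5 * z**2, lambda z: z**5 + z**3 + 2, 1.0)
outer = homotopy_counts(lambda z: z**5, lambda z: z**3 + 5 * z**2 + 2, 2.0)
annulus = outer[-1] - inner[-1]
print(inner, outer, annulus)  # expected: [2, 2, 2, 2, 2] [5, 5, 5, 5, 5] 3
```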

Comments First, as we already noted, the Rouché hypothesis |g(z)|<|f(z)| does prevent any zeros from sitting "on" C. That's nice. A more interesting observation is that this verification of the crucial hypothesis "|g(z)|<|f(z)| for all z on C" was actually implied by a much stronger statement: that the sup of |g(z)| on C was less than the inf of |f(z)| on C. In many simple examples this stronger statement is easier to prove than the pointwise statement. But those who are wise enough to do analysis may well meet situations where the pointwise estimate is true while the uniform inequality fails.

Berkeley example #2

Consider $3z^{100}+e^z$. How many zeros does this function have inside the unit circle? Here on C, |z|=1. Thus $|3z^{100}|=3$ on C, and $|e^z|=e^{\mathrm{Re}\,z}\le e$ on C. Since e<3, the hypotheses of Rouché's Theorem are satisfied (with BIG=$3z^{100}$ and LITTLE=$e^z$). Since $3z^{100}$ has a zero at 0 with multiplicity 100, Rouché's Theorem tells us that $3z^{100}+e^z$ has 100 zeros inside the unit disc.

The Berkeley problem went on to ask: are these zeros simple? For those who care to count, this is asking for the cardinality of the set of solutions to $3z^{100}+e^z=0$ inside the unit disc. A zero is not simple if it is a zero of both the function and the derivative of the function. So we should consider the system of equations:

$$3z^{100}+e^z=0$$
$$300z^{99}+e^z=0$$

Subtracting gives $3z^{100}-300z^{99}=3z^{99}(z-100)=0$, so any common solution must be z=0 or z=100. 100 is outside the unit disc. And notice that z=0 is not a solution of the system (of either equation, and it should be a solution of both!). Thus $3z^{100}+e^z=0$ has 100 simple solutions (each has multiplicity 1) inside the unit disc!
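A numerical cross-check of the count, again measuring the net change of arg along |z|=1; the helper name is hypothetical. Since $|3z^{100}+e^z|\ge 3-e>0.28$ on the circle while the derivative is at most about 303 there, 20000 sample points keep every arg increment well below Pi, so the summed increments give the winding number exactly after rounding.

```python
import cmath
import math


def winding_count(h, radius=1.0, n=20000):
    """Hypothetical helper: (1/(2 pi)) * net change of arg h(z) as z runs once around
    |z| = radius; by the argument principle this counts the zeros inside (h holomorphic,
    h never 0 on the circle, n large enough that each arg increment is below pi)."""
    turns = 0.0
    prev = h(complex(radius, 0.0))
    for j in range(1, n + 1):
        cur = h(radius * cmath.exp(2j * math.pi * j / n))
        turns += cmath.phase(cur / prev)
        prev = cur
    return round(turns / (2 * math.pi))


def berkeley_2(z: complex) -> complex:
    return 3 * z**100 + cmath.exp(z)


print(winding_count(berkeley_2))  # expected: 100
```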

I tried to explore how roots of a polynomial would change when the polynomial is perturbed. If p(z) is a polynomial of degree n (n>0) then we know (Fundamental Theorem of Algebra) that p(z) has n roots, counted with multiplicity. Now look at the picture. The smallest dots represent roots of multiplicity 1, the next size up, multiplicity 2, and the largest dot, multiplicity 3. (I guess, computing rapidly, that n=11 to generate this picture.) Now suppose that q(z) is another polynomial of degree at most n, and q(z) is very small in some sense. The only sense I won't allow is to have q(z) uniformly small in modulus in all of C (because then [Liouville] q(z) would be constant).

[Picture: the roots of p(z); dot size indicates multiplicity 1, 2, or 3.]

Where are the roots of p(z)+q(z)? In fact, what seems to happen is that when q(z) is very small, the roots don't wander too far away from the roots of p(z). Things can be complicated. The roots of multiplicity 2 could possibly "split" (one does, in this diagram) or the root of multiplicity 3 could split completely. How can we prove that this sort of thing happens?

Well, suppose we surround the distinct roots of p(z) by small disjoint circles. Since all the roots of p(z) are inside the circles, |p(z)| is non-zero on these circles, and (finite number of circles, compactness!) inf|p(z)| when z is on any of these circles is some positive number, m. Now let q(z) be small. I mean explicitly let's adjust the coefficients of q(z) so that |q(z)| has sup on the set of circles less than m. Then the critical hypothesis of Rouché's Theorem is satisfied on each circle, and indeed each circle contains exactly as many roots of p(z)+q(z) as of p(z), counted with multiplicity, exactly as drawn.
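A numerical sketch of this argument with a hypothetical p of degree 6 (roots 0 of multiplicity 3, 1 of multiplicity 2, and -1 simple) and a hypothetical small perturbation q; these choices and the helper `roots_inside` are ours, not the notes'. The sampled inf of |p| and sup of |q| on the circles stand in for the exact values, so this illustrates rather than proves the hypothesis sup|q| < m.

```python
import cmath
import math


def roots_inside(h, center, radius, n=4000):
    """Hypothetical helper: zeros of h inside |z - center| = radius (with multiplicity),
    from the net change of arg h along the circle.  Assumes h != 0 on the circle."""
    turns = 0.0
    prev = h(center + radius)
    for j in range(1, n + 1):
        cur = h(center + radius * cmath.exp(2j * math.pi * j / n))
        turns += cmath.phase(cur / prev)
        prev = cur
    return round(turns / (2 * math.pi))


def p(z):
    # Hypothetical p: roots 0 (multiplicity 3), 1 (multiplicity 2), -1 (simple).
    return z**3 * (z - 1) ** 2 * (z + 1)


def q(z):
    # Hypothetical small perturbation of degree <= 6.
    return 1e-4 * sum(z**k for k in range(7))


circles = [(0j, 0.3), (1 + 0j, 0.3), (-1 + 0j, 0.3)]
samples = [c + r * cmath.exp(2j * math.pi * j / 500) for c, r in circles for j in range(500)]
m = min(abs(p(z)) for z in samples)       # sampled stand-in for inf |p| on the circles
sup_q = max(abs(q(z)) for z in samples)   # sampled stand-in for sup |q| on the circles
counts = [roots_inside(lambda z: p(z) + q(z), c, r) for c, r in circles]
print(sup_q < m, counts)  # expected: True [3, 2, 1]
```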

Certainly one can make this more precise. But I won't because I am in such a hurry. But I will remark on this: if we consider the mapping from $C^{n+1}$ to polynomials of degree<=n (an (n+1)-tuple is mapped to the coefficients), then for a dense open subset of $C^{n+1}$, the polynomial has n simple roots (consider the resultant of p and its derivative). On this dense open set, it can be proved that the roots are, locally, complex analytic functions of the coefficients. An appropriate version of the Inverse Function Theorem proves this result; it is not difficult, but it isn't part of this rapidly vanishing course.

I then stated

Hurwitz's Theorem Suppose U is a connected open subset of C, and {fn} is a sequence of holomorphic functions converging to the function f uniformly on compact subsets of U. If each of the fn's is 1-to-1, then ...

STOP STOP STOP!!!

Look at some examples. If U is the unit disc, consider fn(z)=(1/n)z. Then these fn's do converge u.c.c. to the function f(z)=0 (for all z). Hmmmm ... But if fn(z)=z+(1/n) in the unit disc, these fn's converge u.c.c. to z. These two behaviors are the only possible alternatives. O.k., let's go back to the formality of the math course:

Hurwitz's Theorem Suppose U is a connected open subset of C, and {fn} is a sequence of holomorphic functions converging to the function f uniformly on compact subsets of U. If each of the fn's is 1-to-1, then either f is 1-to-1 or f is constant.

Comment In other words, either the darn limit function is quite nice, or the limit function collapses entirely. In practice, it is usually not that hard to decide which alternative occurs.

Proof Well, suppose f is not constant. I'll try to show that f is 1-to-1. Suppose instead that f(z1)=f(z2)=w, where the zj's are distinct. Since f is not constant and U is connected, the set $f^{-1}(w)$ is discrete in U (discreteness!). So I can surround z1 and z2 by small disjoint closed discs in U whose boundary circles do not meet $f^{-1}(w)$. On each circle, |f(z)-w| has a positive inf (the circles are compact), and f(z)-w has at least one root inside each circle (at z1 or z2). Since fn-f converges to 0 uniformly on the circles, for n very large |fn(z)-f(z)| is smaller than that inf on both circles, so Rouché's Theorem (with f-w as BIG and fn-f as LITTLE) shows that fn(z)-w has a root inside each circle. So fn takes the value w twice. But this conclusion contradicts the hypothesis that fn is 1-to-1. So ... we are done.

For example, Hurwitz's Theorem plays an important role in many proofs of the Riemann Mapping Theorem, where the candidate for the "Riemann mapping" is a limit of some sequence of functions, and then (because, say, the limit candidate has derivative not 0 at a point, so it is not constant) the limit must be 1-to-1. But we have no time for this. (A famous mathematical quote [or pseudoquote, because it is exaggerated] is "I have not time; I have not time" ... here is a good reference.)

What follows is a remarkable result which I should have presented when I discussed the Maximum Modulus Principle.

Schwarz Lemma Suppose f is holomorphic and maps D(0,1) to D(0,1). Also suppose f(0)=0. Then |f'(0)|<=1, and for all z in D(0,1), |f(z)|<=|z|.

If also either |f'(0)|=1 or there is a non-zero z0 in D(0,1) so that |f(z0)|=|z0|, then f is a rotation: there is a real theta so that $f(z)=e^{i\theta}z$ for all z in D(0,1).

Proof The proof has several notable tricks which I am sure I could not invent. First, "consider" g(z)=f(z)/z. This is fine away from 0. But, actually, since f(0) is assumed to be 0, g has a removable singularity at 0. Hey! Wow!! What should g(0) be? The limit of f(z)/z as z-->0 (remembering that f(0)=0!) is actually f'(0). So I will remove g's singularity at 0 by defining g(0) to be f'(0). O.k., and now we "work":

First notice that since g is holomorphic, by Max Mod, the sup of |g| on the set where |z|<=s occurs where |z|=s. So if |z|<=r and r<s<1, then |g(z)|<=|g(w)| for some w with |w|=s, and |g(w)|=|f(w)|/|w|<=1/s (because |f(w)|<1). The numbers 1/s for r<s<1 have greatest lower bound 1. Therefore |g(z)|<=1 for all z in D(0,1). This establishes the claim that |f'(0)|<=1, and for all z in D(0,1), |f(z)|<=|z|.

If either of these inequalities is an equality, then |g| attains its maximum value 1 at a point of D(0,1) (at 0 or at z0), so by Max Mod, the g function must be a constant (surely a constant with modulus equal to 1). Since g is a constant with modulus 1, f must be z multiplied by a constant of modulus 1, and this is a rotation.
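A quick numerical sanity check of the conclusion |f(z)|<=|z|, using a hypothetical test map of our own choosing, f(z)=z(z-a)/(1-conj(a)z) with |a|<1, which maps D(0,1) into itself and fixes 0. Random sampling only illustrates the inequality; it is not a proof. For this map f'(0)=-a, so |f'(0)|=|a|<1, while a rotation attains equality.

```python
import cmath
import math
import random


def blaschke_test_map(a: complex):
    """Hypothetical test map f(z) = z (z - a) / (1 - conj(a) z) with |a| < 1:
    holomorphic on D(0,1), maps D(0,1) into D(0,1), f(0) = 0, and f'(0) = -a."""
    a_bar = complex(a).conjugate()
    return lambda z: z * (z - a) / (1 - a_bar * z)


def satisfies_schwarz_bound(f, trials=10000, seed=0, tol=1e-12):
    """Check |f(z)| <= |z| at random points of D(0,1) (sampled, not a proof)."""
    rng = random.Random(seed)
    for _ in range(trials):
        r = 0.999 * math.sqrt(rng.random())   # radius, roughly uniform over the disc
        z = r * cmath.exp(2j * math.pi * rng.random())
        if abs(f(z)) > abs(z) + tol:
            return False
    return True
```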

That's the story. But I should remark that this proof, very short, very simple, seems (to me!) to be also very subtle. I have read the proof and read the proof and ... I was a coauthor on a paper which proved a version of Schwarz's lemma for Hilbert space, and used the lemma to verify some neat things, and I still am not entirely confident I understand the proof. Oh well. Next time I hope to give some very simple consequences.

I claimed that this proof was correct, or at least more correct than the one I offered in class. Mr. Matchett read it and found two misprints. Oh well.

December 6

Mr. Peters kindly discussed a wonderful sum of Gauss which can be evaluated using residues. Mr. Trainor kindly discussed a method using the Residue Theorem which can evaluate exactly (in terms of "familiar" constants) such sums as the sums of the reciprocals of the even integer powers. Here are fourteen proofs of the Pi^2/6 fact, written by Robin Chapman.

I first advertised Rouché's Theorem, which I asserted allows us to hope that roots don't wander too far when their parent polynomials change a bit. This would be proved from the Argument Principle.

Suppose f is meromorphic in an open subset U of C. I showed that for z0 in U, near z0 we can write f(z)=(z-z0)^k h(z), where k is an integer and h(z) is a non-zero holomorphic function near z0, so that f'(z)/f(z)=k/(z-z0)+h'(z)/h(z). Using this I was able to state a version of the argument principle, having to do with the line integral of f'(z)/f(z) in U over a closed curve which did not pass through any poles or zeros of f and which was nullhomotopic in U. This integral, divided by 2Pi i, can also be interpreted as the net change of arg(f(z)), divided by 2Pi, as z travels around the closed curve: the net number of times the curve's image wraps around the origin. This is, I asserted, the beginning of degree theory.

I will apply this to prove Rouché's Theorem next time, at which time I also hope to announce the real time of the final exam. Sigh.

Final exam

We discussed the Final Exam some more. This exam will be approximately as long and difficult as the midterm. The method of the exam will be the same. The exam will be given, I hope, on Friday, December 17, at 1:10, in SEC 220.

December 1

I did the last two integrals. I found the residue of something with a double pole. And I completely correctly computed the last integral, a result of Euler's. I used an interesting contour. I tried to do the problem as I imagine a good engineering student might do it (a really good student would use Maple or Mathematica!). As I mentioned in class, the full rigorous details of this integral are shown on three pages of Conway's text.

I just tried, very briefly, to do the last integral as I imagined Euler might have done, the way a physics person might (ignoring all that stuff about limits, integrals, differentiation, etc.). So I set

$$F(c)=\int_0^\infty \frac{x^{-c}}{1+x}\,dx=\frac{\pi}{\sin(\pi c)}\qquad(0<c<1)$$

and then I computed F(1/2) (this was easy to do explicitly using elementary calculus). Then I tried to compute F'(c). My desire was to get an ordinary differential equation for F. I was unable to do this. Maybe someone can help me. Maybe I should instead have looked for some other kind of equation for F(c).
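A numerical check of Euler's formula, not a derivation: substituting x=e^u turns F(c) into the integral over the real line of e^{(1-c)u}/(1+e^u), whose integrand decays exponentially in both directions, so a truncated trapezoidal rule is very accurate. The function name `euler_F` and the truncation parameters are assumptions of this sketch.

```python
import math


def euler_F(c: float, half_width: float = 200.0, h: float = 0.05) -> float:
    """Approximate F(c) = integral_0^inf x^(-c)/(1+x) dx for 0 < c < 1.
    With x = e^u the integral becomes integral over R of e^((1-c)u)/(1+e^u) du,
    which is approximated by the trapezoidal rule on [-half_width, half_width]."""
    if not 0.0 < c < 1.0:
        raise ValueError("the integral converges only for 0 < c < 1")

    def integrand(u: float) -> float:
        # Two algebraically equal forms, chosen so that exp never overflows.
        if u >= 0:
            return math.exp(-c * u) / (1.0 + math.exp(-u))
        return math.exp((1.0 - c) * u) / (1.0 + math.exp(u))

    n = max(1, round(2 * half_width / h))
    step = 2 * half_width / n
    total = 0.5 * (integrand(-half_width) + integrand(half_width))
    for k in range(1, n):
        total += integrand(-half_width + k * step)
    return total * step
```

For instance, `euler_F(0.5)` should agree with Pi, the value of F(1/2) computed by elementary calculus, and `euler_F(0.3)` with Pi/sin(0.3 Pi).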


I derived yet another version of the Cauchy Integral Formula (the final version for this course), assuming that C is a closed curve in an open set U which is nullhomotopic in U, f is holomorphic in U, and z is in U but not on C. Then

$$\int_C \frac{f(w)}{w-z}\,dw=2\pi i\,n(C,z)f(z).$$

This is a direct consequence of the Residue Theorem, recognizing that the function f(w)/(w-z) (a function of w) is holomorphic in U\{z}, and has a simple pole at z with residue equal to f(z).
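A numerical sketch showing the winding number at work: a circle traversed twice should give twice f(z) for z inside and 0 for z outside. We take U=C and f=exp (entire, so every closed curve is nullhomotopic); the helper name `cauchy_formula_lhs` and these choices are hypothetical.

```python
import cmath
import math


def cauchy_formula_lhs(f, z, center=0j, radius=1.0, turns=1, n=4096):
    """Hypothetical helper: (1/(2 pi i)) * integral of f(w)/(w - z) dw over the circle
    |w - center| = radius traversed `turns` times counterclockwise (trapezoidal rule).
    That curve winds `turns` times around points inside and 0 times around points outside."""
    total = 0j
    for k in range(n):
        t = 2 * math.pi * turns * k / n
        w = center + radius * cmath.exp(1j * t)
        dw_dt = 1j * (w - center)
        total += f(w) / (w - z) * dw_dt
    return total * (2 * math.pi * turns / n) / (2j * math.pi)
```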


Then I tried to verify the beginning of the Lagrange inversion theorem, sometimes called the Lagrange reversion theorem. I first asked what we could say about f:U-->C if f were 1-to-1. After Mr. Matchett told me that I seemed to be from Mars, I realized that I had forgotten to indicate that f was holomorphic. Now we could say something. But first I gave a real example ("real" refers here to the real numbers):

If $f(x)=x^3$ then f is certainly smooth (real analytic!) and 1-to-1. The inverse map is not smooth at 0, of course. Can this occur in the "holomorphic category"? Well, no: if f is locally 1-to-1, then f' is not 0, so (setting g equal to the inverse of f) g'(w)=1/f'(z) where f(z)=w. And g is holomorphic.

Then after much confusion and correction by students, I tried to compute the integral of sf'(s)/(f(s)-w) over a small circle centered at z, where w=f(z). The Residue Theorem applies. The integrand has a singularity only when f(s)=w. By hypothesis (f is 1-to-1), this only occurs when s is z. The applicable winding number is 1. The pole at z is simple since f' is never 0. Thus the residue is a residue at a simple pole. Here we need the limit of (s-z)[sf'(s)/(f(s)-w)] as s-->z. But we can recognize (s-z)/(f(s)-w) as the difference quotient upside-down. So its limit is 1/f'(z), and the limit of (s-z)[sf'(s)/(f(s)-w)] as s-->z is just zf'(z)/f'(z)=z, which is g(w).

Therefore for sufficiently small r,

$$\frac{1}{2\pi i}\int_{|s-z|=r}\frac{s\,f'(s)}{f(s)-w}\,ds=g(w)\qquad\text{if } f(z)=w.$$
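A numerical sketch of this formula: evaluate the contour integral for a given z and w=f(z), and check that it returns z=g(w). The test maps f=exp (at a point where the other solutions of e^s=w lie 2Pi away) and f(s)=s+s^3 near 0.5 are our own choices, and the helper name is hypothetical.

```python
import cmath
import math


def inverse_via_contour(f, df, z, r=0.5, n=2048):
    """Hypothetical helper: with w = f(z), approximate
    (1/(2 pi i)) * integral over |s - z| = r of s f'(s)/(f(s) - w) ds
    by the trapezoidal rule.  Assumes f(s) = w has no solution in the closed disc
    |s - z| <= r other than s = z, and f'(z) != 0; the result should then be z = g(w)."""
    w = f(z)
    total = 0j
    for k in range(n):
        e = cmath.exp(2j * math.pi * k / n)
        s = z + r * e
        ds_dtheta = 1j * r * e
        total += s * df(s) / (f(s) - w) * ds_dtheta
    return total * (2 * math.pi / n) / (2j * math.pi)
```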


The significance of this result, whose consequences for power series are frequently used in combinatorics, is that the operations on the integral side (the left-hand side above) all involve f, while the right is just g.

I hope on Monday to cover Rouché's Theorem after Mr. Peters and Mr. Trainor, magnificent heroes, speak.

Monday, November 29

| Volunteers wanted! |
|---|
| Since I am tired, I would like two (2) volunteers to each prepare 15 minutes worth of Wednesday's lecture. |

I restated the Residue Theorem and discussed again some idea of the proof. The residue of a function f(z) at an isolated singularity located at p is the constant a so that f(z)-a/(z-p) has a primitive in D(p,epsilon)\{p}. So the residue measures the obstacle to having a primitive.

The remainder of the (so-called) lecture was devoted to computational examples.

Monday, November 22

I went over Cauchy's Theorem again (no, I won't let it go, since it is a principal result of the subject). Again, I asserted the consequence that in a simply connected open subset of C, holomorphic functions which are never 0 have holomorphic logarithms. From this follows the existence of holomorphic nth roots for such functions.

In a connected open set, if a holomorphic function has a log, then it has exactly countably many logs, and any two of them differ by a constant integer multiple of 2Pi i. I made a similar remark about the number of different nth roots (if at least one such function existed).

Increasing Mr. Trainor's disappointments

In addition to the possible failure of log mapping complex multiplication to complex addition (mentioned last time) I defined $A^B$ to be $e^{B\log A}$. It then turns out that $(A^B)^C$ need not equal $A^{BC}$. I think you may run into trouble if you try A=B=C=i, for example.

$\log i=i\pi/2$, so $i^i=e^{(i\pi/2)i}=e^{-\pi/2}$. Now $(e^{-\pi/2})^i=e^{i(-\pi/2)}=-i$. But $i^{i\cdot i}=i^{-1}=-i$. Darn! It seems they are the same! Please find an example where they are different.
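To experiment, here is a minimal sketch that assumes log means the principal branch (imaginary part in (-Pi, Pi]), which the notes do not specify; the helper names `principal_power` and `compare` are hypothetical. It reproduces the computation above for A=B=C=i and can be used to test your own candidates for the exercise.

```python
import cmath


def principal_power(A: complex, B: complex) -> complex:
    """A^B := exp(B * Log A), where Log is the principal branch (Im Log in (-pi, pi]).
    Choosing the principal branch is an assumption made for this sketch."""
    if A == 0:
        raise ValueError("Log 0 is undefined")
    return cmath.exp(B * cmath.log(A))


def compare(A: complex, B: complex, C: complex):
    """Return ((A^B)^C, A^(BC)), both computed with principal_power."""
    return principal_power(principal_power(A, B), C), principal_power(A, B * C)


lhs, rhs = compare(1j, 1j, 1j)  # both should be (numerically) -i
```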

A child's version of the Residue Theorem

We start with a function f holomorphic in D(0,1)\{0}, and a closed curve C in D(0,1)\{0}. What can we say about $\int_C f(z)\,dz$?

Since f is holomorphic in A(0,0,1), I can write a Laurent series for f:

$$f(z)=\sum_{n=-\infty}^{\infty}a_nz^n.$$

Now let

$$g(z)=\sum_{n=-\infty}^{\infty}a_nz^n-\frac{a_{-1}}{z}.$$

Then g(z) has a primitive in D(0,1)\{0}:

$$G(z)=\sum_{n\neq-1}\frac{a_n}{n+1}z^{n+1}$$

(n NOT equal to -1!). This is because we proved we can integrate term-by-term in the series (except for n=-1).

So

$$\int_C f(z)\,dz=\int_C \frac{a_{-1}}{z}\,dz.$$

The number $a_{-1}$ is called the residue of f at 0. What about $\int_C \frac{1}{z}\,dz$? This integral divided by 2Pi i is called the winding number of C about 0, n(C,0).
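A numerical illustration of why only the $a_{-1}$ term survives, taking the unit circle as C: the integral of $z^m$ vanishes for every integer m except m=-1, where it is 2Pi i. The example $e^{1/z}=\sum_{k\ge0} z^{-k}/k!$ has residue 1 at 0. The helper `circle_integral` is hypothetical.

```python
import cmath
import math


def circle_integral(f, radius=1.0, n=1024):
    """Hypothetical helper: trapezoidal approximation of the integral of f(z) dz
    over |z| = radius, traversed once counterclockwise (dz = i z dtheta)."""
    total = 0j
    for k in range(n):
        z = radius * cmath.exp(2j * math.pi * k / n)
        total += f(z) * 1j * z
    return total * (2 * math.pi / n)
```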

What values can $\int_C \frac{1}{z}\,dz$ have?

If we go back to our definition of the integral (selecting discs, primitives, etc.), then we see that $\int_C \frac{1}{z}\,dz$ will be

$$\sum_{j=1}^{n}\left[\log_j(C(t_j))-\log_j(C(t_{j-1}))\right].$$

Now $\log_j$ is a branch of logarithm in $D(z_j,r_j)$. Remember, this is a closed curve, so $C(a)=C(t_0)=C(t_n)=C(b)$. Regroup the sum so that each bracket compares two branches at the same point:

$$\sum_{j=1}^{n-1}\left[\log_j(C(t_j))-\log_{j+1}(C(t_j))\right]+\left[\log_n(C(t_n))-\log_1(C(t_0))\right].$$

Any two logarithms of the same point have the same real part, and their imaginary parts differ by an integer multiple of 2Pi. So each bracket is in 2Pi iZ. Therefore the values of $\int_C \frac{1}{z}\,dz$ are just in 2Pi iZ.

Please note that I gave a rather different impression of this proof in class. In particular, I seemed almost to insist that I needed to give a formula for the branch of logarithm in $D(z_j,r_j)$. This meant that I needed to give a formula for argument in that disc. I got into trouble trying to be precise. In fact, all I really needed was the knowledge that a branch of log existed, and a complete description of the possible values of log(z). I knew those things and could use them to complete the proof as shown above. I didn't need to compute further.

The winding number

Suppose now C:[a,b]-->C is a closed curve, and the complex number w is not in C([a,b]). Then the winding number of C with respect to w is

$$n(C,w)=\frac{1}{2\pi i}\int_C \frac{1}{z-w}\,dz.$$
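A numerical sketch of the winding number for a curve that is not a circle. The example curve, the limaçon r=1/2+cos(theta), is our own choice: it has an inner loop, so points inside that loop (such as 0.25) should have winding number 2, points such as 1 inside only the outer loop should have winding number 1, and points such as 2 outside should have winding number 0. The helper computes n(C,w) from summed arg increments of C(t)-w; both names are hypothetical.

```python
import cmath
import math


def winding_number(curve, w, n=8000):
    """Hypothetical helper: n(C, w) for a closed curve C:[0,1] -> C (curve(0) == curve(1)),
    from summed arg increments of C(t) - w.  Assumes w is not on the curve and n is large
    enough that consecutive increments stay below pi."""
    turns = 0.0
    prev = curve(0.0) - w
    for k in range(1, n + 1):
        cur = curve(k / n) - w
        turns += cmath.phase(cur / prev)
        prev = cur
    return round(turns / (2 * math.pi))


def limacon(t: float) -> complex:
    """Hypothetical example curve r = 1/2 + cos(theta), theta = 2 pi t, with an inner loop."""
    theta = 2 * math.pi * t
    return (0.5 + math.cos(theta)) * cmath.exp(1j * theta)
```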

Properties of the winding number

A grownup's version of the Residue Theorem

The hypotheses are a bit long. First, we need an open subset U of C, and a discrete subset, P, of U with no accumulation point in U. (Depending on your definition of "discrete in U", the last requirement may not be needed, but I'd like to specify it anyway.) Now we need f, a function holomorphic in U\P. And we need a closed curve C defined on [a,b] so that C([a,b]) is contained in U\P, and so that C is nullhomotopic in U (homotopic to a constant through closed curves in U).

The conclusion evaluates $\int_C f(z)\,dz$:

$$\int_C f(z)\,dz=2\pi i\sum_{w\in P}n(C,w)\,\mathrm{res}(f,w).$$

Note The residue of f at w, res(f,w), is the coefficient of 1/(z-w) in the Laurent series of f in a tiny deleted disc at w: D(w,epsilon)\{w}.

So this theorem turns out to be a very powerful result, with many applications both in theory and in practical computation. But it isn't even clear that the sum described in the theorem is finite! But it is. Since C is nullhomotopic, there is a homotopy H:[a,b]x[0,1]-->U which is a continuous map so that H(_,0)=C and H(_,1) is a point. Let K=H([a,b]x[0,1]). Since H is continuous, K is a compact subset of U. Now P has only finitely many points in K (P has no accumulation point in U). If w is not in K, then n(C,w)=0 since n(H(_,1),w)=0 and we use the last remark about winding numbers above. So the sum is indeed finite. (I think this is tricky: we don't need the Jordan curve theorem or anything, just some cleverness!)

Proof Suppose $w_1,\dots,w_n$ is the list of all w's which are both in P and in K (a finite set!). Let $S_j(z)$ be the sum of the terms with negative powers of $(z-w_j)$ in the Laurent series of f at $w_j$ (the principal part). From the theory of Laurent series, we know that this series converges absolutely and uniformly on compact subsets of C\{w_j}, and so is holomorphic on C\{w_j}. The difference $f-\sum_{j=1}^nS_j$ has removable singularities at the $w_j$: consider them removed. It is then holomorphic on U minus the points of P outside K, an open set which contains K, the image of the homotopy H. Now by Cauchy's Theorem,

$$\int_C\Big(f(z)-\sum_{j=1}^nS_j(z)\Big)\,dz=0.$$

Therefore we only need to compute the integral of $S_j(z)$ over C to complete our proof. But, just as in the child's version above, the "higher" terms (that is, $(z-w_j)^{-m}$ with m>1) integrate to 0 since they have primitives in C\{w_j}. The only term that remains gives us exactly $2\pi i\,n(C,w_j)\,\mathrm{res}(f,w_j)$. Summing over j, and recalling that n(C,w)=0 for the points of P outside K, we're done.
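A numerical check of the theorem on a hypothetical example of our own: f(z)=e^z/(z(z-1)) has simple poles at 0 and 1 with residues -1 and e. The circle |z|=2 winds once around both, so its integral should be 2Pi i(e-1); the circle |z-1|=1/2 winds once around 1 and zero times around 0, so its integral should be 2Pi i e. The helper `circle_integral` is hypothetical.

```python
import cmath
import math


def circle_integral(f, center=0j, radius=1.0, n=4096):
    """Hypothetical helper: trapezoidal approximation of the integral of f(z) dz over
    |z - center| = radius, traversed once counterclockwise."""
    total = 0j
    for k in range(n):
        e = cmath.exp(2j * math.pi * k / n)
        total += f(center + radius * e) * 1j * radius * e
    return total * (2 * math.pi / n)


def f(z):
    # Hypothetical example with simple poles at 0 and 1.
    return cmath.exp(z) / (z * (z - 1))


# Residues at simple poles: the limit of (z - p) f(z) as z -> p.
res0 = cmath.exp(0) / (0 - 1)   # -1
res1 = cmath.exp(1) / 1         # e

big = circle_integral(f, 0j, 2.0)          # n(C,0) = n(C,1) = 1
small = circle_integral(f, 1 + 0j, 0.5)    # n(C,0) = 0, n(C,1) = 1
```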


I'll spend the next week giving applications (computation of definite integrals) and proving powerful corollaries (Rouché's Theorem) of the Residue Theorem.

Wednesday, November 17

Today's vocabulary

recondite 1. (of a subject or knowledge) abstruse; out of the way; little known. 2. (of an author or style) dealing in abstruse knowledge or allusions; obscure.

abstruse

prolix

trenchant

incisive

méchant (French: nasty, wicked)

We deduced various forms of Cauchy's Theorem (I believe I wrote three of them). To do this, I remarked that if the image of a closed curve is inside an open disc, then the integral of a holomorphic function around that closed curve is 0. This is a consequence of our method last time: we can take one open disc for the collection of discs, one primitive F, and as partition just the endpoints of the interval. Then the sum is F(S(b))-F(S(a)), which is 0 because the curve is closed. (Proof suggested by Mr. Nguyen.)

Now I used something (uniform continuity, compactness, Lebesgue number, stuff) to see the following: if H:[a,b]x[0,1]-->U is continuous (for example, a homotopy!) then there are integers m and n and a collection of open discs D(z_{jk},r_{jk}) in U, for 1=<j<=n and 1=<k<=m, so that (with t_j=a+j(b-a)/n and s_k=k/m)

$$H([t_{j-1},t_j]\times[s_{k-1},s_k])\subset D(z_{jk},r_{jk}).$$

This is so darn complicated! Well, it means that we can break up the rectangle [a,b]x[0,1] into little blocks so that the image of each little block fits inside an open disc contained in U. But then the integral of f(z) dz (for f analytic in U) over the image of the boundary of that block must be 0 (using Mr. Nguyen's fact). And it is easy (?!) to see that summing over all the blocks gives the following result. The interior edges cancel in pairs, and the left and right edges cancel because every intermediate curve is closed. So for closed curves S and T with images in U which are homotopic through closed curves,

$$\int_S f(z)\,dz=\int_T f(z)\,dz.$$

And this was one of the three forms of Cauchy's Theorem I wrote.


An open subset U of C is simply connected if every closed curve in U is homotopic (through closed curves in U) to a constant. Another version of Cauchy's Theorem is that the integral of any holomorphic function around a closed curve in a simply connected open set must be 0. (By the way, it saves worrying about things if we also assume in this discussion that the open sets are connected.)

Now I asked for examples of simply connected sets, and I was given the disc. My homotopy of a closed curve to a point (a nullhomotopy) just involved a linear interpolation of the curve to a point, so apparently I only needed the open set to be convex, or even just star-shaped with respect to that point.

Now I asked for an example of an open connected set which was not simply connected. Here we used the annulus A(0,0,2), the punctured disc 0<|z|<2, as U. We claimed that the unit circle (the boundary of the unit disc) was a simple closed curve (true) not homotopic to a point in U. Why? Well, the integral of 1/z around that curve is 2Pi i, not 0. Since 1/z is holomorphic in U, Cauchy's Theorem would force this integral to be 0 if the curve were nullhomotopic in U, so it is not. This is really cute. (I mentioned, which is true but definitely not obvious, that the functions 1/(z-a), for a not in U, are enough to "test" whether the integral of every holomorphic function around a curve is 0. Nullhomotopy itself is subtler, as the twice-punctured plane below shows.)

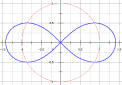

I defined the first homotopy group (the fundamental group) of a topological space, based at a point. Its elements are the loops through the point modulo the equivalence relation of homotopy (keeping the base point fixed), and the group operation is "follow one curve by another". There are then lots of details to check. The fundamental group is a topological invariant (homeomorphic spaces have isomorphic fundamental groups). This group can be trivial (true when U is simply connected). Or it can have a whole copy of Z in it: take U=A(0,0,2), use "going around the unit circle n times", and use integration of 1/z to show that no two of these curves are homotopic. In fact, the group can be complicated and not obvious. A "twice-punctured plane" (say C\{+/-1}, which is sort of topologically the lemniscate) has fundamental group equal to the free group on two generators (the free product Z*Z), and this group is not commutative. This can't be checked by Cauchy's Theorem, since the integral evaluated on a "curve" which is made from commutators will always be 0. This needs a result called Van Kampen's Theorem. So: enough of that stuff (topology).

0. If U is simply connected and f is holomorphic in U, then f has a primitive in U: a function F, holomorphic in U, so that F´=f.

This is true because we saw that such an F exists exactly when the integral of f around closed curves in U is 0, and this hypothesis is fulfilled due to Cauchy's Theorem.

1. If U is simply connected and if f is holomorphic in U and never vanishes, then f has a holomorphic log: there is g holomorphic in U with e^g=f.

By reasoning backwards (we did this before) we know how to walk forwards. So: consider k=f´/f, holomorphic in U since f never vanishes. By 0, this function has a primitive K, and we can consider G=e^K. The function f/G (G can't be 0 since exp is never 0) has derivative 0 (just compute algorithmically). Since U is connected (I hope!), f/G is constant. Since neither f nor G is zero, f/G is a non-zero constant. But then there is a constant q so that e^q=f/G, and so f=e^{K+q}, and g=K+q is our holomorphic log.
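For the record, here is the "algorithmic" computation that f/G has derivative 0, using G=e^K and K'=f'/f:

$$\left(\frac{f}{G}\right)'=\frac{f'G-fG'}{G^2}=\frac{f'e^{K}-f\,K'e^{K}}{e^{2K}}=\frac{f'-f\cdot\frac{f'}{f}}{e^{K}}=0.$$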

How many holomorphic logs are there? Suppose g_1 and g_2 are holomorphic logs of f on U (a connected U). Then e^{g_1-g_2}=1, and therefore the continuous function g_1-g_2 takes U into 2Pi i Z (2Pi i times the integers). But the latter set is discrete, so, since U is connected, the function g_1-g_2 must be constant. Conversely, adding any integer multiple of 2Pi i to a holomorphic log gives another one. Thus we see that if a function has a holomorphic log, then it has infinitely many holomorphic logs, and these functions differ by integer multiples of 2Pi i.

An "undergraduate" problem


Look at the domain pictured on the right, the domain G (heh, heh, heh). I claim that this open set is connected, does not include 0, and is also simply connected. A totally rigorous proof of all of these statements would need, of course, a really careful description of G, which I have not given. Well, but "of course" you can see G is connected. It is arcwise connected: a curve is drawn connecting 1 to 2 in G, and other curves can be drawn to connect any pair of points in G. And maybe (almost "of course") you can see that G is simply connected: put a closed curve in G, and you can almost see how to untwist it (always staying in G!) and get it to shrink to a point (in G).

Now the function z is holomorphic and non-zero in G. So z has a holomorphic log, L(z), and I know therefore that e^{L(z)}=z in G. But wait: we earlier (September 13) explicitly solved e^w=z. Thus we know that L(z) is a complex number whose real part is ln(|z|) (the "natural log" of |z|) and whose imaginary part is arg(z), one of the arguments of z. Now we are allowed by our previous discussion to specify L(z)'s value at one point (up to a multiple of 2Pi i). So I will declare that L(1) is 0.

Now I ask what L(2) must be. Well, it is a complex number whose real part is ln(2). What is its imaginary part? First, an approximate answer. Suppose you "walk along" the dashed red path from 1 to 2, pay attention to arg, and start with arg(1)=0. Then you will end up with arg(2)=2Pi. That's because arg increases, and we travel once totally around 0: L(2)=ln(2)+2Pi i. More rigorously, we could assert that we have a C^1 function whose derivative obeys what the derivatives of arg should be, and integrate the darn thing as the path goes from 1 to 2. We'll end up with the same increase in arg. So although we can get a holomorphic log on G, it may not agree very much with the standard log on G intersect R.

Mr. Trainor asked about the implications for the formula log(ab)"="log(a)+log(b). I put quotes around the equality because it is not likely to be true, and if you ever want to use it, you should worry about its validity. Yes, the complex numbers are wonderful, but their use could also be ... complicated.
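The "walk along the path and pay attention to arg" recipe can be imitated numerically. This is a sketch under assumptions. G is not described precisely, so the path z(t)=(1+t)e^{2Pi i t} below is a hypothetical stand-in for the dashed red path: it goes from 1 to 2, winds once around 0, and never meets 0. At each small step the code adds the principal Log of z(t_k)/z(t_{k-1}). That ratio is close to 1, so the increments fit together into a continuous log.

```python
import cmath
import math


def continue_log_along_path(path, steps=10_000, start_value=0j):
    """Continue a log from path(0) along path(t), 0 <= t <= 1, in small steps.

    start_value is the chosen value of the log at path(0) (here L(1) = 0).
    For small steps path(t_k)/path(t_{k-1}) is near 1, where the principal
    Log is continuous, so the running sum is a continuous branch of log.
    """
    value = start_value
    previous = path(0.0)
    for k in range(1, steps + 1):
        current = path(k / steps)
        value += cmath.log(current / previous)
        previous = current
    return value


def hypothetical_path(t):
    # goes from 1 to 2, winding once counterclockwise around 0
    return (1 + t) * cmath.exp(2j * math.pi * t)


L2 = continue_log_along_path(hypothetical_path)
print(L2)             # about ln(2) + 2*pi*i
print(cmath.log(2))   # the principal log: ln(2), imaginary part 0
```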

As we saw before, if we have a holomorphic log, we then have holomorphic square roots and cube roots and ... we will continue to discuss this next time.

Monday, November 15

Go to the colloquium when you know that the talk will be comprehensible. And interesting. Better, bring work with you and sit in the back. Try diligently to understand for 5 minutes, and then ... do what you would like to do.

What now?

Preliminary definition (with lack of specificity)

I first tried to define homotopy. Two closed curves S and T in U, defined on [a,b], are homotopic if there is a map H:[a,b]x[0,1]-->U so that H(_,0)=S and H(_,1)=T.

Analysis Well, we'd better have H continuous, or else we don't have much information about the relationship between S and T (observation contributed by Mr. Nguyen). And maybe we'd better have c_v=H(_,v) be a closed curve for every v in [0,1], or unlikely things can happen, as I tried to draw in class. For example, without this condition, a closed curve around 0 in D(0,1)\{0} could be homotopic to a constant. (Contributed by Mr. Nandi.)

Now I want to prove Cauchy's Theorem, and this should say something like: if S and T are homotopic closed curves (with a continuous homotopy through closed curves) and f is holomorphic in U, then the integral of f around S is equal to the integral of f around T. Well, what we could do is try to study the function $v\mapsto\int_{c_v} f(z)\,dz$ (v in [0,1]) and see (somehow!) that this function is constant. Unfortunately, there is a serious technical difficulty. In our previous definitions the curves S and T are supposed to be piecewise C^1. But the homotopy H, and the curves c_v it gives, need not be more than continuous.


Giuseppe Peano did not invent the piano, nor did he invent the integers, although he formulated a nice set of axioms for them (but Kronecker stated, "God created the integers, all else is the work of man."). Peano showed that there are continuous maps from intervals in R onto regions in R^2 (space-filling curves). Here is more information. Please note that these maps are not 1-1. But it does seem difficult to imagine how we could integrate f(z) dz over a random continuous curve.

There are various ways to analyze the integral over H(_,v) now. We can use simplicial approximation and get piecewise linear curves, or we can approximate by convolving with a C^infinity function to get nice smooth curves. I will follow a more primitive method. (There is a joke in that sentence.)

We will be able to define such integrals by taking advantage of the holomorphicity of f(z). In differential geometry language, we will use the fact that f(z) dz is closed and is therefore locally exact.

Creation of the "data" used to "integrate"

Suppose S:[a,b]-->U is a continuous map. Then K=S([a,b]) is compact in U. We can in fact find a partition of [a,b],

$$a=t_0<t_1<\dots<t_{n-1}<t_n=b,$$

so that each S([t_{j-1},t_j]) is inside an open disc D(z_j,r_j) contained in U. This follows from compactness and uniform continuity, I think (a Lebesgue number argument). In each D(z_j,r_j) we know that f has a primitive F_j, so F_j´(z)=f(z) in D(z_j,r_j) (contributed by Mr. Matchett earlier in the course). Then, if P is the partition, D is the collection of open discs, and F is the collection of primitives, we define I(f,S,P,D,F) to be

$$\sum_{j=1}^n\big(F_j(S(t_j))-F_j(S(t_{j-1}))\big).$$

I changed notation from S used in class to I here because I can't write the Greek letters (such as sigma) in html.
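Here is a small numerical sketch of I(f,S,P,D,F) for f(z)=1/z on U=C\{0}, with S the unit circle. The choices are assumptions made for illustration:

- the partition is uniform with n>=4 pieces;
- the disc for the j-th piece is D(c_j,1), where c_j is the image of the midpoint of the piece (this disc avoids 0 and contains the piece);
- the primitive there is F_j(z)=Log(c_j)+Log(z/c_j), using the principal Log.

Changing n changes P, D and F all at once, yet the sum stays 2Pi i, as the lemmas below predict.

```python
import cmath
import math


def primitive_of_inv_z(center):
    """A primitive of f(z) = 1/z on the disc D(center, |center|).

    For z in that disc Re(z/center) > 0, so the principal Log of z/center is
    holomorphic there; Log(center) + Log(z/center) has derivative 1/z.
    """
    base = cmath.log(center)
    return lambda z: base + cmath.log(z / center)


def I_sum(curve, partition, primitives):
    """SUM_j F_j(S(t_j)) - F_j(S(t_{j-1})) for a given partition and primitives."""
    return sum(F(curve(t1)) - F(curve(t0))
               for F, t0, t1 in zip(primitives, partition, partition[1:]))


def unit_circle(t):
    return cmath.exp(2j * math.pi * t)


def I_for_uniform_partition(n):
    """Uniform partition of [0, 1] into n >= 4 pieces; the j-th disc is
    D(c_j, 1), with c_j the image of the midpoint of the j-th piece."""
    partition = [j / n for j in range(n + 1)]
    centers = [unit_circle((t0 + t1) / 2) for t0, t1 in zip(partition, partition[1:])]
    primitives = [primitive_of_inv_z(c) for c in centers]
    return I_sum(unit_circle, partition, primitives)


for n in (4, 8, 13):
    print(n, I_for_uniform_partition(n))  # each is about 2*pi*i
```

The condition n>=4 keeps each arc, whose endpoints are 2sin(Pi/(2n)) from c_j, strictly inside D(c_j,1).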

Now there is a sequence of observations and lemmas.

| Lemon | Lemming | Lemniscate |
|---|---|---|

#1 Consistency with the previous definition

If S is a piecewise C^1 curve, then the value of I(f,S,P,D,F) is the same as $\int_S f(z)\,dz$.

We can write the integral as a sum of integrals along the various pieces of the curve, that is, of S restricted to [t_{j-1},t_j]. For each of those we already saw that, since the piece is inside an open disc, the line integral of f can be computed by taking the difference F(S(t_j))-F(S(t_{j-1})), where F is any antiderivative of f in that disc. Therefore the old definition agrees with the "new" definition piece by piece.

#2 The choice of primitives doesn't matter

We can change primitives. Thus if G_j and F_j are both primitives of f in D(z_j,r_j), then, since the disc is connected, the functions differ by a constant. Thus

$$F_j(S(t_j))-F_j(S(t_{j-1}))\quad\text{and}\quad G_j(S(t_j))-G_j(S(t_{j-1}))$$

agree. Therefore the sum we have defined does not depend on the selection of F.

#3 The choice of discs doesn't matter

We can change discs. That is, suppose we know that S([t_{j-1},t_j]) is inside both D(z_j,r_j) and D(w_j,p_j), with corresponding primitives F_j and G_j. Then S([t_{j-1},t_j]) is contained in the intersection of the discs. This intersection is an intersection of convex open sets and is hence convex and open. It is also non-empty, since it contains S([t_{j-1},t_j]). Therefore the intersection is connected and open, and the primitives F_j and G_j of f must again differ by a constant there. So

$$F_j(S(t_j))-F_j(S(t_{j-1}))=G_j(S(t_j))-G_j(S(t_{j-1}))$$

again, and the sum we have defined does not depend on the selection of D.

It is also true that the sum doesn't depend on the partition, but here some strategy (perhaps borrowed from the definition of the Riemann integral) is needed. I'll first "refine" a partition by one additional point.

#4 Adding a point to a partition doesn't change the sum

Let P be one partition,

$$a=t_0<t_1<\dots<t_{n-1}<t_n=b,$$

so that S([t_{j-1},t_j]) is always inside an open disc D(z_j,r_j) in U, with F_j the corresponding primitive. Let w be a point in [a,b] which is not equal to one of the t_j's, so w lies in some (t_{j-1},t_j). Then look at the corresponding data. The only difference is

$$F^*_{j+1}(S(t_j))-F^*_{j+1}(S(w))+F^*_j(S(w))-F^*_j(S(t_{j-1}))$$

in the partition with w, and

$$F_j(S(t_j))-F_j(S(t_{j-1}))$$

without w. But we can add and subtract F_j(S(w)) in the latter sum. Notice that F_j and F^*_j are both antiderivatives, again, of the same function (f) in a connected open set containing S([t_{j-1},w]) (again, a disc or the intersection of two discs), so they differ by a constant there. And the same is true of the other "piece", with F_j and F^*_{j+1}. So there is no difference if we make the partition "finer" by adding one more point.

#5 The choice of partitions doesn't matter

Two partitions P and Q give the same result for their corresponding sums. This is because the partition R which is the union of P and Q is a common refinement, and it is obtained from each of P and Q by adding one point "at a time". So by the previous result, the sums must be the same.

Lemma Suppose S is a continuous curve from [a,b] to U, and f is holomorphic in U. Then I(f,S,P,D,F) does not depend on P or D or F when the choices are made subject to the restrictions described above. Also, if S is a piecewise C^1 curve, this sum is equal to the integral of f on S.

Therefore, we extend the definition previously used for piecewise C^1 curves by calling this sum $\int_S f(z)\,dz$.

Next time I will formally state a homotopy version of Cauchy's Theorem, and prove it using this lemma.

Return of the exam; discussion

I returned the exam. I emphasized that I wished students to do as well as possible. For example, it is perfectly permissible to study with each other, and therefore there is little reason not to do as well as possible on problems which I have previously shown to you. I also asserted that I will not give more exam problems on topology. I have tried.

Wednesday, November 10

Monday, November 8

I talked a bit more (but only a bit more!) about Riemann surfaces. I remarked that:

If S is a compact and connected Riemann surface, then every holomorphic function on S is a constant.

Why is this? If F:S-->C is holomorphic, then |F| is a continuous function on S, and hence |F| achieves its maximum at some point p in S. But then in a (connected) coordinate chart around p, we have a holomorphic function which has a local maximum in modulus. In that chart, the function F must therefore (Max Modulus Theorem) be a constant. What now? We have a function, F, which is constant in some non-empty open subset of S. We can use connectedness and the identity theorem to conclude that F is constant on S.

Thus for compact connected Riemann surfaces S, H(S) is just C. What about M(S)? This is the set of functions on S which locally look like (z-p)^k(non-zero holomorphic function), with k an integer (together with the zero function). M(S) is a field (S connected), and it always contains the complex numbers, so it is a field extension of C. Such fields are called function fields.

In the case of CP^1, we saw that all rational functions give elements of M(CP^1). If f is a meromorphic function on CP^1, then the pole set of f is finite (the pole set is discrete and CP^1 is compact). We can multiply by an appropriate product of (z-p_j)^{m_j}'s (m_j the order of the finite pole p_j) to cancel the poles in C. What is left is an entire function with at worst a pole at infinity, that is, a polynomial. Thus every element of M(CP^1) is a rational function: M(CP^1)=C(z), a simple transcendental extension of C by z.

Now I looked at L, the set of Gaussian integers, in C. A Gaussian integer is n+mi where n and m are integers. L is certainly an additive subgroup of C, and it is a discrete subset. L is "maximal" in the following sense: if we include another element of C, the subgroup generated is either again isomorphic to L (as a group, Z^2) or it is not discrete in C. Sigh. I think that is what I meant by maximal here. (Strictly, L is not maximal among all discrete subgroups, since (1/2)L is discrete and larger; "maximal" refers to rank.)

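Anyway, give C/L the quotient topology. Then C/L is a compact topological space (it is the image of the closed unit square). It is homeomorphic to S^1xS^1, a torus. C/L is also a Riemann surface. Its coordinate charts are local inverses of the quotient map: on a small enough disc the quotient map is 1-1, so the coordinate is just z.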

Functions on C/L correspond to functions on C which are doubly periodic: f(z)=f(z+i)=f(z+1) for all z in C. If such a function is entire, then, since all its values are taken on the closed unit square (a compact set), the function is bounded and thus by Liouville must be constant. So again we have proved that H(T) consists of constant functions, where T is the torus C/L. What about M(T)?

Notice that the function z in M(CP^1) has a single pole, of order 1. Is there such a function in M(T)? Suppose f has a pole at a. Imagine a being in the center of a "period parallelogram" for T, so a is inside the square formed by 0, 1, i, and 1+i. Suppose a is the only pole of f in the closed square and that it is simple. Then near a, f(z)=b/(z-a)+(a power series in (z-a)) (just the Laurent series). We can integrate f over the boundary of the unit square, and we've seen that this integral is 2Pi i b. But the integral over the boundary of the square is also 0. The two horizontal sides cancel because the function is i-periodic, and the two vertical sides cancel because the function is 1-periodic. Thus b=0, so a is not a pole after all. There is no meromorphic function on T with exactly one simple pole.

Please note that there are complex two-dimensional manifolds which have no non-constant meromorphic functions. So it might be possible that there are no non-constant meromorphic functions on T. But there are many, and they are called, classically, elliptic functions.

Creating a silly elliptic function

We look at a function and "average it" over L. In this case, consider

$$G(z)=\sum_{n+mi\in L}\frac{1}{(z-(n+mi))^7}.$$

Let's see why this function converges "suitably". If A>0 is fixed, then let's split the sum:

$$G(z)=\sum\nolimits_{I}\frac{1}{(z-(n+mi))^7}+\sum\nolimits_{II}\frac{1}{(z-(n+mi))^7},$$

where the first sum is over those n+mi which are inside D(0,2A) and the other sum, the "infinite tail", is over those which are outside. The infinite sum can be estimated:

$$\Big|\sum\nolimits_{II}\frac{1}{(z-(n+mi))^7}\Big|\le\sum\nolimits_{II}\frac{1}{|z-(n+mi)|^7}.$$

For z in D(0,A) and |n+mi|>=2A, the triangle inequality gives |z-(n+mi)|>=|n+mi|/2, so this is bounded above by

$$\sum\nolimits_{II}2^7\,(n^2+m^2)^{-7/2}.$$

But this last sum converges. So by the Weierstrass M-test, in D(0,A) the function G(z) is $\sum_I$ plus a holomorphic function. Here $\sum_I$ is a rational function with poles of order 7 at the lattice points in D(0,2A), that is, one pole in each period parallelogram. Since the series converges absolutely, we may re-index it, so G(z+1)=G(z+i)=G(z). Thus G(z) does converge to a non-trivial elliptic function, with a pole of order 7 on T.

We generalized this reasoning: 7 is not special. By using a two-dimensional integral test, we saw that for exponents 3 and above, the analog of G(z) converges (the sum over non-zero n+mi of |n+mi|^{-s} converges exactly when s>2).
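Here is a quick numerical check of the periodicity, as a sketch. The code truncates the lattice sum for G to the square |n|,|m|<=N; the truncation and the sample point z are assumptions made for illustration. For exponent k>=3, shifting by 1 or by i changes the truncated sum only by far-away boundary terms, so the differences are tiny compared to G itself.

```python
def G_truncated(z, N=30, k=7):
    """Sum of 1/(z - (n + m i))**k over |n| <= N and |m| <= N.

    A square truncation of the lattice sum, chosen only for illustration;
    for k >= 3 the full sum converges absolutely.
    """
    total = 0j
    for n in range(-N, N + 1):
        for m in range(-N, N + 1):
            total += 1 / (z - complex(n, m)) ** k
    return total


z = complex(0.3, 0.2)  # an arbitrary point which is not a lattice point
g = G_truncated(z)
print(abs(G_truncated(z + 1) - g))   # tiny
print(abs(G_truncated(z + 1j) - g))  # tiny
print(abs(g))                        # not tiny, so the comparison means something
```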

The most famous elliptic function

This is the analog of G(z) when the exponent is 2. But then the sum we needed using our method doesn't converge absolutely! Weierstrass defined his P-function (the P should be a German fraktur letter) in the following way:

$$P(z)=\frac{1}{z^2}+{\sum}'\left[\frac{1}{(z-(n+im))^2}-\frac{1}{(n+im)^2}\right].$$

Here the ' in the sum indicates that we add over all n+im in L except for the n=0 and m=0 term. Then this sum does converge suitably. Why is this? The difference [1/(z-(n+im))^2]-[1/(n+im)^2] does cancel out the worst part of the singularity at n+im. If you do the algebra, the difference (when |z| is controlled) is not O((n^2+m^2)^{-1}), which would give a divergent sum. It is O((n^2+m^2)^{-3/2}), and the sum of those converges.
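Here is the algebra, writing ω=n+im (a shorthand introduced only for this computation). Take ω≠0 and |ω|>=2|z|, so that |z-ω|>=|ω|/2. Then

$$\frac{1}{(z-\omega)^2}-\frac{1}{\omega^2}=\frac{\omega^2-(z-\omega)^2}{\omega^2(z-\omega)^2}=\frac{2z\omega-z^2}{\omega^2(z-\omega)^2},\qquad \left|\frac{2z\omega-z^2}{\omega^2(z-\omega)^2}\right|\le\frac{2|z||\omega|+|z|^2}{|\omega|^2\cdot|\omega|^2/4}\le\frac{10\,|z|}{|\omega|^3},$$

and the sum of |ω|^{-3} over non-zero ω in L converges, as for exponent 3 above.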

It turns out that (P'(z))^2=4P(z)^3-g_2P(z)-g_3, where g_2 and g_3 are constants depending on L. This result is proved using the Residue Theorem and fairly simple manipulations. Then more can be shown: an element f of M(T) can be written as R(P)+Q(P)P', where R and Q are rational functions in one variable. Thus the field M(T)=C(P,P') is an algebraic extension of degree 2 of the purely transcendental extension C(P) of C. Wonderful!

My next and maybe concluding goals in the course are to verify suitably broad versions of Cauchy's Theorem and the Residue Theorem. I wished everyone good luck in their algebra exam.

Wednesday, November 3

I will continue to provide a gloss of the notes I wrote a decade ago. According to the online dictionary, gloss means

1. [Linguistics][Semantics] a. an explanatory word or phrase inserted between the lines or in the margin of a text. b. a comment, explanation, interpretation, or paraphrase. 2. a misrepresentation of another's words. (It certainly can't be the second definition.)

PDF of page 10 of my old notes

I wanted to show that elements of LF(C) map {lines/circles} to {lines/circles}. Let me try to say this more precisely. If W is a subset of the complex numbers which happens to be a (Euclidean) line or circle, and if T is in LF(C), then I wanted to show T(W) is a (Euclidean) line or circle. In fact, even this is inaccurate, because I need to look at the closure of W as a subset of CP^1. In CP^1, a "straight line" has infinity in its closure. If you look at the stereographic picture, lines are just circles on the 2-sphere which happen to go through infinity.

As I mentioned in class, a direct proof, computing everything in sight, is certainly possible. It might be easier to understand the proof if we examine the structure of LF(C). It is a group. And so, taking advantage of this group structure, we need only verify the statement to be proved for generators of this group, since it will then be true for all products of these generators.

PDF of page 11 of my old notes

On this page I complete the proof that the elements suggested as generators of LF(C) indeed are generators, and I began to describe the elements of the set {lines/circles} in what I hoped was a useful way. Note for later comments, please, that the description given (see the bottom of page 11 and the top of page 12) depends upon 4 real parameters.

PDF of page 12 of my old notes

Now we compute how the generators found for LF(C) interact with the description of {lines/circles}. Translations and dilations are simple. It is worth pointing out, as I tried to, that inversion in a circle takes {lines/circles} to {lines/circles}. (Under such an inversion a point goes to another point on the same ray from the center of the circle, with the product of the distances to the center being the square of the radius.) The fixed point set of such an inversion is exactly the circle itself. The map z-->1/z is inversion in the unit circle followed by reflection across the real axis.

PDF of page 13 of my old notes

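I went on a bit now, which I did not do in the notes. I remarked that Euclid "proved" that three distinct points in the plane are contained in a unique element of {lines/circles}. An efficient proof, I think, would use linear algebra. However, I can't immediately see a simple reason that the determinant of

$$\begin{pmatrix} a & \bar a & a\bar a\\ b & \bar b & b\bar b\\ c & \bar c & c\bar c\end{pmatrix}$$

should not be zero. In fact it can be zero: for example, when a, b, c are distinct real numbers the first two columns agree. The equation of an element of {lines/circles} also has a constant term, so the matrix which matters is the 3-by-4 matrix with rows $(a\bar a, a, \bar a, 1)$, $(b\bar b, b, \bar b, 1)$, $(c\bar c, c, \bar c, 1)$, and what is needed is that it have rank 3.

But, with this Euclidean observation in mind, we then see immediately that the elements of LF(C) act transitively on the set {lines/circles}. Given two elements of {lines/circles}, choose three distinct points on each. Triple transitivity gives T in LF(C) taking the first triple to the second. Then T of the first element is an element of {lines/circles} through the second triple, so it is the second element.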

I think I then paused for examples. Let's see: I transformed the boundary of the unit disc, |z|=1, to |z-3/2|=1/2, and then changed that circle to something like |z-5|=2. I noted that although circles (and lines) get changed as proved, the center of a circle does not necessarily get moved to the center of the corresponding circle. If this were assumed, other computations would be simpler but would also be wrong.
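To see concretely that centers are not carried to centers, here is a sketch (not from the notes) using z-->1/z on the circle |z-5|=2. Take a circle |z-c|=r with c real and c^2 not equal to r^2. Its image is symmetric about the real axis and meets it at 1/(c-r) and 1/(c+r). So the image has center c/(c^2-r^2) and radius r/|c^2-r^2|. Here that gives center 5/21 and radius 2/21, while the image of the old center 5 is 1/5.

```python
import cmath
import math


def image_circle_under_inversion(c, r):
    """Center and radius of the image of |z - c| = r under z -> 1/z,
    for real c with c**2 != r**2 (so the circle misses 0)."""
    return c / (c * c - r * r), r / abs(c * c - r * r)


c, r = 5.0, 2.0
center, radius = image_circle_under_inversion(c, r)  # 5/21 and 2/21

# sample the original circle and check every image point lies on the predicted circle
samples = [1 / (c + r * cmath.exp(2j * math.pi * k / 64)) for k in range(64)]
max_dev = max(abs(abs(w - center) - radius) for w in samples)
print(center, radius, 1 / c, max_dev)
```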

I tried to map the region between the imaginary axis and the circle |z+1|=1 (a circle with center at -1 and radius 1, tangent to the imaginary axis) to the interior of the unit circle. We found that 0 had to be mapped to infinity. And we further discussed the situation.

A parameter count

LF(C) is triply transitive on CP^1, and, in fact, each element of LF(C) is determined by its values on three distinct points (this can be used to define the cross ratio, an interesting invariant attached to LF(C)). But 3 complex parameters is the same as 6 real parameters. The description of {lines/circles} previously given uses 4 real parameters. However, multiplying the equation by a non-zero real number does not change the set, so an element of {lines/circles} really depends on 3 real parameters (think: center and radius). What happens to the other 3 numbers? Of course, this is the same as asking what subgroup of LF(C) preserves a particular element of {lines/circles}.

Let's look at R and ask which T's in LF(C) preserve R. We can guess some T's such that T(R) is contained in R (and thus T(R) would have to be equal to R!): T(z)=z+1, or even T(z)=z+b, for b real. Thus if T(0) is not 0, we can pull T(0) back to 0 with a reverse translation (I am trying to build up enough elements to generate the subgroup!). Now suppose T(0)=0 and T fixes infinity. Then T(1) is something else, say T(1)=a, with a real and not zero, and T(z)=az. Thus we now have the subgroup of T's given by the formula T(z)=az+b with a not 0 and a and b real. But, wait, we have ignored a prominent member of R's closure in CP^1: infinity. So far we know T(infinity)=infinity. But that need not be the case. Look at T(z)=1/z, which surely preserves R (in CP^1!). If we now think about it, we've got everything:

The collection of elements of LF(C) which preserve R is generated by az+b (a,b real, a not 0: the affine group) and z-->1/z. These generate exactly the maps (az+b)/(cz+d) with a, b, c, d real and ad-bc not 0.

The parameter count is now correct. Such a map depends on 3 real parameters (four real coefficients, up to a common non-zero real factor), and 3+3=6. The z-->1/z is an extra transformation, and it exchanges the upper and lower half planes.
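Here is a quick check of the half-plane claim, as a sketch. For real a, b, c, d one has Im((az+b)/(cz+d)) = (ad-bc) Im z/|cz+d|^2. So these maps preserve R, keep the upper half plane when ad-bc>0, and swap the half planes when ad-bc<0, as z-->1/z (a=0, b=1, c=1, d=0) does. The sample coefficients and points below are arbitrary.

```python
def mobius(a, b, c, d, z):
    """Apply z -> (a z + b) / (c z + d)."""
    return (a * z + b) / (c * z + d)


def predicted_imag(a, b, c, d, z):
    """(ad - bc) Im z / |cz + d|^2, valid when a, b, c, d are real."""
    return (a * d - b * c) * z.imag / abs(c * z + d) ** 2


samples = [(2.0, -1.0, 3.0, 0.5), (0.0, 1.0, 1.0, 0.0), (1.0, 4.0, -2.0, 1.5)]
points = [0.3 + 1.2j, -2.0 + 0.1j, 5.0 - 3.0j]
for a, b, c, d in samples:
    for z in points:
        w = mobius(a, b, c, d, z)
        assert abs(w.imag - predicted_imag(a, b, c, d, z)) < 1e-12
    # a real point goes to a real point
    assert mobius(a, b, c, d, complex(0.7, 0.0)).imag == 0.0
print(mobius(0, 1, 1, 0, 1j))  # z -> 1/z sends i (upper half plane) to -i (lower)
```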

Because of z-->1/z as in the last example, if your interest is mapping regions to regions (this often happens in complex analysis and its applications), then you must check that the appropriate interiors are mapped correctly. Now again, try to map the region between the imaginary axis and the circle |z+1|=1 (a circle with center at -1 and radius 1, tangent to the imaginary axis) to the interior of the unit circle. If we take z-->1/z, then the imaginary axis becomes the imaginary axis. The circle |z+1|=1, which passes through 0, becomes the line Re z=-1/2. The domain which is our region of interest is mapped to the strip between these lines (so -1/2<Re z<0), but officially we do need to check, at least minimally! For example, -3 is in the region, and 1/(-3)=-1/3 is in the strip.
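Here is a minimal numerical version of that check, as a sketch. It samples random points of the region (the sampling box and seed are arbitrary choices) and verifies that 1/z lands in the strip -1/2<Re w<0. It also checks that points of the circle |z+1|=1, other than 0, land on the line Re w=-1/2.

```python
import cmath
import math
import random


def in_region(z):
    """The region between the imaginary axis and the circle |z+1| = 1."""
    return z.real < 0 and abs(z + 1) > 1


random.seed(503)
points = []
while len(points) < 1000:
    z = complex(random.uniform(-5.0, 0.0), random.uniform(-5.0, 5.0))
    if in_region(z):
        points.append(z)

# every image 1/z should lie in the strip -1/2 < Re w < 0
inside_strip = all(-0.5 < (1 / z).real < 0 for z in points)

# points of the circle |z+1| = 1 other than 0 should land on the line Re w = -1/2
circle_images = [1 / (-1 + cmath.exp(2j * math.pi * k / 64)) for k in range(1, 64)]
max_gap = max(abs(w.real + 0.5) for w in circle_images)
print(inside_strip, max_gap)
```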

I played some simple linguistic games discussing mappings of CP^1.

Monday, November 1

As papers covering a table were moved recently in my home, a fortuitous discovery occurred: I found notes written for a previous (1994) instantiation of Math 503. I found only two objects related to Math 503: the notes for a lecture about projective space and the final exam. I tried to give a lecture today following the notes. Although I talked very fast, I could not come near finishing the lecture! I will try to scan the notes tomorrow and display them here, together with some commentary. It was fun to see what I thought was important a decade ago, and contrast this with my current prejudices (opinions?).

PDF of page 1 of my old notes

Discussed why one wants projective space. A reference is made to Bezout's Theorem, a classical result in algebraic geometry. Like many classical results in that area, Bezout's Theorem is more of an idea than just one theorem. Here are two sources for further information: one written by Professor Watkins, an economics faculty member at San José State, and one written by Aksel Sogstad of Oslo, Norway.

PDF of page 2 of my old notes

Here's the description of the projective space of a vector space. The idea is simple and useful.

PDF of page 3 of my old notes

This presents a way to get the topologies on the projective spaces when the field is R or C. The spheres "cover" the projective spaces. In the case of R, the "fiber" of the mapping is two points, +/-1. For C, the "fiber" (the inverse image of a point under this map) is a circle (corresponding to e^{i theta}).

PDF of page 4 of my old notes

We meet the homogeneous coordinates of a point and concentrate on CP^1. We find that C itself can be embedded (1-1 and continuously) into CP^1, and only one point, [1:0], is left out. We'll call this point infinity.

PDF of page 5 of my old notes

We learn that CP^1 is the one-point compactification of C. It is also called the Riemann sphere.

PDF of page 6 of my old notes

Stereographic projection is introduced, although the author does not mention the conformality of this mapping. Stereographic projection does help one to identify S^2 and CP^1, at least topologically. We also learn how to put the unit sphere and the upper half plane into the Riemann sphere. The Hopf map is mentioned. Here is a Mathworld entry on the Hopf map, including a "simple" algebraic description (formulas!). And here's another reference, even including formulas and pictures. There is even a reference to the Hopf map and quantum computing available! S^3 is not the product of S^2 and S^1 but some sort of twisted object (very analogous to groups, subgroups, and group extensions which are not direct products!).
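Here is a small numerical sketch of the Hopf map. It uses one standard formula, h(z1,z2)=(2 z1 conj(z2), |z1|^2-|z2|^2) in C x R = R^3; this is an assumption on conventions, since sources differ by rotations or reflections. On S^3 this formula depends only on [z1:z2], so it is the map S^3-->CP^1 followed by an identification of CP^1 with S^2. The check confirms that images land on S^2 and that a whole circle e^{i theta}(z1,z2) goes to a single point.

```python
import cmath
import math
import random


def hopf(z1, z2):
    """One standard formula for the Hopf map S^3 -> S^2.

    S^3 is {(z1, z2) in C^2 : |z1|^2 + |z2|^2 = 1}; the image is written in
    R^3 = C x R. Conventions differ between sources.
    """
    w = 2 * z1 * z2.conjugate()
    return (w.real, w.imag, abs(z1) ** 2 - abs(z2) ** 2)


def random_point_on_s3(rng):
    coords = [rng.gauss(0.0, 1.0) for _ in range(4)]
    norm = math.sqrt(sum(x * x for x in coords))
    x1, y1, x2, y2 = (x / norm for x in coords)
    return complex(x1, y1), complex(x2, y2)


rng = random.Random(1)
z1, z2 = random_point_on_s3(rng)
p = hopf(z1, z2)
print(math.sqrt(sum(x * x for x in p)))  # 1 up to rounding: the image lies on S^2

# the circle e^{i theta}(z1, z2) is a single fiber
phases = [cmath.exp(2j * math.pi * k / 8) for k in range(8)]
fiber = [hopf(u * z1, u * z2) for u in phases]
fiber_gap = max(max(abs(a - b) for a, b in zip(q, p)) for q in fiber)
print(fiber_gap)  # about 0
```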

PDF of page 7 of my old notes

The 2-by-2 nonsingular complex matrices, GL(2,C), permute the one-dimensional subspaces of C^2. Therefore each such matrix gives an automorphism of CP^1. It isn't too hard to show that this mapping is continuous. There are certain elements of GL(2,C) which give the identity on CP^1: the (non-zero) multiples of the identity matrix. The quotient group, GL(2,C)/{aI_2 : a not 0}, acts on CP^1 by linear fractional transformations. The group of such mappings will be called LF(C) here. It is also called the group of Möbius transformations.
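Here is a small sketch of why the matrix picture is convenient. Composing linear fractional transformations corresponds to multiplying their matrices, and a non-zero scalar multiple of a matrix gives the same map. The nested-tuple representation and the sample matrices are choices made only for illustration.

```python
def apply(M, z):
    """Apply the linear fractional transformation of M = ((a, b), (c, d)) to z."""
    (a, b), (c, d) = M
    return (a * z + b) / (c * z + d)


def matmul(A, B):
    """Product of 2-by-2 matrices stored as nested tuples."""
    (a, b), (c, d) = A
    (e, f), (g, h) = B
    return ((a * e + b * g, a * f + b * h), (c * e + d * g, c * f + d * h))


A = ((1, 2j), (3, 4))    # det = 4 - 6i, non-zero
B = ((2, -1), (1j, 5))   # det = 10 + i, non-zero
z = 0.3 + 0.7j

composed = apply(A, apply(B, z))
via_product = apply(matmul(A, B), z)
scaled = apply(((7 * A[0][0], 7 * A[0][1]), (7 * A[1][0], 7 * A[1][1])), z)
print(abs(composed - via_product), abs(scaled - apply(A, z)))  # both about 0
```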

PDF of page 8 of my old notes

Lots of technical words and phrases: group acting on a set; homogeneous action; orbit; stabilizer; transitive action. All of these things seem difficult for me to remember abstractly, so I like having explicit examples of them. Wow, the LF(C) and CP^1 setup gives many, many examples. For example, LF(C) acts triply transitively on CP^1: given two ordered triples of distinct points in CP^1, there is a unique mapping in LF(C) taking the first triple to the second.

PDF of page 9 of my old notes

A discussion of the proof of triple transitivity is given. This is really a neat fact, and it can be used computationally very effectively. In the proof, I remarked that the stabilizer of infinity in LF(C) is the group of affine maps z-->az+b, where a is any complex number except 0 and b is any complex number.
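The standard construction behind triple transitivity can be sketched as follows. This is the usual cross-ratio trick, not necessarily the argument on page 9. First send z1, z2, z3 to 0, 1, infinity by an explicit matrix, then compose with the inverse of the corresponding map for the target triple. For simplicity the sketch assumes all six points are finite, and the helper names are hypothetical.

```python
def apply(M, z):
    (a, b), (c, d) = M
    return (a * z + b) / (c * z + d)


def matmul(A, B):
    (a, b), (c, d) = A
    (e, f), (g, h) = B
    return ((a * e + b * g, a * f + b * h), (c * e + d * g, c * f + d * h))


def inverse(M):
    """Inverse up to a scalar factor, which is all that matters in LF(C)."""
    (a, b), (c, d) = M
    return ((d, -b), (-c, a))


def to_zero_one_infinity(z1, z2, z3):
    """Matrix of z -> (z - z1)(z2 - z3) / ((z - z3)(z2 - z1)),
    which sends distinct finite points z1, z2, z3 to 0, 1, infinity."""
    return ((z2 - z3, -z1 * (z2 - z3)), (z2 - z1, -z3 * (z2 - z1)))


def map_triple(source, target):
    """A matrix whose LF map sends source[k] to target[k] for k = 0, 1, 2."""
    return matmul(inverse(to_zero_one_infinity(*target)), to_zero_one_infinity(*source))


source = (0, 1, 2)
target = (1j, -1, 3 + 1j)
M = map_triple(source, target)
print([apply(M, s) for s in source])  # close to the target triple
```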

This is about where we stopped. There is more to follow! I hope to scan in all the pages tomorrow morning. Also, I have learned that the algebra exam has been postponed for one meeting, so I would like the complex analysis exam to be similarly postponed for one meeting.

Wednesday, October 27

I will give an exam on Monday, November 8. I will try to return homework that's been given to me on Monday, November 1. I hope to have a homework session on Thursday, a week from tomorrow. I also hope to have some problems that may be on the exam and give students copies, also.

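I verified that a pole has the following property: if f has a pole of order k at a, then for every small eps>0, the image under f of the deleted neighborhood of radius eps centered at a contains a neighborhood of infinity, and every point of a small enough neighborhood of infinity has exactly k preimages in that deleted neighborhood. Here a neighborhood of infinity is the complement of a compact subset of C. This is proved by looking at 1/f(z) for z near a: 1/f extends holomorphically to a, with a zero of order k there.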
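A concrete instance, chosen only for illustration (it is not from the notes): f(z) = (1+z)/z^2 has a pole of order k = 2 at a = 0, and f(z) = w is the quadratic w z^2 - z - 1 = 0, so for w not 0 there are exactly two preimages, given by the quadratic formula. For large |w| both preimages lie very close to 0, as the 2-to-1 statement predicts. The function name `preimages` is hypothetical.

```python
import cmath


def f(z):
    """Example with a pole of order k = 2 at a = 0."""
    return (1 + z) / z**2


def preimages(w):
    """Both solutions z of f(z) = w, i.e. of w*z**2 - z - 1 = 0, for w != 0."""
    root = cmath.sqrt(1 + 4 * w)
    return [(1 + root) / (2 * w), (1 - root) / (2 * w)]


for w in (1e6, -1e6j, 1e8 + 1e8j):
    zs = preimages(w)
    # two distinct preimages, both very close to the pole at 0
    print(w, [abs(z) for z in zs], [abs(f(z) - w) / abs(w) for z in zs])
```

For w = 10^6 both preimages have modulus about 10^-3, one on each side of 0.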

I sort of proved the Casorati-Weierstrass Theorem, as in the book. The proof is so charming and so wonderful, for such a ridiculously strong theorem. Here is a consequence of Casorati-Weierstrass: if f has an essential singularity at a, and if b is a complex number or is infinity, then there is a sequence {z_n} of complex numbers so that z_n-->a and f(z_n)-->b.
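Here is the consequence made explicit for e^{1/z} at a = 0, a standard example of an essential singularity (my choice of example, not from the notes). For b not 0, the numbers z_n = 1/(Log b + 2 pi i n) tend to 0 and satisfy e^{1/z_n} = b exactly, so this particular function even attains b; for b = 0 or b = infinity, z_n = -1/n or z_n = 1/n work. The function name `sequence_toward` and the use of `None` for infinity are assumptions of this sketch.

```python
import cmath


def sequence_toward(b, n):
    """n-th term (n >= 1) of a sequence z_n -> 0 with exp(1/z_n) -> b.

    b is a complex number, or None to stand for infinity.
    """
    if b is None:
        return 1 / n  # exp(1/z_n) = exp(n) -> infinity
    if b == 0:
        return -1 / n  # exp(1/z_n) = exp(-n) -> 0
    # 1/z_n = Log(b) + 2 pi i n, so exp(1/z_n) = b up to rounding
    return 1 / (cmath.log(b) + 2j * cmath.pi * n)


for n in (10, 100, 1000):
    z = sequence_toward(3 - 4j, n)
    print(abs(z), cmath.exp(1 / z))  # |z_n| shrinks while exp(1/z_n) stays near 3-4j
```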

These are wonderful results. We should go on to Chapter 2. Chapter 2 of N2 begins with the definition of a manifold. So I tried to define a manifold. Here we go:

Preliminary definition

A topological space X is an n-dimensional manifold if X is locally homeomorphic to R^n. So we go on: X is locally homeomorphic to R^n if for all x in X there are a neighborhood N_x of x in X, an open set U_x in R^n, and a homeomorphism F_x of N_x with U_x.

I call this preliminary because there are some tweaks (one dictionary entry for "tweak" is "to adjust; fine-tune") which we will need to make as we see examples. By the way, the triple (N_x, U_x, F_x) is called a coordinate chart for X at x.

Some examples

Suppose f:R^2-->R and X=f^{-1}(0). At least some of the time we will want X to be a 1-dimensional manifold.

Good: f(x,y)=x^2+y^2-1. Then X is a circle, and certainly little pieces of a circle look like ("are homeomorphic to") little pieces of R.

Bad: f(x,y)=xy. Then X=f^{-1}(0) is the union of the x and y axes. This does look like a 1-manifold near any point which is not the origin, but there's no neighborhood of 0 in X which looks like a piece of R. As Mr. Nguyen said, if a small neighborhood of 0 in X were homeomorphic to an interval in R, then the interval with the point corresponding to 0 deleted would be homeomorphic to that neighborhood with 0 taken out. So the number of components (a quantity defined purely in terms of open and closed sets, so homeomorphism preserves it) would be the same. But the punctured neighborhood in X has 4 components and the punctured interval has 2, and 4 is not the same as 2. So this X is not a 1-manifold.

Of course we could take more bizarre f's (hey: just f(x,y)=0 is one of them, and then X is all of R^2), but I am interested in C^1 f's, for which the Inverse (or Implicit) Function Theorem (IFT) gives a sufficient condition.

If f:R^n-->R is C^1 and every point in X=f^{-1}(0) is a regular point for f (that is, the gradient of f is never 0 on X), then X is an (n-1)-dimensional manifold. This result follows from the IFT.
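For the two examples above, the regularity hypothesis can be checked by hand; in both cases the origin is the only critical point:

```latex
\begin{aligned}
f(x,y) &= x^2 + y^2 - 1: & \nabla f &= (2x,\ 2y) = 0 \iff (x,y) = (0,0), & f(0,0) &= -1 \neq 0, \\
f(x,y) &= xy: & \nabla f &= (y,\ x) = 0 \iff (x,y) = (0,0), & f(0,0) &= 0 .
\end{aligned}
```

So every point of the circle is regular and the theorem applies. For xy the hypothesis fails exactly at the origin, the point where the component count showed X is not a 1-manifold; the failure of the hypothesis alone would not prove that, since the IFT only gives a sufficient condition.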

Changing the definition: first addition

Here is a picture of one example. Take R and remove, say, 0. Now the candidate X will be R\{0} together with * and #, which are two points not in R. I will specify the topology for X by giving a neighborhood basis for each point. If p is in R\{0}, then take as a basis all tiny intervals of R\{0} containing p. For *, take the sets (-eps,0)U(0,eps)U{*}, and for #, take the sets (-eps,0)U(0,eps)U{#}, for eps>0. Then this X is locally homeomorphic to R^1, but having two points where there should be just one (* and # instead of 0) seems wrong to most people: every neighborhood of * meets every neighborhood of #, so the two points cannot be separated. So additionally we will ask that X be Hausdorff.


Changing the definition: second addition

The long line is discussed in an appendix to Spivak's Comprehensive Introduction to Differential Geometry. The exposition there is very good. I'll call the long line L. This topological space is locally homeomorphic to R, but is very b-i-g. It is made using the first uncountable ordinal and glues together uncountably many intervals. L is a totally ordered set (think of it as going from left to right, but it goes on and on very far). L has the following irritating or wonderful property. If f is a continuous real-valued function on L, then there must be w in L so that f(x)=f(w) for all x>w. That is, eventually every continuous real-valued function on L becomes constant! This is sort of unbelievable if you don't know some logic. Please, at some time in your life, read about L. Well, most people do not like this property. They find it quite unreasonable: L is too big. So some control on the size of an n-manifold should be given. Here are some formulations giving an appropriate smallness criterion:

Real definition ...

A topological space X is an n-dimensional manifold if X is locally homeomorphic to R^n, is Hausdorff, and is appropriately small (say, is metrizable; for connected X this is equivalent to being second countable).

But in fact much more is possible. We can make C^k manifolds by requiring that overlap maps have certain properties.

Overlaps


Suppose p and q are points of X and the domains of coordinate charts at p and q overlap. That is, we can suppose that N_q and N_p have points in common. Let W be that intersection. Then W is a subset of X. The composition F_q o F_p^{-1} maps F_p(W), an open subset of R^n, to F_q(W), another open subset of R^n. Therefore classical advanced calculus applies to this overlap mapping.

We say that X is a C^k manifold (differentiable manifold) if all overlaps (among the charts of a chosen collection covering X) are C^k. Here k could be 0 (continuous, no different from what we are already doing), or some positive integer, or infinity, or omega. The last is what is conventionally written for real analytic.
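The circle from the "Good" example gives a small check of this definition. A minimal sketch, assuming the two charts are stereographic projections from the north pole (0,1) and the south pole (0,-1) (a standard choice, not the one in the notes); the function names are hypothetical. The overlap F_south o F_north^{-1} works out to u-->1/u on R\{0}, which is real analytic, so these two charts make the circle a C^omega manifold.

```python
def chart_north(x, y):
    """Stereographic projection from (0, 1); defined on the circle minus (0, 1)."""
    return x / (1 - y)


def chart_south(x, y):
    """Stereographic projection from (0, -1); defined on the circle minus (0, -1)."""
    return x / (1 + y)


def chart_north_inverse(u):
    """Inverse of chart_north: sends u in R back to a point of the unit circle."""
    return 2 * u / (u * u + 1), (u * u - 1) / (u * u + 1)


def overlap(u):
    """F_south o F_north^{-1}, defined on F_north(W) = R minus {0}."""
    return chart_south(*chart_north_inverse(u))


for u in [0.3, -2.0, 7.5]:
    print(u, overlap(u), 1 / u)  # the last two columns agree up to rounding
```

Algebraically, with x = 2u/(u^2+1) and 1+y = 2u^2/(u^2+1), the quotient x/(1+y) is exactly 1/u.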

If n is even, say n=2m, we could think of R^n as C^m, complex m-dimensional space, and we could require that the overlaps be holomorphic. Then X is called a complex analytic manifold. The example of interest to us is m=1. Then X is called a Riemann surface.

A mapping G:X-->Y between manifolds is called C^k or holomorphic or ... if all the compositions (chart of Y) o G o (inverse of a chart of X), wherever they are defined, are of the appropriate class.

Example 1 of a Riemann surface

An open subset of C is a Riemann surface. Here there is one coordinate chart and the mapping is just z-->z. So this doesn't seem very profound.

Example 2 of a Riemann surface

Here things will be a bit more complicated. We start with two open subsets of C, U and V, as shown. I draw them on different (?) copies of C for reasons that will become clear. However, U and V share some points, shown as W. W is one component of the intersection of U and V: it is not all of U intersect V. Now I will define X. We begin with a set, S, which is (U×{*}) union (V×{#}). This is just a definite way to write the disjoint union of U and V. Now I will define an equivalence relation on S. Of course the equivalence relation includes the "diagonal" (everything is equivalent to itself). The only additional equivalences are: (a,*)~(b,#) if a=b and a (=b) is in W. X will be S/~: I am "gluing together" U and V, in a very crude way, along W. I claim that X is a Riemann surface. First, X has a topology whose neighborhood bases come exactly from the open sets of U and V (on the boundary of W, I will take the little discs that are half in U (or V) and half in W). There are obvious coordinate charts (just z again!). X is Hausdorff if you think about it. And X is metrizable (it is even more clearly separable). I would put a metric on it locally by looking at the usual distance in C, but I would make the distance between points on the "other" component of U intersect V go "around" 0. Then this is a Riemann surface.


On the Riemann surface X, z is a holomorphic function. In fact, you could imagine, just imagine, that we put X into R^3 homeomorphically as shown. Then z could be thought of as some sort of projection map, pi, which takes (x,y,w) in R^3 to x+iy in C. And the image of X would be a sort of square annulus in C; call it Y. Why would one want to look at creatures like X? Well, on both X and Y, z is a holomorphic function. On Y, z has no holomorphic square root. We can't quite prove that yet (the homework assignment had an inside hole of diameter 0), but we will be able to, soon. But what is very amazing is that z does have a holomorphic square root on X. I will even write a formula for it next time. Well, here's a formula:


Monday, October 25

Laurent series exist and are unique

I dutifully copied from N2. We saw that if f is holomorphic in A(a,r,R), then

$$f(z)=\sum_{n=-\infty}^{\infty} c_n (z-a)^n, \qquad c_n=\frac{1}{2\pi i}\oint_{|z-a|=\rho} f(z)\,(z-a)^{-n-1}\,dz,$$

for any radius $\rho$ with $r<\rho<R$. We saw that the series converged absolutely in the annulus, and uniformly on any compact subset of the annulus.


Examples (?)

We looked at 1/((z^2+1)(z-2)) with center at -2i. We saw there were four possible Laurent series, all valid in different annuli: the singularities -i, 2, and i are at distances 1, 2√2, and 3 from -2i, so the regions are |z+2i|<1, 1<|z+2i|<2√2, 2√2<|z+2i|<3, and |z+2i|>3. I tried to show how to get these series (use partial fractions, then manipulate the results with the geometric series). I then asked what we could do with e^z/((z^2+1)(z-2)) and explained why these series would be difficult to get.
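The contour formula for c_n can also be evaluated numerically for this example. A minimal sketch, assuming the trapezoidal rule on equally spaced points of the circle (a standard quadrature, not the method used in class, which was partial fractions); `laurent_coefficient` is a hypothetical name. With z = a + rho e^{i theta}, the formula becomes c_n = (1/2pi) times the integral over [0, 2pi] of f(z)(z-a)^{-n} d theta. Circles in the same annulus give the same coefficients, circles in different annuli give different ones, and for 1<rho<2√2 the coefficient c_{-1} is the residue of f at -i, namely 1/(-2+4i) = -0.1-0.2i.

```python
import cmath


def laurent_coefficient(f, a, rho, n, num_points=256):
    """Approximate c_n = (1/(2 pi i)) * integral of f(z) (z-a)^(-n-1) dz over |z-a| = rho.

    With z = a + rho*e^{i theta}, dz = i (z - a) d theta, so
    c_n = (1/(2 pi)) * integral over [0, 2 pi] of f(z) (z-a)^(-n) d theta,
    approximated here by the trapezoidal rule on equally spaced angles.
    """
    total = 0j
    for j in range(num_points):
        w = rho * cmath.exp(2j * cmath.pi * j / num_points)  # w = z - a on the circle
        total += f(a + w) * w ** (-n)
    return total / num_points


def f(z):
    return 1 / ((z * z + 1) * (z - 2))


center = -2j
# 1 < 1.5 < 2*sqrt(2): this circle lies in the second of the four annuli
print(laurent_coefficient(f, center, 1.5, -1))  # approximately (-0.1-0.2j)
```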

Isolated singularities

I tried to present the most famous results about isolated singularities. f has an isolated singularity at a if f is holomorphic in A(a,0,r) for some r>0. A first rough classification of f's behavior at a is the following: let N=inf{n : c_n is not 0}, where the c_n are the coefficients of the Laurent series of f in A(a,0,r). I will assume that f is not the zero function here. Then there are three cases.

N>=0. In the annulus A(a,0,r), f is equal to the sum of a power series, and hence extends holomorphically to the filled-in annulus, D(a,r). This is a removable singularity. (We actually saw you could do a bit more than this: the singularity is still removable if, say, |f(z)| < Const·|z-a|^{-1/2}. Or what if f were locally L^2?)

N<0 but finite. Then (rewriting the Laurent series) f(z)=(z-a)^N g(z), where g(z) is the sum of a convergent power series in D(a,r) with g(a) not equal to 0. This is a pole: the limit of |f(z)| is infinity as z-->a. (Even more is true, but not yet proved here: a meromorphic function can always be written as a quotient of two holomorphic functions.)

N=-infinity. This is called an essential singularity. (Even more is true, but will not be proved here: a result of Picard states that the image under f of any deleted neighborhood of a is all of C except for at most one complex number!)
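Standard examples of the three cases at a = 0 (these particular functions are my illustrations, not taken from the notes):

```latex
\begin{aligned}
\frac{\sin z}{z} &= \sum_{k=0}^{\infty} \frac{(-1)^k}{(2k+1)!}\, z^{2k}, & N &= 0 \quad\text{(removable)}, \\
\frac{1+z}{z^2} &= z^{-2} + z^{-1}, & N &= -2 \quad\text{(pole of order 2)}, \\
e^{1/z} &= \sum_{k=0}^{\infty} \frac{1}{k!}\, z^{-k}, & N &= -\infty \quad\text{(essential)}.
\end{aligned}
```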

Wednesday, October 20

I will try to give an exam after we finish Chapter 1 (Laurent series, local theory of isolated singularities, etc.).

There is one further "general" result in the theory of convergence of holomorphic functions which I will mention at this time, and that's a result (or several results!) attributed to Hurwitz.

Weierstrass Montel Vitali Osgood now Hurwitz