Diary for Newer Math, fall 2003

| Date | What happened (outline) | ||||||||||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Thursday, December 11 |

Mr. Burton discussed Euclid's algorithm and the binary algorithm for the GCD. Mr. Wilson added further information, and related the Fibonacci numbers to the GCD computation. Mr. Jaslar discussed the solution of the VjN problem and related matters. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, December 8 |

Mr. Tully discussed randomness,

giving some of the history and comparing various "definitions" of

random. Mr. Siegal discussed various aspects of coding theory, and computed certain numbers expressing the efficiency of a code for repetition codes, parity codes, two Hamming binary codes (the (7,4) and (8,4) codes), and a Hamming (4,2) ternary code. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Thursday, December 4 |

Ms. Jesiolowski discussed the history and some of the mathematics of the Four Color Theorem. She included some information on the controversy about computer-aided proofs. An invited guest, Professor Zeilberger, who has strong views about these matters, generously joined us for half the period. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, December 1 |

Mr. Palumbo discussed P vs. NP, NP-completeness, satisfiability of Boolean circuits as an NP-complete problem, and minesweeper (!) as another example of an NP-complete problem. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Tuesday=Thursday, November 25 |

The lecturer's concluding remarks about the course. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, November 24 |

Mr. Ryslik discussed the knapsack encryption system. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, November 17 |

I urged students to work on their presentations and papers.

I briefly discussed Bell Labs, where much of what I will speak about in the next few meetings originated. I talked a bit about Shannon, whose paper A Mathematical Theory of Communication, published in two parts in the Bell System Technical Journal, founded the fields which are today called information theory and coding theory. In the paper is a citation of a coding method of Richard Hamming, who was concerned about error-correction in the early years of digital computers. I hope to discuss that specific method on Thursday. By the way, here is a transcript of a wonderful talk Hamming gave on "You and Your Research". He was quite outspoken. And here is Hamming's original article about error detecting and error correcting codes. Coding theory deals with the reliable, economical storage and transmission of information. Under "storage" are such topics as compression of files. Problems concerned with "transmission" include how to ensure that the receiver gets the message the sender transmits down a noisy channel: a channel where the bits may flip. I mentioned ISBN numbers (on each book) as an example of an error-detection scheme. We discussed repeating bits as a way of protecting the message. We got started on inequalities which must be satisfied for accurate transmission of messages with error-correction built in. I'll do more Thursday. There are many links to error correcting codes on the web. Here's a short one and here's a whole book! | ||||||||||||||||||||||||||||||||||||||||||||||||||||
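The ISBN example mentioned above can be made concrete. This is a minimal sketch, assuming the 10-digit ISBN format (weights 10 down to 1, sum divisible by 11, with X standing for 10 in the last place); the function name is made up for illustration and is not from the lecture.

```python
def isbn10_is_valid(isbn: str) -> bool:
    """Check a 10-digit ISBN using its weighted checksum mod 11."""
    chars = [c for c in isbn if c not in "- "]
    if len(chars) != 10:
        return False
    total = 0
    for position, c in enumerate(chars):
        if c in "Xx" and position == 9:
            value = 10          # X is allowed only as the final check symbol
        elif c.isdigit():
            value = int(c)
        else:
            return False
        total += (10 - position) * value  # weights 10, 9, ..., 1
    return total % 11 == 0


print(isbn10_is_valid("0-306-40615-2"))  # a commonly quoted valid example
print(isbn10_is_valid("0-306-40615-3"))  # one digit changed
print(isbn10_is_valid("0-306-40651-2"))  # two adjacent digits swapped
```

The scheme detects these errors but cannot say which digit is wrong, so it is error-detecting rather than error-correcting.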

| Thursday, November 13 |

Professor Kahn spoke about correlation inequalities. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, November 10 |

I announced that another faculty member from the Mathematics

Department would come and speak. He is Professor Jeff Kahn, and he

will be here at the next class. Perhaps Professor Kahn's best known work is on the Borsuk Conjecture, which states that every subset of R^d (d-dimensional space) which has finite diameter can always be partitioned into (d+1) sets with smaller diameter. The conjecture was made in 1933, and sixty years later Professor Kahn helped to show that it was false in high dimensions, in fact, spectacularly false: when d is large, one may need at least (1.1)^sqrt(d) pieces. Specific examples were provided for d=9162, showing that we don't know much about high dimensions. The lowest dimension currently known for a counterexample is 321, work which was done in 2002. Borsuk's Conjecture and related issues turn out to be rather important in many applications. Professor Kahn wants to discuss some more current work, and asked me to present several topics to you.

Bipartite graphs A graph is bipartite if its vertices can be divided into two groups, V1 and V2, so that no edge joins two vertices in the same group. One nonexample is K3 which is not bipartite, because if we divide the 3 vertices into two groups, one will have two vertices and there will be an edge between them. An example of a bipartite graph is K3,3 which consists of 3 homes (Fred, Jane, Alice) and 3 utility stations (water, gas, electric) and the edges connect each of the utility stations to each of the homes. Mr. Burton successfully identified a way to recognize bipartite graphs. Consider a cycle: that is, a path in a graph which returns to itself. A graph will be bipartite if it has no cycle of odd length. In fact, this allows us to "construct" the bipartition: pick one node. Make V1 the collection of nodes which can be connected to the initially picked node by a path with an even number of edges, and V2 those which can be connected with an odd number of edges (for simplicity, we are assuming that all nodes can be connected to each other, or else we apply this to the separate pieces of the graph). Then the absence of odd cycles leads to recognizing that this makes the graph bipartite. I also took the chance to quote a theorem of Kuratowski from the 1930's, that a graph can be drawn in the plane with no edges crossing ("a planar graph") exactly when neither K5 nor K3,3 can be realized as a subgraph. (There are some technicalities I am omitting in this, but it is essentially correct.)
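The even/odd path construction above can be sketched with a breadth-first search. This is only an illustration, assuming a connected graph stored as a dictionary of neighbor lists; the function name and the data layout are assumptions, not something used in class.

```python
from collections import deque


def bipartition(adjacency):
    """Return (V1, V2) for a connected bipartite graph, or None if an odd cycle exists."""
    start = next(iter(adjacency))   # the initially picked node
    parity = {start: 0}             # 0 = even distance from start, 1 = odd
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in parity:
                parity[neighbor] = 1 - parity[node]
                queue.append(neighbor)
            elif parity[neighbor] == parity[node]:
                return None         # an edge inside one group forces an odd cycle
    v1 = {n for n, p in parity.items() if p == 0}
    v2 = {n for n, p in parity.items() if p == 1}
    return v1, v2


# K3: the triangle, which is not bipartite.
k3 = {1: [2, 3], 2: [1, 3], 3: [1, 2]}

# K3,3: every home is joined to every utility station.
homes = ["Fred", "Jane", "Alice"]
utilities = ["water", "gas", "electric"]
k33 = {h: list(utilities) for h in homes}
k33.update({u: list(homes) for u in utilities})

print(bipartition(k3))
print(bipartition(k33))
```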

Conditional Probability The most interesting simple result about conditional probability is called Bayes' Theorem (dating back to 1763, apparently!). One version of Bayes' Theorem is the following: p(A|B)=[p(B|A)p(A)]/[p(B|A)p(A)+p(B|A^c)p(A^c)]. Here A^c is the complementary event (everything outside of A). We analyze the right-hand side of Bayes' Theorem first. The bottom, p(B|A)p(A)+p(B|A^c)p(A^c), is, by definition of conditional probability, p(B and A)+p(B and A^c), and since A and A^c together contain all outcomes and have none in common, this is p(B). The top, p(B|A)p(A), is [p(B and A)/p(A)]·p(A) which is p(B and A). So the resulting quotient is p(B and A)/p(B) which is what the left-hand side of Bayes' Theorem states. I gave some silly application of Bayes' Theorem (male/female and smoker/non-smoker facts). Bayes' Theorem and conditional probability are basic to much of modern statistics. There are many references on the web to this material. | ||||||||||||||||||||||||||||||||||||||||||||||||||||
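Here is a small numerical illustration of the formula above. The numbers are invented for the sketch (they are not the male/female and smoker/non-smoker facts used in class): A is "male", B is "smoker", and the three input probabilities are assumptions.

```python
from fractions import Fraction


def bayes(p_b_given_a, p_a, p_b_given_not_a):
    """Return p(A|B) from p(B|A), p(A) and p(B|A^c)."""
    p_not_a = 1 - p_a
    top = p_b_given_a * p_a
    bottom = top + p_b_given_not_a * p_not_a   # this is p(B)
    return top / bottom


# Hypothetical data: half the population is male,
# 30% of males smoke and 20% of females smoke.
p_male = Fraction(1, 2)
p_smoker_given_male = Fraction(3, 10)
p_smoker_given_female = Fraction(1, 5)

p_male_given_smoker = bayes(p_smoker_given_male, p_male, p_smoker_given_female)
print(p_male_given_smoker)  # 3/5
```

With these made-up numbers a randomly chosen smoker is male with probability 3/5, even though only half the population is male.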

| Thursday, November 6 |

Professor Jozsef Beck spoke about "games" with complete information, with many games having Ramsey theory as a framework. He spoke about avoidance games and achievement games, and maker/breaker games. He stressed the idea that there could exist winning strategies without any description of them! | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, November 3 |

I discussed the probabilistic method which allows certain underestimates of Ramsey numbers. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Thursday, October 30 |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, October 27 |

We discussed graphs and found some real-world graphs. We discussed coloring graphs, and proved some facts about coloring complete graphs. Much of this is in the Governor's School notes, but not all of it (come to class!). We will continue the discussion on Thursday. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Thursday, October 23 |

I began consideration of the idea that large enough samples will

always have some small-scale order. This is done in the Governor's

School notes on the pages on and after page 57. In particular, I

discussed the pigeonhole principle. I remarked that its results

can sometimes be difficult to accept: they can be non-constructive, so

no solution is exhibited. One just gets knowledge that a solution

exists.

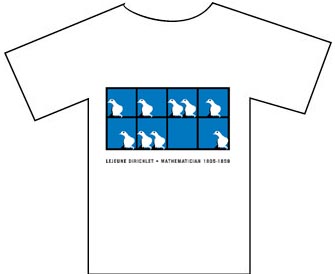

After what looked like merely amusement (see the notes referenced above) I proved a theorem (although as usual I fouled it up a bit and Mr. Jaslar helped me). The illustrated t-shirt is from the Australian Mathematics Trust, and it shows pigeons in pigeonholes, and the lettering (not too readable here) names Dirichlet, who first really identified and used the pigeonhole principle. The same source states:

Here is a further discussion of problems relating to the pigeonhole principle, including a clear proof of the "approximation to irrationals" statement which I tried to state and verify. Please look at this page. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, October 20 |

We discussed the topics. I then

discussed birthdays and tried to contrast probabilistic reasoning with

the certainty available in large enough samples.

I then began discussing parties, and Mr. Burton tried to 2-color the edges of the complete graph on 5 vertices so that there was no monochromatic triangle. Ms. Jesiolowski found a nice solution. We will return to these problems on Monday. | ||||||||||||||||||||||||||||||||||||||||||||||||||||
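A coloring of this kind can be checked by machine. The sketch below uses the standard "pentagon" coloring of the complete graph on 5 vertices (sides one color, diagonals the other); this is an assumption chosen for illustration and is not necessarily the solution found in class.

```python
from itertools import combinations


def has_monochromatic_triangle(n, color):
    """Check every triple of the n vertices for three edges of the same color."""
    for a, b, c in combinations(range(n), 3):
        if color(a, b) == color(b, c) == color(a, c):
            return True
    return False


def pentagon_coloring(a, b):
    """Sides of the pentagon 0-1-2-3-4-0 are red, the five diagonals are blue."""
    return "red" if (a - b) % 5 in (1, 4) else "blue"


print(has_monochromatic_triangle(5, pentagon_coloring))  # False
```

Both the red edges and the blue edges form a 5-cycle, and a 5-cycle contains no triangle, which is why the check fails to find one.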

| Thursday, October 16 |

I finished the discussion of the Broadcasting Model, including one

proof in detail using mathematical induction.

Professor Paul Leath of the Physics Department discussed percolation and the ingenious experiment documented in his paper, Conductivity in the two-dimensional-site percolation problem, in Phys. Rev. B 9, 4893-4896 (1974). (The link works on browsers from Rutgers-sponsored computers.) Monday we will discuss the topics for papers. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, October 13 |

I began the discussion of the Broadcasting Model: pp.48-50 of the notes. This included simulation of the binary tree network, and analysis of what the limiting probabilities could be, if they were shown to exist. | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Thursday, October 9 |

Discussion of the transmission model (see pages 46 and 47 of the notes).

Discussion of the binomial theorem. "Proof" of the fundamental relationship among binomial coefficients (Pascal's Triangle) using the idea, following a suggestion of Mr. Jaslar, of looking at n+1 elements, distinguishing a special element, and then looking for k element subsets which do and do not have the special element (so the (n+1, k) binomial coefficient will be the sum of the (n, k) and (n, k-1) binomial coefficients). | ||||||||||||||||||||||||||||||||||||||||||||||||||||
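The counting argument above can be checked directly for small cases. This is a sketch only: it lists all k element subsets of n+1 elements, separates them by whether they contain the special element, and compares the two counts with binomial coefficients. The function name is made up for illustration.

```python
from itertools import combinations
from math import comb


def split_subsets(n, k):
    """Count k-element subsets of {0, ..., n} with and without the special element n."""
    special = n
    with_special = 0
    without_special = 0
    for subset in combinations(range(n + 1), k):
        if special in subset:
            with_special += 1       # the other k-1 elements come from the remaining n
        else:
            without_special += 1    # all k elements come from the remaining n
    return with_special, without_special


with_special, without_special = split_subsets(5, 3)
print(with_special, without_special, comb(6, 3))  # 10 10 20
```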

| Monday, October 6 |

I discussed expectation. I essentially discussed pages 41-45 of these

notes.

We discussed (as in the reference above) the coin flipping protocol apparently due to John von Neumann. I requested comment on a converse problem, which I named NvJ as a joke. In the NvJ problem it seems easy to get a protocol which works if the probability p is rational, a quotient of integers. So it seemed appropriate to give Euclid's other well-known proof, that sqrt(2) is irrational. I then remarked that a similar proof would show that sqrt(3) is irrational, and asked what would go wrong if we wanted to prove that sqrt(4) was irrational using the same technique! | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Thursday, October 2 |

I explained that the list of topics would be steadily updated and

augmented.

I tried to discuss problems with the mathematical model called probability. I reviewed what we did Monday, and then discussed three examples in detail, also along the way "reviewing" certain mathematical results. One further definition: Two events E and F are independent if p(an outcome in both E and F occurs)=p(E) p(F): the probabilities of the intersection multiply. Of course, one can't argue with definitions, but in this case we are supposed to see that somehow two separate "games" are being run, and the outcome in one doesn't influence the outcome in the other. For example, pick a card from a standard deck. The events E={the card is an ace} and F={the card is a spade} are independent. If G={the card is the club ace} then neither E and G nor F and G are independent.
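The card example can be verified by counting. A minimal sketch, assuming all 52 cards are equally likely; the helper names are invented for illustration.

```python
from fractions import Fraction
from itertools import product

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["spades", "hearts", "diamonds", "clubs"]
deck = list(product(ranks, suits))  # 52 (rank, suit) pairs


def prob(event):
    """Probability of an event, given as a true/false test on a card."""
    return Fraction(sum(1 for card in deck if event(card)), len(deck))


def independent(e, f):
    """Check the definition: p(E and F) = p(E) p(F)."""
    return prob(lambda card: e(card) and f(card)) == prob(e) * prob(f)


def is_ace(card):
    return card[0] == "A"


def is_spade(card):
    return card[1] == "spades"


def is_club_ace(card):
    return card == ("A", "clubs")


print(independent(is_ace, is_spade))       # True
print(independent(is_ace, is_club_ace))    # False
print(independent(is_spade, is_club_ace))  # False
```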

Example 1 p(Empty Set)=0; p({H})=1/2; p({T})=1/2;p({H,T})=1. It isn't too hard to see that this assignment of probabilities satisfies all the requirements of the rules. In fact, we really won't run into conceptual difficulties with any finite sample space, S. I do admit that one can have computational difficulties (for example, offhand I don't know the probability that a 5-card poker hand is a straight!). But no "philosophical" problems seem to occur.

Example 2 {T, HT, HHT, HHHT, ..., H^nT, ..., H^infinity} Here we need to do some explaining. Each element of the sample space represents a sequence of tosses, read from left to right. For example, HHHT represents the outcome of three heads followed by a concluding tail. The notation H^nT, with n here a non-negative integer (so n is 0 or 1 or 2 or ...), represents n heads followed by a concluding tail. I'll use H^infinity to represent the outcome of a sequence of H's, tossed endlessly. (Please see the first scene in Rosencrantz and Guildenstern are dead by Tom Stoppard to put this game in a proper dramatic setting.) What should the probabilities for each outcome be? If the coin is fair, then p({T})=1/2. If the tosses are independent, then this suggests that p({HT})=1/4, p({HHT})=1/8, etc., and p({H^nT})=1/2^(n+1) since we are in effect prescribing n+1 independent fair flips. Now we need a fact about geometric series. Recall, please: if |r|<1, then a+ar+ar^2+ar^3+...=a/(1-r).

Now back to the principal discussion. Consider the event which is all of the H^nT tosses. What should its probability be? It should be the sum of the geometric series with a=1/2 and r=1/2, and this is (1/2)/(1-1/2)=1. But then since the probability of the whole sample space must be 1, the probability of H^infinity has got to be 0. Or, another way, for those who know and like limits, this is a prescribed infinite sequence of independent fair flips, so its probability is the limit of 1/2^n as n-->infinity, which is 0. In any case we seem to have a possible or a conceivable event (namely, {H^infinity}) which has probability 0. What does this mean? I guess it means it almost never happens, or that the other outcomes almost always happen? The phrase "almost always" is used in probability theory for an event which has probability 1, and this is an example of such an event. This is part of the phenomenon of infinity, and things can get even worse.

Example 3 The first "random" number was something like 1/17. Well, golly: the event of picking 1/17 (just call it {1/17}) is certainly inside the event of picking a number between 1/17-1/100 and 1/17+1/100. But the latter event is an interval with length 2/100=1/50. So p({1/17})<=1/50. In fact, we can show that p({1/17}) is <= any positive number. The only value we can assign is that p({1/17})=0. So here is another event of probability 0 which can actually happen (!?): or can it? In fact, the probability of picking any one single number uniformly at random from [0,1] is 0. I then examined the next three or four "random" numbers contributed by students. It seemed that the numbers picked were all rational: quotients of integers. This exhibited another phenomenon, a phenomenal result which is attributed to Cantor in 1873.

The equal "size" or cardinality (to give it the technical name) of these sets is not at all supposed to be obvious, and in fact Cantor's demonstration of this fact and other analogous results were very startling to the mathematical community of his day. So now we know that there is some kind of correspondence: if n is a positive integer, then there can be a rational q_n corresponding to n. The correspondence can be described in such a way that the collection of all of the q_n's is all of the rationals in [0,1]. I will now try to convince you that the event W which is described by "picking a rational number uniformly at random from [0,1]" has small probability. Look at q_n. Imagine this is inside the interval with endpoints q_n-1/100^n and q_n+1/100^n. Now the length of this interval is 2/100^n. The event W is contained in the union of these intervals, so that the probability is at most the sum of the probabilities of the outcome being in each interval. But that's the sum of 2/100^n with n=1, 2, 3, ... and this is again a geometric series, with a=2/100 and r=1/100. Then the sum is a/(1-r) which is (2/100)/(99/100)=2/99, fairly small. Therefore p(W)<=2/99. You can see that by adjusting the length of the including intervals to be even smaller, you can make the probability of W as small as you want. The only value p(W) can have is 0. Wow. Please note that I applied this logic to W just to show you that the probability of picking a rational number uniformly at random from the unit interval is 0. In fact, much more broadly I can assert (with the same supporting logic) the probability of picking one element of any specific sequence of numbers from [0,1] is 0. This is wonderful and strange. Please be on your guard and try to understand what's happening when we apply probability theory, a mathematical model, to situations which have infinitely many alternatives.
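The bound on p(W) can be checked with exact fractions. This sketch adds up the lengths 2/100^n of the first several covering intervals and compares with the geometric series value a/(1-r); the function name and the `base` parameter (for trying smaller intervals) are assumptions made for illustration.

```python
from fractions import Fraction


def cover_length(num_intervals, base=100):
    """Total length of the first num_intervals covering intervals, each of length 2/base^n."""
    return sum(Fraction(2, base**n) for n in range(1, num_intervals + 1))


# Geometric series with a = 2/100 and r = 1/100: a / (1 - r).
limit = Fraction(2, 100) / (1 - Fraction(1, 100))

print(limit)                        # 2/99
print(cover_length(10) < limit)     # partial sums stay below the limit
print(cover_length(10, base=1000))  # shrinking the intervals shrinks the bound
```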

Notes | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, September 29 |

I gave out a list of topics students

could do presentations/papers about.

I completed an analysis of the Russian peasant/repeated squaring method of computing A^B mod C. Write B in binary form. The 0 and 1 numerals will take up #_2(B) digits. Now repeatedly square A mod C, #_2(B) times. And "assemble" A^B mod C by multiplying together the squares which correspond to the 1's in the binary form of B -- this will also need at most #_2(B) multiplications. Since the largest any of these numbers can be is C, the work for each multiplication will be at most 6(#_10(C))^2. We also know that #_2(B)<=4#_10(B). Therefore we need at most 8#_10(B) multiplications and each of those needs 6(#_10(C))^2 operations. So this is a polynomial algorithm (degree 3). There are ways to speed up multiplication, and there are also even better ways under some circumstances than repeated squaring to do exponentiation. This is interesting in the "real world" because many crypto protocols use exponentiation of big numbers with big exponents.

I discussed the history of probability beginning with some gambling games about 300 years ago. The current codification of probability "axioms" was done by Kolmogorov in the 1930's, so probability as an abstract mathematical field is relatively recent. Today was primarily devoted to explaining vocabulary words, with some useful simple examples.
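Going back to the repeated squaring method for A^B mod C described above, here is a minimal sketch. It reads the binary digits of B from the right, squares A mod C at each step, and multiplies in the squares that correspond to 1 digits. The function name is invented; Python's built-in three-argument `pow` does the same job and is used here only as a check.

```python
def power_mod(a, b, c):
    """Compute a**b mod c by repeated squaring, scanning the bits of b."""
    result = 1 % c
    square = a % c                  # holds a^(2^i) mod c at step i
    while b > 0:
        if b & 1:                   # this binary digit of b is 1
            result = (result * square) % c
        square = (square * square) % c
        b >>= 1                     # move to the next binary digit
    return result


print(power_mod(2, 10, 1000))                            # 24, since 2^10 = 1024
print(power_mod(3, 200, 1000003) == pow(3, 200, 1000003))
```

The loop runs once per binary digit of B, which matches the count of at most 2·#_2(B) multiplications in the analysis.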

We start with a sample space of outcomes, and assign a number in [0,1] to events, collections of outcomes. If E is an event, this number is called the probability, p(E), of the event. Then the empty event, with no outcomes, has probability 0. The event of all outcomes, the whole sample space, has probability 1. Also, if A and B are disjoint events (sometimes called exclusive), with no outcomes in common, we require that the probability of an outcome being either in A or in B is the sum of the probability of A and the probability of B. In math language, if A intersect B = the empty set, then p(A union B)=p(A)+p(B). I drew some pictures (close to Venn diagrams, I guess). I also deduced that if A is a subset of B (so every outcome in A occurs in B) then the probability of A is less than or equal to the probability of B. I remarked that when the events A and B are not disjoint, then p(A union B) is less than or equal to p(A)+p(B). The "less than" part occurs because we have no information about p(A intersect B). More accurate equations can be written using what is called the "inclusion-exclusion principle" but we likely will not need them.

We also analyzed John von Neumann's problem: given an unfair coin, simulate a fair one. That is, you are given a coin whose probability of heads is p where 0<p<1 and tails is q=1-p. By tossing this coin can you call out H and T in a fair way? We discussed the merits and possible defects of von Neumann's solution (there are other solutions). I did not make a formal definition of independence which is needed here. I will return to JvN.

Here is a whole book (over 500 pages long!), quite good, on probability, available for free on the web. We won't need much from this book, though. Here is a brief more informal introduction to probability, more in the spirit of the lectures. | ||||||||||||||||||||||||||||||||||||||||||||||||||||
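For the unfair coin problem above, here is a sketch of the solution usually credited to von Neumann, stated as an assumption since the diary does not spell it out: toss the coin twice, call H for the pattern HT, call T for TH, and start over for HH or TT. Independence of the tosses gives both HT and TH probability pq. The coin with p=0.8 and the seed are made up for the simulation.

```python
import random


def fair_flip(biased_flip):
    """Turn a biased coin into a fair one: toss twice, keep the first toss if the two differ."""
    while True:
        first, second = biased_flip(), biased_flip()
        if first != second:
            return first            # HT gives H, TH gives T, each with probability p*q


rng = random.Random(2003)           # fixed seed so the run is repeatable


def biased_coin(p=0.8):
    """A hypothetical unfair coin: heads with probability p."""
    return "H" if rng.random() < p else "T"


flips = [fair_flip(biased_coin) for _ in range(10_000)]
heads_fraction = flips.count("H") / len(flips)
print(heads_fraction)               # should be close to 0.5
```

One possible defect is visible in the loop: when p is close to 0 or 1, most pairs are HH or TT and many tosses are thrown away.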

| Thursday, September 25 |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, September 22 |

I decided to try to analyze what easy and hard mean when

these words are applied to computational tasks. I

wanted my definition to be valid no matter how numbers were

represented. So we discussed representing numbers in the binary

system, instead of the decimal system. (I wanted to go for Roman

numerals, but people told me a base system was better.) I counted as 5

volunteers performed the binary dance (they stood up and sat

down corresponding to the binary values of the counts).

Then I asked: if a number in standard decimal form is 5 feet long when written, estimate how long the base 2 (binary) representation will be. This was more difficult. We estimated that a decimal integer with a 5 foot long representation would have 600 digits, so it would be about 10^600. About how many binary digits (bits) would such a number need? We discussed this. Since 2^3<10<2^4, by taking 600th powers, we know that the number will be between 2^1800 and 2^2400: if we assume the digits are similarly spaced, that means the binary representation will take between 15 and 20 feet. Thus big numbers in one base will also be big numbers in any other base.

We then analyzed the complexity of some elementary operations of arithmetic: addition and multiplication. Please see pages 20 and 21 of the Gov's School notes. These operations depend on the length of the inputs. So I defined #(n) to be the number of decimal digits of n. Thus #(3076) is 4. If both A and B have #(A)=#(B)=k, then A+B can be computed in 2k unit operations (1 digit addition) and A·B can be computed in 6k^2 operations. These are both polynomial time algorithms. An algorithm (defined in the notes) is polynomial time if it takes at most a polynomial number of "operations" in terms of the size of the input to compute its answer. Addition and multiplication are examples. Multiplication seems to be harder because the standard algorithm is quadratic in terms of the input length, while addition is first degree or linear.

A computational problem is easy if it has a polynomial time algorithm. A computational problem is hard if it does not have a polynomial time algorithm. We decided that if an arithmetic algorithm was polynomial time in one base, then it had to be polynomial time in any base. Write #_10(n) and #_2(n) for the number of decimal and binary digits of n. Then (roughly) 3#_10(n)<#_2(n)<4#_10(n) using the logic above, so polynomial time in #_10(n) leads to polynomial estimates using #_2(n).
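The 600 digit estimate is easy to test by machine. A small sketch, using Python's arbitrary-size integers; the two helper names are invented for illustration.

```python
def decimal_digits(n):
    """#(n): the number of decimal digits of a positive integer n."""
    return len(str(n))


def binary_digits(n):
    """The number of binary digits (bits) of a positive integer n."""
    return n.bit_length()


n = 10**599                  # the smallest integer with 600 decimal digits
print(decimal_digits(3076))  # 4
print(decimal_digits(n))     # 600
print(binary_digits(n))      # lands between 1800 and 2400, as estimated
```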

We analyzed Greenfield's factoring algorithm. Here the input is a

positive integer n with size #(n) (back to base 10) and the output is

either "n is prime" or "n=i·j" where i and j are integers

between 1 and n. The "running time" for this is n^2·6#(n)^2. Note that this is not a polynomial in #(n). Indeed, the running time in terms of #(n) is about 10^(2#(n))·6#(n)^2. This algorithm is exponential in terms of running time. There is no known polynomial time algorithm for factoring. However, if a suggested factorization ("n=i·j") is given, then we can check this in polynomial time (in fact, just 6#(n)^2 steps). You can win a million dollars by finding out whether every problem whose solutions can be checked in polynomial time actually has a polynomial time algorithm for solving it. This is the P vs. NP problem. | ||||||||||||||||||||||||||||||||||||||||||||||||||||
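A running time of n^2 multiplications suggests the most naive search possible: try every pair (i, j) and multiply. The sketch below is a reconstruction from that running time, so it is an assumption about the algorithm, not a copy of what was written on the board.

```python
def naive_factor(n):
    """Try all pairs i, j strictly between 1 and n; return a factorization or None."""
    for i in range(2, n):
        for j in range(2, n):
            if i * j == n:          # one multiplication per pair: about n^2 in all
                return (i, j)
    return None                     # no pair works, so n (at least 2) is prime


def check_factorization(n, i, j):
    """Checking a suggested factorization takes just one multiplication."""
    return 1 < i < n and 1 < j < n and i * j == n


print(naive_factor(35))                 # (5, 7)
print(naive_factor(13))                 # None, so 13 is prime
print(check_factorization(35, 5, 7))    # True
```

The contrast between the two functions is the point: finding the pair takes about n^2 tries, while checking a suggested pair is a single multiplication.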

| Thursday, September 18 |

I stated Euler's improvement (where the modulus is a product of two

distinct primes) to Fermat's Little Theorem. I tried to be more

careful with the hypotheses. In particular, I needed to define the

phrase relatively prime. For this I first defined the Greatest

Common Divisor (GCD) of a pair of positive integers, a and b. So a

positive integer c is

the GCD of a and b (written c=GCD(a,b)) if c divides both a and

b and c is the largest integer which divides both of them. Please

notice that 1 divides both a and b, so the collection of common

divisors always has at least one element! We computed examples, such

as 1=GCD(5,7) and 4=GCD(4,8) and 1=GCD(8,27). Now a and b are

relatively prime if 1=GCD(a,b). So a and b do not share any

common factors except 1. Euler asserted that if a is relatively prime

to the distinct prime numbers p and q, then a^((p-1)(q-1))=1

mod pq.

We tested Euler's theorem for 6=2·3. Here p=2 and q=3 so (p-1)·(q-1) is just 2. The Maple instruction seq(j^2 mod 6,j=1..5); asks that a sequence of values be computed as j runs from 1 to 5. The result is 1, 4, 3, 4, 1. As I remarked in class, this is somewhat deceptive, since there are only two numbers in the range from 1 to 5 which are relatively prime to 6. But if we try seq(j^24 mod 35,j=1..34); where p=5 and q=7 we get 1, 1, 1, 1, 15, 1, 21, 1, 1, 15, 1, 1, 1, 21, 15, 1, 1, 1, 1, 15, 21, 1, 1, 1, 15, 1, 1, 21, 1, 15, 1, 1, 1, 1 with lots of 1's! I asked if p and q are both primes which have about 100 decimal digits, what are the chances that an integer between 1 and pq is relatively prime to both p and q? The multiples of p and the multiples of q are the integers not relatively prime to p or q. So the list of "good" integers is the list of all integers from 1 to pq with the bad ones thrown away: about 10^200 (that's about pq) - 2·10^100. So overwhelmingly most integers will satisfy the hypotheses of Euler's result. I then covered an introduction to RSA. Most of the material is on pages 16-18 of my Gov's School Notes. Please look there. I discussed various attacks on RSA. The best known is factoring. If one is given N=pq, a product of two primes, and if N can be factored with p and q then known, it turns out that RSA can be broken easily. There are other attacks, and there is also the experience of having used the system for several decades. One reference is Boneh's paper (1999) surveying such attacks. Susan Landau has an article on the data encryption standard (DES) (2000) which may also be interesting. I will try to write some descriptions of projects this weekend which people can look at. I also will try to write an RSA homework assignment. And catch up on my sleep. | ||||||||||||||||||||||||||||||||||||||||||||||||||||
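The two Maple computations above can be repeated in Python as a check. This is a sketch; the function name is invented, and the built-in three-argument `pow` plays the role of Maple's `mod`.

```python
from math import gcd


def euler_powers(p, q):
    """Return j^((p-1)(q-1)) mod pq for j = 1, ..., pq-1."""
    n = p * q
    exponent = (p - 1) * (q - 1)
    return [pow(j, exponent, n) for j in range(1, n)]


small = euler_powers(2, 3)
big = euler_powers(5, 7)
print(small)  # [1, 4, 3, 4, 1]
print(big)

# The entries different from 1 sit exactly at the j sharing a factor with 35.
print([j for j, value in enumerate(big, start=1) if value != 1])
print([j for j in range(1, 35) if gcd(j, 35) != 1])
```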

| Monday, September 15 |

I briefly discussed how secure communication (diplomatic,

military, business), usually mediated by computer, is accomplished

"today".

The messages to be passed between Alice and Bob are usually converted in some well-known way to a string of bits, 0's or 1's. These messages may represent text or music/sound or images or ... and typically they tend to be big chunks of bits. Alice and Bob want to exchange lots of information securely.

| ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Thursday, September 11 |

I gave out some secret sharing

homework.

Topic 1 300 years ago ...

Topic 2 Classical cryptography

Topic 3 Key distribution

Topic 4 Crypto dreams ...

| ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Monday, September 8 |

I basically discussed what was on pages 7-10 of the Gov's School

notes. Here is the Maple information. I gave out shares in a secret mod P.

Sharing secrets, mod P

I have chosen a large prime number: P=1005005005001. All arithmetic in this problem will be done mod P. I also carefully created a polynomial, G(x), of degree 3. So G(x) "looks like" Ax^3+Bx^2+Cx+D where the constant term D is THE SECRET. I will give every student a "personal" and distinct share of the secret. Since G(x) has degree 3, at least four students will need to combine the information they have to find THE SECRET. The secret, although a number, also represents an English word. I have made a word using a simple process which the following table may help explain:

The English word SMILE becomes 19 13 09 12 05 which is more usually written 1913091205. This isn't a very convenient way of writing words, but it will be o.k. for what we do. Notice that you should always pair the digits from right to left, so that the number 116161205 will become 01 16 16 12 05 which is APPLE. The "interior" 0's will be clear but we need to look for a possible initial 0.
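The pairing rule can be sketched in a few lines. The code assumes the table sends each letter to its two-digit position in the alphabet (A=01, B=02, ..., Z=26), which is what the SMILE and APPLE examples suggest; the function names are made up.

```python
def number_to_word(number):
    """Decode a number into a word, pairing digits from right to left."""
    digits = str(number)
    if len(digits) % 2 == 1:
        digits = "0" + digits       # restore a possible initial 0
    pairs = [digits[i:i + 2] for i in range(0, len(digits), 2)]
    return "".join(chr(ord("A") + int(pair) - 1) for pair in pairs)


def word_to_number(word):
    """Encode a word as a number, two digits per letter (assumed A=01, ..., Z=26)."""
    return int("".join(f"{ord(ch) - ord('A') + 1:02d}" for ch in word.upper()))


print(number_to_word(1913091205))  # SMILE
print(number_to_word(116161205))   # APPLE
print(word_to_number("SMILE"))     # 1913091205
```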

How to win this contest and get a valuable

prize
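Winning requires combining four shares of a degree 3 polynomial. The sketch below shows one standard way to do that, Lagrange interpolation at x=0, under loud assumptions: the prime 2^61-1 is a stand-in rather than the P used in class, the coefficients and share points are invented, and the class handout (with its Maple instructions) may organize the computation differently.

```python
P = 2**61 - 1   # stand-in prime for this sketch, NOT the P from the handout


def make_share(coefficients, x):
    """Evaluate G(x) = A x^3 + B x^2 + C x + D mod P, coefficients given as [A, B, C, D]."""
    value = 0
    for c in coefficients:
        value = (value * x + c) % P     # Horner's rule
    return (x, value)


def recover_secret(shares):
    """Recover D = G(0) from four shares (x, G(x)) by Lagrange interpolation mod P."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        top, bottom = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                top = (top * (-xj)) % P
                bottom = (bottom * (xi - xj)) % P
        # pow(bottom, -1, P) is the inverse of bottom mod P (Python 3.8 or later)
        secret = (secret + yi * top * pow(bottom, -1, P)) % P
    return secret


secret = 1913091205                              # the word SMILE, as a number
coefficients = [12345, 67890, 24680, secret]     # invented A, B, C, then D
shares = [make_share(coefficients, x) for x in (1, 2, 3, 4, 5)]

print(recover_secret(shares[:4]) == secret)      # any four shares are enough
print(recover_secret(shares[1:5]) == secret)
```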

| ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Thursday, September 4 | Introduced myself, and discussed secret sharing (the

beginning of the Gov's School notes). I also sent e-mail to the whole

class. It contained references to

Adi Shamir's original secret sharing

paper and to some lecture notes on secret sharing from a computer science

course.

I gave out an information sheet. |