For this of course I needed to discuss simply connected: no holes, or the inside of any closed curve in the domain is also entirely in the domain. See pp.107-8 in the text.

I used the complex version of Green's Theorem for this result. See p.107 of the text. I remarked that the assumption of continuity for the derivative is not necessary. This is verified by Goursat's proof (see section 2.3.1) which is witty but will not be discussed by me, because I can't really see another use of the proof technique.

Then I went through the proofs of important theorems (see pp.107-111), including the Cauchy integral formula. I will continue with some of section 2.4 next time.

On Thursday I will lecture from 6:10 to 7:10, take a 10 minute break, and then lecture for another hour. I would like to do this for the next few Thursdays and catch up with the other section.

HOMEWORK

Please hand in these problems on Thursday, March 24:

2.3: 1, 2, 4, 9, 10, 14.

Diary entry in progress! More to come.

Tuesday, March 8

(No lecture!)

Thursday, March 3

(Lecture #13)

The object of today's class is to again look at power series: $\sum_{n=0}^{\infty} a_n z^n$. Such a series has a radius of convergence, R. Inside the radius of convergence, the series will converge to a sum, S(z), depending on z. This sum is a continuous function inside the radius of convergence. But more is true.


I gave a good proof of the second fact above, that S(z) is differentiable, and that its derivative is the term-wise differentiated series. This is a technical proof, probably the most complicated to be done in the whole course. I did want to convince people that the result was true. The proof in the textbook actually is more-or-less the same, perhaps reordered in ways that I don't find so appealing. But basically you constantly compare to geometric series, you use the fact that [{S(z+h)-S(z)}/h]-S´(z) can be written nicely using the Binomial Theorem, and that the binomial coefficients are positive, so that things work well with the triangle inequality and rearrangement of series.

I did not give a proof or much of a discussion of the first claim, that the differentiated series had the same radius of convergence. The textbook's version is good, I think. Basically the assertion is that putting n in front of the terms of a power series doesn't much change how it converges (think of the ratio test!)

We can now apply our results again and again, to see that the sum of a power series can be differentiated as often as we want. And we can also see that if $S(z)=\sum_{n=0}^{\infty} a_n z^n$, then by repeatedly differentiating and letting z be 0, the coefficients $a_n$ must be $S^{(n)}(0)/n!$. So the power series is actually the Taylor series of its sum inside the radius of convergence.

Much more surprising things will occur!

If I know that F(z) is analytic, and that F´(z)=f(z), and I have a curve C(t), for t in [a,b], then $\int_C f(z)\,dz=F(C(b))-F(C(a))$. That is, the line integral of the derivative is the value at the END minus the value at the start.

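So now we have another method to evaluate line integrals. Start with $\int_C f(z)\,dz$. IF we know an analytic function F(z) with F´(z)=f(z) on a domain containing C, then the integral is just F at the end of C minus F at the start of C.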

For example, suppose $f(z)=z^5$. This is an entire function, and I know it is the derivative of the entire function $F(z)=\frac{1}{6}z^6$. Suppose C is any curve that starts from 2-i and ends at -1-i. Then $\int_C z^5\,dz=\frac{1}{6}(-1-i)^6-\frac{1}{6}(2-i)^6$ (the value at the end minus the value at the start).
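Here is a small numerical sanity check of this example, only a sketch. The helper `integrate_segment` and the detour point `corner` are hypothetical names chosen for illustration; the midpoint rule stands in for the line integral. Two different paths from 2-i to -1-i should give the same answer, namely F(end)-F(start).

```python
# Sketch: integrate z**5 along two different paths from 2-i to -1-i
# and compare with F(end) - F(start), where F(z) = z**6 / 6.

def integrate_segment(f, z0, z1, steps=20000):
    """Midpoint-rule approximation of the line integral of f along the
    straight segment from z0 to z1."""
    dz = (z1 - z0) / steps
    return sum(f(z0 + (k + 0.5) * dz) for k in range(steps)) * dz

f = lambda z: z**5
F = lambda z: z**6 / 6

start, end = 2 - 1j, -1 - 1j
corner = 3 + 4j  # arbitrary detour point (an assumption, not from the notes)

direct = integrate_segment(f, start, end)
detour = integrate_segment(f, start, corner) + integrate_segment(f, corner, end)
exact = F(end) - F(start)

print(direct, detour, exact)
```

All three printed numbers should agree to several decimal places, which is the path independence that an antiderivative guarantees.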

You should not suppose, though, that the restrictions about the domain of f(z) and F(z) including C can be disregarded. We know that $\int_{\text{unit circle}}(1/z)\,dz=2\pi i$. Since if we did have an f(z)/F(z) pair where f(z)=1/z, the integral over a closed curve would be 0, we can conclude that there is no function analytic in a domain which includes the unit circle whose derivative in that domain is 1/z. "Locally" on pieces of the circle (in fact, any piece of the circle which discards one point) we can in fact find such an antiderivative. The function Log(z) is an antiderivative of 1/z, where we discard -1 from the circle.
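A quick numerical illustration of the contrast, as a sketch: `circle_integral` is a hypothetical helper that approximates an integral around a circle centered at 0 by the midpoint rule in the angle t.

```python
import cmath
import math

def circle_integral(f, radius=1.0, steps=20000):
    """Midpoint-rule approximation of the integral of f around the
    counterclockwise circle |z| = radius."""
    total = 0j
    dt = 2 * math.pi / steps
    for k in range(steps):
        z = radius * cmath.exp(1j * (k + 0.5) * dt)
        total += f(z) * 1j * z * dt  # dz = i z dt on the circle
    return total

one_over_z = circle_integral(lambda z: 1 / z)
fifth_power = circle_integral(lambda z: z**5)

print(one_over_z)   # close to 2*pi*i
print(fifth_power)  # close to 0, since z**5 has an entire antiderivative
```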

THIS IS NOT COMPLEX ANALYSIS SO YOU CAN DISREGARD IT!

Consider the real function of a real variable defined in a piecewise fashion by

$$f(x)=\begin{cases} e^{-1/x} & \text{for } x>0 \\ 0 & \text{for } x\le 0. \end{cases}$$

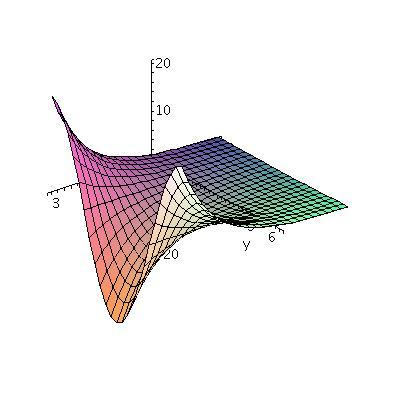

To the right is a Maple graph of this function. On the left,

of course we have a half line. Since the real exponential function of

negative reals is between 0 and 1, the graph on the right must be

between y=0 and y=1 (if x>0 it can't equal 0 since the exponential

function is never 0, and it can't equal 1 because 1/x can never be 0).

Of course, on the left all of the derivatives are 0. What about on the right, where x>0? There $f'(x)=e^{-1/x}\,[1/x^2]$. Since this is always positive, we know that f increases. In fact, the right-hand limit at 0 of $e^{-1/x}$ "is" $e^{-\infty}$ so it is 0. The function is continuous at 0, and therefore continuous everywhere. As $x\to\infty$, $e^{-1/x}\to e^0=1$. The Maple graph is totally justified.

I would like to investigate finer properties of the function f(x). In particular, I would like to look at its differentiability at 0. The left-hand derivative of f(x) at 0 certainly exists and is 0. What about the right-hand derivative? For x>0, [{f(x)-f(0)}/{x-0}] is the same as $(1/x)e^{-1/x}$. What happens as $x\to 0^+$ to this? Well, this is a Calc 2 exercise in L'Hopital's rule. I guess I would do it this way: put w=1/x, so the quotient is $w/e^w$ as $w\to\infty$, and the limit is 0.

I now know that f(x) is differentiable, and that its derivative is

$$f'(x)=\begin{cases} (1/x^2)\,e^{-1/x} & \text{for } x>0 \\ 0 & \text{for } x\le 0. \end{cases}$$

Let me do one more step. f´´(x) must exist away from 0 (I can use the product and Chain Rules on the right). What about f´´(0)? From the left again we see that the appropriate limit is 0. From the right, we officially need to consider [{f´(x)-f´(0)}/{x-0}] as $x\to 0^+$. Let's insert the formula for f´(x) and the value (just 0) of f´(0), so that we see we need to consider $(1/x^3)e^{-1/x}$ as $x\to 0^+$. But (w=1/x) this is $w^3/e^w$ as $w\to\infty$, and the limit is 0. An official proof needs induction, etc., but I don't need no steenkin' official proof ... In fact, this function f(x) is infinitely flat at the origin. It and all of its derivatives exist at 0, and the values of the function and all of its derivatives at 0 are 0. Suppose we wanted a power series which would equal f(x), a power series centered at 0. Well, if the series work the way they should (and they do) then the power series will be a Taylor series for f(x) at 0. But all of the coefficients will be 0. So here's a function which can't be equal to a power series in any interval centered at 0, even though we can differentiate the function as much as we want. Nothing that bad occurs in complex analysis.
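To get a feeling for how flat this function is, here is a short numerical sketch (not a proof). It tabulates $f(x)/x^k$ for a few powers k and a few small positive x; the particular values of k and x are arbitrary choices.

```python
import math

def f(x):
    """The piecewise function: exp(-1/x) for x > 0, and 0 otherwise."""
    return math.exp(-1.0 / x) if x > 0 else 0.0

# f(x) / x**k should shrink toward 0 as x -> 0+ for every fixed power k.
table = {}
for k in (1, 3, 10):
    for x in (0.1, 0.05, 0.01):
        table[(k, x)] = f(x) / x**k
        print(k, x, table[(k, x)])
```

Even after dividing by $x^{10}$, the values at x=0.01 are astronomically small, which is the numerical face of "every derivative at 0 is 0".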


Elementary theory of power series

A power series centered at $z_0$ is a sum of the form

$\sum_{n=0}^{\infty} a_n (z-z_0)^n$

where all of the $a_n$'s are complex numbers. Most of the time I will write power series centered at $z_0=0$ because I am lazy. We say that the power series converges if the sequence of partial sums has a limit. If the power series converges absolutely (that is, the series $\sum_{n=0}^{\infty} |a_n|\,|z|^n$, a series of non-negative numbers, converges) then the series will converge. I discussed this in lecture #4.

Radius of convergence

Suppose $\sum_{n=0}^{\infty} a_n z^n$ converges for some non-zero z (any power series will always converge at its center, here when z=0). Then the pieces of the series can't get large: that is, the numbers $|a_n|\,|z|^n$ are bounded (or else the successive partial sums, which should converge, will take bigger and bigger "steps" from each other). Suppose V is a bound for all of them. Look at what happens if we take a complex number w with |w|<|z|. Then

$$\Bigl|\sum_{n=0}^{\infty} a_n w^n\Bigr| \le \sum_{n=0}^{\infty} |a_n|\,|w|^n = \sum_{n=0}^{\infty} |a_n|\,|z|^n\,[|w|/|z|]^n.$$

Now look closely. |w|/|z| is some number r with r<1. We concluded that the other "stuff", $|a_n|\,|z|^n$, is bounded by some big number, V. So therefore we are left with the overestimate, $\sum_{n=0}^{\infty} V r^n$ with r a non-negative real number which is less than 1. But this series converges. So the original series, $\sum_{n=0}^{\infty} a_n w^n$, converges absolutely, and therefore converges.

Now we need to think about the situation a bit. As soon as you know the power series converges at a complex number, the series will converge absolutely at any complex number closer to its center. So the situation is like this: either the series converges for all complex numbers (perfectly possible, consider $\sum_{n=0}^{\infty} z^n/n!$) or it converges for no complex number except for the center (perfectly possible, consider $\sum_{n=0}^{\infty} n!\,z^n$) or there are some complex numbers for which it diverges and some for which it converges. But then the computation we just made shows that if the series converges at some complex number, then it converges at every point inside the circle (centered at the center of the power series) which passes through that number. Take the union of the insides of all those circles. The result will be the inside of a circle, and its radius is the radius of convergence.
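The two extreme cases can be poked at numerically. The following is a sketch with hypothetical helper names: `exp_partial_sum` adds up the first N terms of $\sum z^n/n!$, and `log10_factorial_term` reports the base-10 logarithm of the size of the n-th term of $\sum n!\,w^n$ (using `math.lgamma` so that the huge factorials never overflow).

```python
import cmath
import math

def exp_partial_sum(z, N):
    """Sum of z**n / n! for n = 0 .. N-1."""
    term, total = 1 + 0j, 0j
    for n in range(N):
        total += term
        term *= z / (n + 1)
    return total

def log10_factorial_term(w, n):
    """log10 of n! * |w|**n, computed through lgamma to avoid overflow."""
    return (math.lgamma(n + 1) + n * math.log(abs(w))) / math.log(10)

# Infinite radius of convergence: partial sums settle down even far from 0.
z = 3 + 4j
gap = abs(exp_partial_sum(z, 60) - cmath.exp(z))
print(gap)

# Radius of convergence 0: even at w = 0.01 the terms eventually explode.
sizes = [log10_factorial_term(0.01, n) for n in (10, 100, 300, 1000)]
print(sizes)
```

The terms of the second series shrink for a while and then grow without bound, so the series cannot converge at w=0.01 (or at any other non-zero w).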

Continuity of the sum

Look at $\sum_{n=0}^{\infty} a_n z^n$ and suppose it has radius of convergence R>0. Here "infinity" is a possibility and infinity will be >0. Let's call the sum of this series, S(z). If |z|<R, the series converges absolutely. Fix some z inside the circle of convergence. Take some number r>0 so that |z|+r is still less than R (possible -- you could take r=(1/2)[R-|z|], for example). Then $\sum_{n=0}^{\infty} a_n (z+w)^n$ will converge absolutely for all w's with |w|<r. Not only that, but if we compare "infinite tails" of series, we also know that

$$\sum_{n=N}^{\infty} |a_n|\,|z+w|^n \le \sum_{n=N}^{\infty} |a_n|\,(|z|+r)^n.$$

Since the series $\sum_{n=0}^{\infty} |a_n|\,(|z|+r)^n$ converges, I can choose N so that the tail, $\sum_{n=N}^{\infty} |a_n|\,(|z|+r)^n$, is less than epsilon. But then look at what we have left out: $\sum_{n=0}^{N-1} a_n (z+w)^n$. This is a polynomial in w. If w-->0 this polynomial will eventually differ by less than epsilon from its value at w=0, because polynomials are continuous.

Hey, I've just shown you that by neglecting an appropriate infinite tail, you can make the terms of a power series vary continuously. That means that the sum of the series, S(z), will be a continuous function inside its radius of convergence. This is dandy.

We reviewed and tried to understand what differentiability means

What's complex differentiability? Well, we want the limit

$$\lim_{h\to 0}\frac{f(z+h)-f(z)}{h}$$

to exist, and this limit will be called f´(z). Actually, the division sign makes this definition a bit difficult to work with. It is easier to try to "unroll" the definition in the following fashion:

$$\frac{f(z+h)-f(z)}{h} = f'(z)+\mathrm{Err}(f,z,h)$$

where Err(f,z,h) is an error term which depends on f and z and h, and all I know about it is that Err(f,z,h)-->0 as h-->0. We can then rewrite the equation and eliminate the division and the minus sign:

$$f(z+h)=f(z)+f'(z)\,h+\mathrm{Err}(f,z,h)\,h$$

Here the term on the left-hand side is a perturbed value of f, and the terms on the right-hand side describe a way of looking at the effects of the perturbation. The first piece on the right is the "old" value of f, then comes a first-order part, and then comes a part which is higher than first-order. And to me that's what complex differentiability means. (This way of looking at the derivative also makes other things, like verifying the Chain Rule, much easier.)

Compatibility conditions

I remarked that linear algebra tries to study collections of linear

equations systematically (a little math joke in that phrase, sorry).

So maybe one studies M equations in N unknowns. There are only a few

rather rough things one can learn in general. For example,

a homogeneous system of M equations in N unknowns with more unknowns than equations (N>M) must always have non-trivial (non-zero) solutions.

And here's another: if you have more equations than unknowns (M>N), in general the equations can't be solved: they don't have solutions unless certain additional conditions ("compatibility conditions") are satisfied.

For example,

$$2x+3y=A,\qquad x+2y=B,\qquad 5x+4y=C$$

has no solution unless C+7B-6A=0 (I think this is correct but I make lots of mistakes with linear algebra.)
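The compatibility condition can be checked by machine. This is only a sketch: `solve_first_two` is a hypothetical helper that solves the first two equations by hand-inverted formulas (the 2-by-2 determinant is 1), and `combo` holds the coefficients of A, B, C in the expression C+7B-6A.

```python
rows = [(2, 3), (1, 2), (5, 4)]   # coefficients of x and y in the three equations
combo = (-6, 7, 1)                # coefficients of A, B, C in C + 7B - 6A

# The combination -6*(eq. 1) + 7*(eq. 2) + (eq. 3) wipes out both x and y:
killed = [sum(c * row[j] for c, row in zip(combo, rows)) for j in range(2)]
print(killed)

def solve_first_two(A, B):
    """Solve 2x+3y=A, x+2y=B (determinant 1, so the inverse is easy)."""
    return 2 * A - 3 * B, -A + 2 * B

# For any A and B, the third equation forces C = 6A - 7B.
checks = []
for A in range(-3, 4):
    for B in range(-3, 4):
        x, y = solve_first_two(A, B)
        C = 5 * x + 4 * y
        checks.append(C + 7 * B - 6 * A)
print(set(checks))
```

Both printed results consist only of zeros, so the condition C+7B-6A=0 is the right one.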

And now back to complex analysis ...

Last time we tried to solve

$v_x=-2y$, $v_y=4x^3$

and couldn't get a solution. Well, we (sort of) have two linear equations in v, and maybe there should be a compatibility condition. There should be, and here it is: the derivative of v with respect to x and then with respect to y should equal the derivative of v with respect to y and then with respect to x. (Here that fails: differentiating $v_x=-2y$ with respect to y gives -2, while differentiating $v_y=4x^3$ with respect to x gives $12x^2$.) Certainly there are some very weird functions where this doesn't happen, but polynomials are nice. I quote:

Fact from calc 3 If a function has continuous second partial derivatives, then its mixed second partial derivatives must be equal. The proof uses the Mean Value Theorem.

So look at the Cauchy-Riemann equations:

$u_x=v_y$

$u_y=-v_x$

Differentiate the first with respect to x and the second with respect to y. We get

$u_{xx}=v_{yx}$

$u_{yy}=-v_{xy}$

Since $v_{yx}=v_{xy}$ I know that $u_{xx}=-u_{yy}$, usually written

$u_{xx}+u_{yy}=0$

This is Laplace's equation, and the stuff on the left-hand side is called the Laplacian of u. Solutions of Laplace's equation are called harmonic functions, and harmonic functions come up all over the place. Notice, please, that $x^4+y^2$ is not harmonic (its Laplacian is $12x^2+2$), so it cannot be the real part of a complex differentiable function.
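A rough numerical check of harmonicity, as a sketch: `laplacian` is a hypothetical helper using the standard five-point finite-difference approximation, and the sample point (1,2) is arbitrary. The second test function, 3y+7xy, is the real part used in the lecture #9 exercise.

```python
def laplacian(u, x, y, h=1e-3):
    """Five-point finite-difference approximation of u_xx + u_yy at (x, y)."""
    return (u(x + h, y) + u(x - h, y) + u(x, y + h) + u(x, y - h)
            - 4 * u(x, y)) / h**2

not_harmonic = laplacian(lambda x, y: x**4 + y**2, 1.0, 2.0)
harmonic = laplacian(lambda x, y: 3 * y + 7 * x * y, 1.0, 2.0)

print(not_harmonic)  # close to 12*1**2 + 2 = 14
print(harmonic)      # close to 0
```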

Harmonic; conjugate harmonic

In fact, if f(z) is complex differentiable, with f(z)=u(x,y)+iv(x,y),

then both u(x,y) and v(x,y) are harmonic functions. v(x,y) is called

a harmonic conjugate of u(x,y). It is interesting to realize

that a "random" polynomial in x and y is very unlikely to be

harmonic. In fact, a goal of the course is to justify interest in

these rather rare (?) objects.

The converse to CR

Suppose that u(x,y) and v(x,y) have continuous first partial derivatives. Further suppose that the Cauchy-Riemann equations are correct at a point (x,y):

$u_x=v_y$

$u_y=-v_x$

Then f(z)=u(x,y)+iv(x,y) is complex differentiable at z=x+iy.

I actually attempted to verify this. I know another fact from calc 3. If h=a+ib (think of a and b as very small):

$u(x+a,y+b)=u(x,y)+u_x(x,y)\,a+u_y(x,y)\,b+\mathrm{Err}_1(u,x,y,a,b)\,(|a|+|b|)$

$v(x+a,y+b)=v(x,y)+v_x(x,y)\,a+v_y(x,y)\,b+\mathrm{Err}_2(v,x,y,a,b)\,(|a|+|b|)$

and $\mathrm{Err}_1(u,x,y,a,b)$ and $\mathrm{Err}_2(v,x,y,a,b)$ both -->0 as a and b both -->0.

Now let's look at f(z+h)=u(x+a,y+b)+iv(x+a,y+b). This is

$[u(x,y)+u_x(x,y)\,a+u_y(x,y)\,b+\mathrm{Err}_1(u,x,y,a,b)(|a|+|b|)]+i[v(x,y)+v_x(x,y)\,a+v_y(x,y)\,b+\mathrm{Err}_2(v,x,y,a,b)(|a|+|b|)]$

Let us reorganize this stuff:

u(x,y)+iv(x,y) (this is f(z))

$\{u_x(x,y)\,a+u_y(x,y)\,b\}+i\{v_x(x,y)\,a+v_y(x,y)\,b\}$

Now if $A=u_x=v_y$ and $B=u_y=-v_x$, this becomes {Aa+Bb}+i{-Ba+Ab} and we can recognize this as (A-iB)·(a+ib). If we think about this, then f´(z) should be A-iB and a+ib is h, so we have f´(z)h.

What's left over? This:

$\{\mathrm{Err}_1(u,x,y,a,b)(|a|+|b|)\}+i\{\mathrm{Err}_2(v,x,y,a,b)(|a|+|b|)\}$

I would like this to be Err(f,z,h)h. Remember that all the Err terms go to 0 as a+ib-->0. What's left to consider? Well, $|h|=\sqrt{a^2+b^2}$ compared to |a|+|b|. So I'll compare them.

Inequalities

Surely $\sqrt{a^2+b^2}\le|a|+|b|$ because if you square both sides, you get $a^2+b^2\le|a|^2+2|a|\,|b|+|b|^2$. Now cancel $a^2$ with $|a|^2$ and $b^2$ with $|b|^2$ and we get $0\le 2|a|\,|b|$ which is certainly true.

Since $\sqrt{a^2+b^2}\le|a|+|b|$, I know that if |a|+|b|-->0, then |a+ib|-->0. What about the converse?

Look at $|a|+|b|\le 2\sqrt{a^2+b^2}$. I think I tried 2 in class. Now square and see if we can clarify things:

$|a|^2+2|a|\,|b|+|b|^2\le 4(a^2+b^2)$.

We can cancel some terms, and get

$2|a|\,|b|\le 3(a^2+b^2)$.

But $2|a|\,|b|\le a^2+b^2$ is true, because

$0\le a^2-2|a|\,|b|+b^2=(|a|-|b|)^2$

is certainly true.

Since $|a|+|b|\le 2\sqrt{a^2+b^2}$ I now know that if |h|=|a+ib|-->0, then |a|+|b|-->0. Wow! We are done.

What about $|z|^2$?

If $f(z)=|z|^2=x^2+y^2$, at which points is f(z) complex differentiable? I just need to check the Cauchy-Riemann equations when $u(x,y)=x^2+y^2$ and v(x,y)=0. Then:

$u_x=v_y$ becomes 2x=0.

$u_y=-v_x$ becomes 2y=0.

So $|z|^2$ is complex differentiable only at the point z=0.
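The same conclusion shows up if we just compute difference quotients in two directions. A sketch, with a hypothetical helper `quotient` and an arbitrarily chosen step size and test point 1+i:

```python
def quotient(f, z, h):
    """Difference quotient (f(z+h) - f(z)) / h for a complex step h."""
    return (f(z + h) - f(z)) / h

f = lambda z: abs(z) ** 2
step = 1e-6

# At z = 0 the quotient is small whichever way h approaches 0.
at_zero = [quotient(f, 0j, step), quotient(f, 0j, step * 1j)]
# At z = 1+i a real step and an imaginary step disagree (about 2 versus -2i).
at_point = [quotient(f, 1 + 1j, step), quotient(f, 1 + 1j, step * 1j)]

print(at_zero)
print(at_point)
```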

More vocabulary: analyticity (holomorphicity?)

It turns out that complex differentiability is not quite the correct

definition. Here's the correct definition:

A function f(z) defined on a connected open set is

(complex) analytic if f(z) is complex differentiable at every point

of the set.

This definition allows a neat theory. I remark that the word

holomorphic is used instead of analytic sometimes.

f´=0

Here's a first fact: if f(z) is analytic and if f´(z)=0 for all z,

then f(z) is constant.

Notice that if the domain of f(z) is not a connected open set, then this result is false, because you could have f(z) equal to one constant in one "blob" and another constant in another "blob".

Well, if f´(z)=0 for all z, then the Cauchy-Riemann equations, combined with the observation that $f'(z)=u_x+iv_x$, show that $u_x=0$ and $u_y=0$ and $v_x=0$ and $v_y=0$ for all x and y. This means that u and v must be constant on horizontal line segments (because of the x derivatives) and on vertical line segments (because of the y derivatives). But in a connected open set, any two points can be connected, not only by curves, but also by paths which are made up entirely of horizontal and vertical line segments. So the values at any two points must be equal because of the previous observations about vertical and horizontal lines.

Other conditions

If f(z) is analytic and has only real values, then f(z) is

constant.

Why? Because then v is 0, and so vx=0 always

and vy=0 always, then the Cauchy-Riemann equations

tell you that f´(z) is 0 always. So f(z) must be constant.

If f(z) is analytic and has only imaginary values, then f(z) is

constant.

Why? Interchange u and v in what we just did.

If f(z)=u(x,y)+iv(x,y) where u and v are real, and if $u^2+v^2$ is constant, then f(z) is constant.

Look at the clever proof on p.82 of the text. Here is another proof, not so clever: if $u^2+v^2=C$, then d/dx this equation. The result is

$2u\,u_x+2v\,v_x=0.$

Of course we can do the same thing with d/dy:

$2u\,u_y+2v\,v_y=0.$

Now use the Cauchy-Riemann equations. This will get us:

$2u\,u_x-2v\,u_y=0.$

$2v\,u_x+2u\,u_y=0.$

Look closely at this system of equations:

$$\begin{pmatrix} 2u & -2v \\ 2v & 2u \end{pmatrix}\begin{pmatrix} u_x \\ u_y \end{pmatrix}=\begin{pmatrix} 0 \\ 0 \end{pmatrix}$$

This is a system of two homogeneous linear equations in the two "unknowns", $u_x$ and $u_y$. From linear algebra we know that such a system always has the trivial solution (where the two unknowns are 0). This would be fine, because then we could conclude that u is constant, and then (CR) v is constant. But does the system ever have non-trivial solutions? Only when the determinant of the coefficient matrix is zero. Here that determinant is $4u^2+4v^2$. This quantity we are assuming is constant. Hey, if it is the zero constant, then both u and v must be 0, so f is constant. If it is not the zero constant, then linear algebra tells us that $u_x=0$ and $u_y=0$. Therefore, as before, u is constant and so must v be.

Why? Geometry for the future

The previous results are actually reflections of the following truth:

if an analytic function is not a constant function, then the image of

the domain of the analytic function

will always be an open subset of C. This is not obvious, and nothing

like it is true in elementary real analysis.

Exam warning!

I'll give an exam a week from Tuesday. The exam day is therefore

Tuesday, March 1.

HOMEWORK


Please begin to read section 2.2 (sorry, Mr. Cohen, I am skipping

2.1.1).

2.1: 23

In class I exhibited a function which was complex differentiable only

at 0. Can you find a function defined on all of C which is complex

differentiable only at 0 and 1?

2.2: 2, 3, 9, 11, 13, 14, 17

Tuesday, February 15

(Lecture #9)

The definition of complex differentiability

Here we take advantage of the fact that C is a field and just copy the definition from R. The limit of the difference quotient, if it exists, is again called the derivative.

Examples

$z^n$ is complex differentiable everywhere (it is entire). Constants are entire. Sums, products, and quotients (these where defined) of appropriate functions are complex differentiable. The chain rule holds. (I recommend that you try to verify the chain rule.)

Exp

I attempted to, and finally succeeded in, with students' valiant help, verifying that the exponential function was complex differentiable. This was conceived as a lesson, not just of the subject matter, but also of the difficulty of reading a math textbook. There is a need for intensity and engagement, a need for active participation. That is what I hope students got from this episode.

Counterexample

The function f(z)=Conjugate(z)=x-iy is not complex differentiable at any point! This we showed by first taking h=a+ib and making a=0 and b-->0, and then making b=0 and a-->0 in the definition. The results should be the same, since these difference quotients should approach the same limit. But the results are two distinct constants (-1 and 1), and never equal.
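The two directional difference quotients are easy to see numerically. A sketch, where `quotient` is a hypothetical helper and the point z=2+3i and step size are arbitrary choices:

```python
def quotient(f, z, h):
    """Difference quotient (f(z+h) - f(z)) / h for a complex step h."""
    return (f(z + h) - f(z)) / h

conj = lambda z: z.conjugate()
z = 2 + 3j

along_real = quotient(conj, z, 1e-6)        # b = 0, a --> 0
along_imag = quotient(conj, z, 1e-6 * 1j)   # a = 0, b --> 0

print(along_real)  # close to 1
print(along_imag)  # close to -1
```

Changing z changes nothing: the two numbers stay near 1 and -1, so the limit cannot exist anywhere.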

A question

I didn't raise this in class, but now a "sophisticate" might ask a question: is the definition correct? Here I use the word in an uncommon way which I will explain. If nice functions (and Conjugate(z) is fairly nice: it is linear) don't satisfy the definition, maybe the definition is too strict for use. We'd like to have a rich theory, covering many examples with many applications. Well, it turns out that there will be many examples and applications, although that is not clear now. And Conjugate(z) is real linear, but it is not complex linear, so we shouldn't worry too much about it.

The Cauchy-Riemann equations

Suppose f(z)=f(x+iy)=u(x,y)+iv(x,y), where u(x,y) and v(x,y) are real differentiable functions. If f(z) is complex differentiable, then both first partial derivatives of u(x,y) and v(x,y) exist, and

$u_x=v_y$

$u_y=-v_x$

This pair of equations is called the Cauchy-Riemann equations. We proved this by imitating our two limits which we used to investigate the function Conjugate(z).

From the real part

I suggested that we try the following exercise: if u(x,y)=3y+7xy, then find a corresponding v(x,y) and see if we can write a corresponding f(z) in terms of z=x+iy. I suggested a line of investigation based on the Cauchy-Riemann equations. We did exactly this, and we were successful. Let me try to show you: the Cauchy-Riemann equations give $v_x=-u_y=-3-7x$ and $v_y=u_x=7y$. Integrating the first equation:

$v(x,y)=-3x-(7/2)x^2+\mathrm{STUFF}_1(y)$.

Integrating the second equation:

$v(x,y)=(7/2)y^2+\mathrm{STUFF}_2(x)$.

Now we compare the two descriptions of v(x,y), and learn that

$v(x,y)=-3x-(7/2)x^2+(7/2)y^2+$ possible constant.

The various STUFF's of course conceal parts of each description. This process was studied in calculus and also in some aspects of differential equations. It reconstructs a function from its gradient. Here I'll set the possible constant to 0, but warn that at some times this is not appropriate.
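For the exercise with u(x,y)=3y+7xy, one candidate for f(z) is $-\tfrac{7}{2}i\,z^2-3i\,z$. That formula is my own reconstruction by inspection and is not stated in these notes, so treat it as an assumption; the sketch below simply tests it against u and v at random points.

```python
import random

def f(z):
    # Candidate formula found by inspection (an assumption, not from the notes).
    return -3.5j * z**2 - 3j * z

def u(x, y):
    return 3 * y + 7 * x * y

def v(x, y):
    # The harmonic conjugate found above, with the constant set to 0.
    return -3 * x - 3.5 * x**2 + 3.5 * y**2

random.seed(0)
worst = 0.0
for _ in range(1000):
    x, y = random.uniform(-5, 5), random.uniform(-5, 5)
    worst = max(worst, abs(f(complex(x, y)) - complex(u(x, y), v(x, y))))

print(worst)  # should be at the level of rounding error
```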

Another example?

This was vicious. I suggested we try another polynomial for u(x,y): just $x^4+y^2$. We followed the same outline, but ran into some difficulty in step 3. What's happening?

HOMEWORK

Please begin reading Chapter 2, and do the previously assigned problems. Some of them are difficult.

Thursday, February 10

(Lecture #8)

Here are some facts we need to know about line integrals.

The definition

Independence of parameterization

Linearity in the integrand, u(z).

"Linearity" (as much as you'll let me!) in the curve, C.

Estimating the integral, with weak and strong justification.

Let's compute $\int_0^1 t^2+2ti\,dt$. (Why do I need to be so careful? Wait, wait.) I can antidifferentiate polynomials. This works out to (1/3)+i. The modulus of this complex number answer is sqrt(10)/3, which is about 1.054092553. Therefore the modulus of the integral is about 1.054092553.

Much of mathematics is not very subtle. A great deal consists of trying to find out if changing the order of doing things changes the answer. Here the "things" are integration and taking modulus. Onward!

The integrand is $t^2+2ti$, and its modulus is $\sqrt{t^4+4t^2}$ which is $t\sqrt{t^2+4}$. Hah! The alert Calc 1 student will now see that this is a function whose antiderivative can be easily written: it is $(1/3)[t^2+4]^{3/2}$. So I can compute the integral of the modulus of this function exactly, and the answer is (5/3)sqrt(5)-(8/3). A numerical approximation of this is 1.060113297.

These computations have been facilitated by my friend, Maple.

What have I done? I have computed $\int_0^1|g(t)|\,dt$ and $\bigl|\int_0^1 g(t)\,dt\bigr|$. The first is approximately 1.060113297 and the second is approximately 1.054092553.
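Maple is not needed to reproduce these two numbers. Here is a sketch using a plain midpoint rule; `integrate` is a hypothetical helper and the number of steps is an arbitrary choice.

```python
import math

def integrate(g, a, b, steps=100000):
    """Midpoint-rule approximation of the integral of g over [a, b];
    g may be real- or complex-valued."""
    h = (b - a) / steps
    return sum(g(a + (k + 0.5) * h) for k in range(steps)) * h

g = lambda t: t**2 + 2j * t

modulus_of_integral = abs(integrate(g, 0.0, 1.0))
integral_of_modulus = integrate(lambda t: abs(g(t)), 0.0, 1.0)

print(modulus_of_integral)  # about sqrt(10)/3
print(integral_of_modulus)  # about (5*sqrt(5) - 8)/3
```

The modulus of the integral comes out slightly smaller than the integral of the modulus.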

Now let me give you a heuristic reason for a more general assertion. Of course, heuristic means

adj. 1. allowing or assisting to discover. 2. [Computing] proceeding to a solution by trial and error.

Suppose g(t) is a complex-valued function defined for t's in the interval [a,b]. Now $\int_a^b g(t)\,dt$ is, to me, really a BIG (Riemann) sum:

$\sum_{j=1}^{N} g(t_j)(t_j-t_{j-1})$.

How large can this be? Since the complex numbers can't be ordered, I use modulus instead, and ask how large can $\bigl|\sum_{j=1}^{N} g(t_j)(t_j-t_{j-1})\bigr|$ be? The Triangle Inequality applies here, and an overestimate is

$\sum_{j=1}^{N} |g(t_j)|\,(t_j-t_{j-1})$.

I don't need the | | around $(t_j-t_{j-1})$ because $(t_j-t_{j-1})$ is a positive real number. But $\sum_{j=1}^{N} |g(t_j)|\,(t_j-t_{j-1})$ is a Riemann sum for another integral: $\int_a^b|g(t)|\,dt$. All of this leads me to believe that

$$\Bigl|\int_a^b g(t)\,dt\Bigr|\le\int_a^b|g(t)|\,dt.$$

This inequality is correct. We have seen one numerical agreement. The discussion using Riemann sums is quite difficult to convert into a proof. There is a very nice, rather tricky, device to prove this result on p.61 of the text, which you might want to look at.

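The ML inequality

If $|u(z)|\le M$ for every z on the curve C, and L is the length of C, then $\bigl|\int_C u(z)\,dz\bigr|\le ML$.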

This inequality will be used about 370 times by the instructor and by students in the course. Let me show you some examples, which will be silly because they are out of context.

ML example #1

I considered the curve $S_R$, which I drew on the board. It was a semicircle of radius R in the upper halfplane, centered at the origin. The integrand was $1/z^2$. I wanted to investigate the asymptotics of $\int_{S_R}(1/z^2)\,dz$ as R-->infinity. In this case the integral can be evaluated exactly, but I wanted to use the ML inequality. So:

$\bigl|\int_{S_R}(1/z^2)\,dz\bigr|\le ML$. Here L is half the circumference of a circle of radius R, and that's (Pi)R. What about M? Well, on the curve, |z|=R, so $|1/z^2|=1/R^2$. Therefore the modulus of the integral is at most $(\pi)R(1/R^2)$. The $R^2$ on the "bottom" dominates as R-->infinity, so certainly this integral must -->0.
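Since this integral can be evaluated exactly, the ML bound can be compared with the truth. A sketch follows: `semicircle_integral` is a hypothetical helper, and I am assuming the semicircle is traversed counterclockwise from R to -R, in which case the antiderivative -1/z gives exactly 2/R.

```python
import cmath
import math

def semicircle_integral(f, R, steps=20000):
    """Midpoint-rule approximation of the integral of f over the upper
    semicircle of radius R, traversed counterclockwise from R to -R."""
    total = 0j
    dt = math.pi / steps
    for k in range(steps):
        z = R * cmath.exp(1j * (k + 0.5) * dt)
        total += f(z) * 1j * z * dt  # dz = i z dt
    return total

results = []
for R in (1.0, 10.0, 100.0):
    value = semicircle_integral(lambda z: 1 / z**2, R)
    results.append((R, abs(value), math.pi / R))
    print(R, abs(value), math.pi / R)
```

The middle column tracks 2/R and always sits below the ML bound Pi/R in the last column; both go to 0 as R grows.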

ML example #2

I used the same $S_R$, but now I wanted a more interesting u(z). I think I tried something like $u(z)=1/[z^4+5z+4]$. The exact numbers don't matter: what does matter is the estimation. Again, to understand the asymptotic behavior as R-->infinity, I used ML, and L was (Pi)R as before. Since u(z) is a bit more complicated, I had to use the techniques introduced in Lecture #2 to estimate it. We did some examples then which you may review.

Suppose |z|>10 (mostly picked at random). Then $|z^4+5z+4|\ge|z|^4-|5z+4|$. But how large can |5z+4| be? Well, on $S_R$ where |z|=R>10, I know that $|5z+4|\le 5|z|+4\le 2|z|^2$. But $2\le(1/2)|z|^2$ for these z's, and therefore $|5z+4|\le(1/2)|z|^4$, and $|z^4+5z+4|\ge|z|^4-(1/2)|z|^4=(1/2)|z|^4$. There is a great deal of sloppiness and waste in here: the inequalities are likely to be very far from equalities but I just want to get an answer.

Now I know for z on $S_R$, $|u(z)|\le 1/[(1/2)|z|^4]$ so that I can take $2/R^4$ as my M. The modulus of the integral is therefore bounded by $(\pi)R[2/R^4]$ and this surely -->0 as R-->infinity. In this case, I don't think it is at all possible to compute exact values of the integral, but the estimation is very easy.

| Green's Theorem |

|---|

| |||||

z=t x=Re(z)=t dz=dt |

z=it+1 dz=i dt x=Re(z)=1 |

z=-t+1+i dz=-dt x=Re(z)=-t+1 |

z=i-it dz=-i dt x=Re(z)=0 | ||

Therefore the integral is 1/2+i-1/2+0=i. This isn't 0: what a disappointment. Sometimes people misremember Green's Theorem and it might sound like the line integral over almost any closed curve of almost anything is 0. That is far from true.

A sort of statement of Green's Theorem

Well, let me quote the result in the text:

$\int_\Gamma f(z)\,dz = 2i \iint_\Omega [f_x+if_y]/2 \,dx\,dy$.

Here Gamma is the whole boundary curve of a region Omega, oriented so that the region is to the left of the curve Gamma as we move along Gamma. This is a Green's Theorem changed so that we can use it in complex analysis. The $f_x$ and $f_y$ are the partial derivatives with respect to x and y. We can check it for the case we just computed, where f(z)=x. The double integral's integrand, $[f_x+if_y]/2$, is 1/2, and the area of the square is 1, and the 2i out in front makes everything come out correctly: both sides are i.

A sort of proof

If we wanted to check more generally (whisper the word, proof) then ... well, since both sides are linear we could start with looking at $2i\iint_\Omega [f_x]/2\,dx\,dy = i\iint_\Omega f_x\,dx\,dy$. We can use the Fundamental Theorem of Calculus to convert the integral of the partial with respect to x into f(1,y)-f(0,y) because the integral over the unit square has both x and y going from 0 to 1. Then the double integral becomes $i\int_{y=0}^{y=1}[f(1,y)-f(0,y)]\,dy$. This then breaks up (and I'll change y to t):

$\int_{t=0}^{t=1} f(1,t)\,i\,dt - \int_{t=0}^{t=1} f(0,t)\,i\,dt$.

The first of these integrals is the integral of f(z) over the right-hand side of the boundary of the unit square, going up, and the second (with its minus sign) is the integral of f(z) over the left-hand side of the unit square, going down. The other two sides are gotten from the $f_y$ term in a similar manner.
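The unit-square computation with f(z)=Re(z)=x can be repeated numerically. A minimal sketch, assuming each side is parameterized by t in [0,1] as in the four parameterizations listed earlier; the helper `side_integral` is a made-up name. The four side integrals are 1/2, i, -1/2 and 0 (on the right side x=1 and dz=i dt, so that side contributes i).

```python
def side_integral(z_of_t, dz_dt, n=1000):
    """Midpoint-rule value of the integral of Re(z) dz over one side, t in [0,1]."""
    h = 1.0 / n
    total = 0j
    for k in range(n):
        t = (k + 0.5) * h
        total += z_of_t(t).real * dz_dt * h
    return total

# (parameterization z(t), constant derivative dz/dt) for the four sides
sides = [
    (lambda t: complex(t, 0), 1),        # z = t
    (lambda t: complex(1, t), 1j),       # z = it + 1
    (lambda t: complex(1 - t, 1), -1),   # z = -t + 1 + i
    (lambda t: complex(0, 1 - t), -1j),  # z = i - it
]

values = [side_integral(z, dz) for z, dz in sides]
boundary_integral = sum(values)

# Double-integral side: i * (f_x + i f_y) * area, with f = x and area 1.
double_integral_side = 1j * (1 + 1j * 0) * 1.0

print(values, boundary_integral, double_integral_side)
```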

Now look at the important integral ...

We previously computed the most important line integral. Let's try it again. Here f(z)=1/z=1/(x+iy), and the region, Omega, is the disc of radius R centered at the origin. The corresponding double integral has as integrand $[f_x+if_y]/2$. I'll use the Chain Rule and get $f_x(z)=-[1/z^2]\cdot 1$ and $f_y(z)=-[1/z^2]\cdot i$. Therefore the integrand $[f_x+if_y]/2 = \{-[1/z^2]\cdot 1 + i\,(-[1/z^2]\cdot i)\}/2 = 0$ if we recognize that $i^2$ is -1. So use of Green's Theorem in this most important example yields 0. This is distressing since we previously computed it and got 2(Pi)i. What is wrong?

The use of Green's Theorem here is not valid.

Better state Green's Theorem more precisely

The functions in Green's Theorem need to be differentiable ("smooth") at every point on and inside the curve. Even one "lousy" point (a singularity) can make the conclusion of the theorem false. This is subtle, and you should reinforce it by thinking, again and again, that 2(Pi)i is not the same as 0.

Now look at what "inside" means using a weird computation

Let's try to compute $\int_B (1/z)\,dz$. Here B is the oriented boundary of the square whose sides are parallel to the coordinate axes, centered at 0, and whose side length is 4. A direct computation of this integral by parameterization is (barely) possible. I've done it and it is very tedious and very unpleasant. We can be, instead, a bit devious, and compute the integral quite easily.

As indicated in the picture, the curve A will be the positively oriented unit circle with center 0. The region R is the subset of C between these curves. Notice that the correctly oriented boundary of R is B-A. The minus sign is very necessary. We can apply Green's Theorem to this R with 1/z, because the only singularity, 0, is outside of R and is not on A or B. But we saw before that the integrand of the double integral for this function is 0 (when we computed $[f_x+if_y]$ for f(z)=1/z). Therefore the double integral term in Green's Theorem is 0. What's the other side? It is $\int_{B-A} (1/z)\,dz$. Thus $\int_{B-A} (1/z)\,dz=0$, or $\int_B (1/z)\,dz-\int_A (1/z)\,dz=0$, or $\int_B (1/z)\,dz=\int_A (1/z)\,dz$. But the A integral is 2(Pi)i, and therefore the B integral is 2(Pi)i.

This is quite tricky, and I will use ideas like this frequently in the remainder of the course.
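For anyone who would rather not do the tedious direct computation by hand, here is a rough numerical version of it. This is a sketch only: it uses a midpoint rule on each side of the square B (corners at 2-2i, 2+2i, -2+2i, -2-2i, traversed counterclockwise), and the helper name is hypothetical.

```python
import math

def integral_one_over_z_on_segment(p, q, n=20000):
    """Midpoint-rule value of the integral of 1/z along the segment from p to q."""
    dz = (q - p) / n
    return sum(dz / (p + (k + 0.5) * dz) for k in range(n))

# Corners of the square of side length 4 centered at 0, counterclockwise.
corners = [2 - 2j, 2 + 2j, -2 + 2j, -2 - 2j]

B_integral = sum(
    integral_one_over_z_on_segment(corners[k], corners[(k + 1) % 4])
    for k in range(4)
)

# Compare the numerical value with 2*Pi*i.
print(B_integral, 2j * math.pi)
```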

What's inside?

The logic of the preceding computation can be generalized, so that if C is a simple closed curve (oriented positively) and p is a point not on C, then $\int_C [1/(z-p)]\,dz$ is either 0 or 2(Pi)i. In the first case, p is outside of C and in the second case, p is inside C. Maybe this is how to figure out what inside and outside are!
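The "inside or outside" test can be tried on a polygon. A minimal sketch, assuming the closed curve is a positively oriented polygon given by its vertices; the functions `integral_over_polygon` and `is_inside` are illustrative names, and the threshold Pi is just a convenient value between 0 and 2·Pi.

```python
import math

def integral_over_polygon(p, vertices, n=5000):
    """Midpoint-rule value of the integral of 1/(z-p) over the closed polygon."""
    total = 0j
    m = len(vertices)
    for j in range(m):
        a, b = vertices[j], vertices[(j + 1) % m]
        dz = (b - a) / n
        total += sum(dz / (a + (k + 0.5) * dz - p) for k in range(n))
    return total

def is_inside(p, vertices):
    """True when the integral is close to 2*Pi*i rather than close to 0."""
    return abs(integral_over_polygon(p, vertices)) > math.pi

# The square B from the previous computation, counterclockwise.
square = [2 - 2j, 2 + 2j, -2 + 2j, -2 - 2j]
print(is_inside(0.5 + 1j, square), is_inside(3 + 0j, square))
```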

|

| The mechanism of the Mad Count |

|---|

Four of the wonderful students in the class volunteered (always better than the truth, which is closer to were dragooned) to draw some pictures with me. I only show the results of three of the students. I set up the exponential function mapping from a domain copy of C to a range copy of C. Then I stood at the range copy, with my chalk at w=1, which I also called HOME. I asked each of the students, each with their individually colored chalk, to choose a value of log(1). Since this is a complex analysis course, there were certainly enough distinct values to choose from. Each student could have their own value of log(1). In my head (sometimes small, sometimes large, frequently empty) I saw a sort of mechanical linkage reaching backwards from my 1 to their choices of log(1). The linkage would "automatically" respond to any changes of position in my location by moving to nearby places whose value of exp would be where my chalk moved to. Sigh. There's no pathology in this course: things will be continuous, things will move nicely, etc. Much more subtle problems will be met.

|

The first trip Then I carefully and slowly (well, almost) moved my chalk from HOME in a simple closed curve. The curve was made up of circular arcs centered at the origin and pieces of rays which started at the origin. I used these curves because last time we had previously computed how vertical and horizontal lines in the domain were mapped. The inverse images of my "trip" from HOME to HOME were carefully drawn. They were congruent, because exp is 2Pii periodic. Each started from each student's choice of log(1) and returned to that choice. |

|

|

The second trip Well, now I left HOME and traveled in a circle around 0, and returned to HOME. The students attempted to imitate the Mad Count's device, and there was some controversy. If we tried to make everything continuous, then, even though my path in the range was a simple closed curve, each student drew a path going from one choice of log(1) to another choice of log(1). This is very, very mysterious. I drew a few more paths in the range and mentioned what would happen in the domain, and said we would need to explain all this in great detail later. This is one of my goals in the course. |

|

Right now, no sine, no cosine!

Section 1.5 of the text discusses sine and cosine. I will save these

functions until later. I want to get into the "core" of the course

(and the subject) as soon as possible.

Vocabulary about curves

This stuff, which must be understood by 403 students, should have been

covered in any calc 3 course, and is discussed in section 1.6 of the

text.

| Phrase | Proper definition | Possible picture |

|---|---|---|

| Curve | A curve is a function whose domain is an interval, [a,b], in R, and whose range is in C. If C(t) is the curve, we could write C(t)=x(t)+iy(t). In this course, these functions will generally be at least continuous. |  |

| Smooth (differentiable) curve | C(t)=x(t)+iy(t) should be differentiable, so that x(t) and y(t) are differentiable. Such a curve has a nice tangent vector at every point. |  |

| Piecewise smooth curve | The curve C(t) is certainly continuous. But additionally, we can divide the interval [a,b] into a finite number of pieces, and in each of these subintervals C(t) is smooth. This will allow us to take care of curves with finite numbers of corners, for example. |  |

| Simple curve | A simple curve is one which does not intersect itself except maybe ... at the ends (see the next plus one definition). So if the domain of C(t) is [a,b], and if a<s<t<b, then C(s) is not equal to C(t), and C(a) is not C(s) and C(b) is not C(s). |  |

| Closed curve | "Head=Tail" -- that is, if the domain of C(t) is [a,b], then C(a)=C(b). |  |

| Simple closed curve | A closed curve which is also simple. Here C(s)=C(t) with s<=t implies either s=t or a=s and b=t. |  |

| Adding curves | Suppose C1(t) is a curve with domain [a,b] and C2(t) is a curve with domain [c,d]. If C1(b)=C2(c) (that is, C1's "Head"=C2's "Tail", perhaps) then the curve C(t) whose domain is [a,b+d-c] and defined by C(t)=C1(t) if t<=b and C(t)=C2(t-b+c) if t>=b (I hope!) is called the sum of C1 and C2. We will write C=C1+C2. |  |

| Reversing curves | If C(t) is a curve with domain [a,b], then the "reversal of C(t)", also called -C, will be the curve, let me temporarily call it D(t), whose domain will also be [a,b], defined by D(t)=C(b+a-t). -C is just C backwards. The picture is slightly weird (how should I have drawn it?) but I hope you get the idea. |  |

I hope the darn formulas for C1+C2 and -C(t) are correct. I don't think I will ever be so formal again in this course. I will usually be rather casual. The curves in this course will all be piecewise differentiable, and, actually, they will turn out to be sums of line segments and circular arcs: HAH! to general theory!

Actually I should acknowledge that what we are defining are called parameterized curves. For example, the curves C(t)=t and C(t)=$t^2$ (use [0,1] as domains in both cases) are really different, even though they "look" the same. It turns out that we will mostly be interested in line integrals and a remark below about the values of the integrals explains why we will not care about different parameterizations.

A few examples

|

This curve is a straight line segment starting at -4i and going to -2i, followed by a quarter circle whose center seems to be 0, starting at -2i and going to 2. If I wanted to be totally strict, I guess I could define a C(t) on the interval [0,2+(Pi/2)]. Let's see: for t in [0,2), let C(t) be (t-4)i, and for t in [2,2+(Pi/2)], define C(t) to be $2e^{i(t-2-(\pi/2))}$. Some remarks Almost always in this course I will parameterize circles and circular arcs using the complex exponential. My first guess will always be CENTER+RADIUS$e^{i(\text{stuff})}$. Another remark: what I've just done is excessively precise and maybe slightly silly. I want to write curves in a way that is convenient. So this curve should be written as a sum of two curves, say C1+C2. Here C1(t)=ti where t is in the interval [-4,-2], and C2(t)=0+$2e^{it}$ where t is in the interval [-Pi/2,0]. It is just easier to do this than to try to figure out the total length, how to combine and adjust parameterizations, etc. |  |

|

Here I will write the curve again as a sum,

C1+C2. C1 seems to be a semicircle of

radius 2 and center -1. It goes backwards. So

C1(t)=-1+$2e^{i(\pi-t)}$ where t is in the interval

[0,Pi]. C2 is a straight line segment. This I know how to

parameterize from thinking about

convexity. C2(t)=t(1)+(1-t)(-3i) where t is in the

interval [0,1].

A remark Parameterizing a circular arc in a clockwise fashion feels close to obscene to me. Almost always in this course our circles will be parameterized positively, which will mean counterclockwise. |  |

Line integrals on curves

If C(t) is a differentiable curve with domain [a,b] and u(z) is continuous in some open set which includes the image of the parameterized curve C(t), then $\int_C u(z)\,dz$ is $\int_{t=a}^{t=b} u(C(t))\,C'(t)\,dt$ (here C´(t)=x´(t)+iy´(t) and u(C(t)) is itself a complex number for each t).

Parameterized curves and line integrals

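Two different parameterizations give the same values.

Example Consider $u(z)=z^4$, and the two curves $C_1(t)=t$ and $C_2(t)=t^2$, with both domains equal to [0,1]. Then $\int_{C_1} z^4\,dz=\int_0^1 t^4\,dt=1/5$, and $\int_{C_2} z^4\,dz=\int_0^1 (t^2)^4\,2t\,dt=\int_0^1 2t^9\,dt=1/5$.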
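The two integrals in this example can also be compared numerically, straight from the definition of the line integral as the integral of u(C(t))C´(t) dt. A minimal sketch with a hypothetical helper `line_integral` that uses the midpoint rule; both values should be close to 1/5.

```python
def line_integral(u, C, dC, a=0.0, b=1.0, n=100000):
    """Midpoint-rule value of the integral of u(C(t)) * C'(t) dt for t in [a,b]."""
    h = (b - a) / n
    total = 0j
    for k in range(n):
        t = a + (k + 0.5) * h
        total += u(C(t)) * dC(t) * h
    return total

u = lambda z: z**4

# C1(t) = t with derivative 1, and C2(t) = t^2 with derivative 2t.
I1 = line_integral(u, lambda t: t, lambda t: 1.0)
I2 = line_integral(u, lambda t: t**2, lambda t: 2 * t)
print(I1, I2)
```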

The most important line integral

Suppose C(t) is the circle of radius R>0 and center 0. Suppose u(z)=1/z (continuous away from 0, of course). We compute $\int_C [1/z]\,dz$. The circle is {oriented/parameterized} positively: $C(t)=Re^{it}$ and t is in [0,2Pi]. Then $z=Re^{it}$ so $dz=Re^{it}i\,dt$. The limits on the integral are 0 and 2Pi. The integral itself becomes $\int_0^{2\pi} [1/\{Re^{it}\}]\,Re^{it}i\,dt$, which is $\int_0^{2\pi} i\,dt$ and has value 2Pi i.

The value of this integral and the fact that it is not zero are

related to the exercise with the Mad Count done at the beginning of

this lecture.

What should the exponential function be?

The textbook asserts that $\exp(z)=\exp(x+iy)=e^x\cos(y)+ie^x\sin(y)$. Standard notation which I will use is that the domain variable be called z=x+iy and the range be called w=u+iv. Here u(x,y) is $e^x\cos(y)$ and v(x,y) is $e^x\sin(y)$.

Why does the textbook assert that the complex exponential should be

this (initially, at least) weird combination of functions? There are

several ways to motivate the definition. Let me go over a few of

them.

Notice please that this function is made of sums and products of well-known functions which are certainly continuous, and therefore it is nice and continuous, also.

|

The geometry of the exponential function This is what I will concentrate on during this lecture. The complex exponential function has many pleasant and some irritating aspects. It is a bit difficult to draw a true graph of this function. The domain points are in C which is $R^2$, as are the range points. Therefore the graph, the pairs of points $(z,e^z)$, would be elements of $R^4$, and I don't know any neat ways of showing four-dimensional graphs. (Many attempts have been made. For example, we could sketch (x,y,u(x,y)) and change this standard graph of a surface in $R^3$ to the graph (x,y,v(x,y)) by some geometric process. Or one could "draw" the graph of u(x,y) and put colors on the graph according to the values of v(x,y). You can easily find these methods and others on the web.) I'll stick to more ordinary methods here. I'll have a complex plane representing the domain to the left, and then a range complex plane to the right. I'll try to sketch objects on the left in the domain and then sketch the corresponding objects on the right, objects which "correspond" under the exponential mapping. |

|

|

One horizontal line Consider the horizontal axis. We could imagine a bug moving on the axis in the domain at uniform velocity, from left to right. Here y=0, and x goes from -infinity to +infinity. The image in the w-plane is $e^x\cos(0)+ie^x\sin(0)$ which is just $e^x$. Notice that the image is a ray, a halfline, which covers the positive real axis of the w-plane. The image of uniform motion is no longer uniform motion. Near "-infinity" the image bug moves very slowly, and as the image goes from left to right along the positive real axis, the image bug moves ever faster, exponentially faster. |

|

|

One vertical line Now consider the vertical imaginary axis, where x=0, and a bug crawling at uniform velocity from -infinity (in y) to +infinity (in y). Now with x=0, the image is $e^0\cos(y)+ie^0\sin(y)$. So the image is the unit circle, described in the positive (counterclockwise) fashion, with the circle drawn at uniform velocity (1 radian per timestep). |

|

|

Other horizontal lines If we look for the image of a horizontal line which is just slightly above the x-axis, what will we get? Here we have $e^x\cos(y)+ie^x\sin(y)$ with y a small positive number. In fact, in terms of vectors, this is all positive ($e^x$) multiples of the unit vector [cos(y),sin(y)]. So the image is again a halfline, a ray, emanating (originating?) from 0 at angle y to the positive x-axis. You could imagine as the horizontal lines go up or go down, the images will rotate around the origin. |

|

|

Other vertical lines If we look for the image of a vertical line which is to the right of the y-axis, what will we get? Here we have $e^x\cos(y)+ie^x\sin(y)$ with x a positive number. In fact, this describes a circle whose center is the origin, and whose radius is $e^x$, always a positive real number. You could imagine as the vertical lines go left or right, the images will all be circles centered at the origin of varying radii. |

|

The algebra

We can try to "solve" $e^z=w$ and we will get some algebra which resembles the real case, and some startling changes.

If $e^x\cos(y)=u$ and $e^x\sin(y)=v$, we will get the same u+iv if we increase y by 2Pi.

The complex exponential is 2Pii periodic.

Notice that we will not be able to get w=0. If u=0 then either $e^x=0$, not possible from the real exponential, or cos(y)=0. But for w=0 we also need v=0, so sin(y) would have to be 0, and since the sum of their squares is always 1, they can't be 0 together!

The complex exponential is never 0.

Also, if w is any non-zero complex number, we can solve $e^z=w$. The modulus of $e^x\cos(y)+e^x\sin(y)i$ is $e^x$, and this must be |w|, so x=ln(|w|). Also v/u is sin(y)/cos(y) since the $e^x$'s cancel, so tan(y)=v/u (when u is not 0). A better way to think about y is that it can be any value of arg(w). Remember that the argument of 0 is not defined.

If w is not zero, then z=x+iy, where x=ln(|w|) and y=arg(w), is a solution of $w=e^z$, and we will write z=log(w). When that equation has one solution, it has an infinite number of solutions, separated by integer multiples of 2Pii.
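The recipe x=ln(|w|), y=arg(w) can be tried out directly. A minimal sketch using Python's standard `cmath` module; the function `log_values` is a hypothetical helper that lists a few of the infinitely many solutions, and the sample w=-1+i is an arbitrary choice.

```python
import cmath
import math

def log_values(w, ks=range(-2, 3)):
    """Some solutions z of exp(z) = w: ln|w| + i(arg(w) + 2*Pi*k)."""
    if w == 0:
        raise ValueError("log(0) is not defined: exp is never 0")
    x = math.log(abs(w))
    y = cmath.phase(w)          # one value of arg(w), in (-Pi, Pi]
    return [complex(x, y + 2 * math.pi * k) for k in ks]

w = -1 + 1j
for z in log_values(w):
    # Every listed z should be sent back to w by exp.
    print(z, cmath.exp(z))
```

Consecutive values in the list differ by exactly 2Pii, which is the periodicity noted above.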

I remarked that the image of a "tilted" line (not vertical or horizontal) turns out to be an equiangular spiral.

The exponential function takes any horizontal strip of "height" 2Pi and compresses the left infinity "edge" to a point, and spreads out the right edge so that the result covers all of C except for 0.

I then computed the image of a straight line under the exponential mapping. I got another parameterized curve. I established that the mapping rotated tangent vectors by a constant amount, the result of multiplying by exp(z). Thus the exponential mapping is "conformal". If this multiplication represents the complex derivative (which it does, as we will see later) then we have established that exp'=exp.

|

Here is the result of the Maple command plot3d(exp(x)*cos(y),x=-2..3,y=0..2*Pi,axes=normal); which will draw the real part of the complex exponential function. Possibly this drawing, a different kind from what I did above, may help you understand the exponential function. |

|

HOMEWORK

Comment The problem is "easy" but irritating. Maple

could help you with part of it. For example, the command

evalf(I^I); gets the response .2078795764 which might be an

approximation to part of one answer above. Note, though, that I'd like

exact answers in terms of traditional mathematical constants and

values of calc 1 functions, not approximations.

1.6: 1, 2, 4, 5

What is a continuous function?

Examples of continuous functions

An example of a function which is not continuous

Several intermediate value theorems, some of which are not

correct

What follows is not in the textbook in exactly this form. It does

appear later in the textbook.

What is a real differentiable function?

What is a complex-differentiable function?

A specific example of a function:

C(z)=(z-i)/(z+i)

Well, if |(z-i)/(z+i)|<1, this is the same as

All of these algebraic steps are reversible. The only one which might slow you down is the squaring step, but if we know A and B are non-negative, then A<B is logically equivalent to $A^2<B^2$. The algebra indeed says that C establishes a 1-1 correspondence between H and D. If we now compute (and assume all of the differentiation algorithms are still valid, which indeed is correct) then $C'(z)=2i/[(z+i)^2]$. So C´(z) is never 0. Indeed, the mapping C is conformal. It takes the upper halfplane to the unit disc in such a way that all angles between curves are preserved. This is not supposed to be obvious. In conformal geometry, where measurement is with angles, H and D are the same. Certainly, if we were concerned with distances between pairs of points (metric geometry) D and H are not the same. So this is somewhat remarkable.
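A quick numerical experiment makes the claim about C(z)=(z-i)/(z+i) more believable. This is only a sketch on randomly sampled points: the inverse formula z=i(1+w)/(1-w) is my own algebra (solve w(z+i)=z-i for z), and the names `cayley` and `cayley_inverse` are labels chosen for the illustration.

```python
import random

def cayley(z):
    """C(z) = (z - i)/(z + i)."""
    return (z - 1j) / (z + 1j)

def cayley_inverse(w):
    """Solve w = (z - i)/(z + i) for z (assumes w != 1)."""
    return 1j * (1 + w) / (1 - w)

random.seed(0)
# Sample points of H (Im z > 0) and their mirror images below the real axis.
upper = [complex(random.uniform(-50, 50), random.uniform(0.01, 50))
         for _ in range(1000)]
lower = [z.conjugate() for z in upper]

print(all(abs(cayley(z)) < 1 for z in upper))   # points of H land in D
print(all(abs(cayley(z)) > 1 for z in lower))   # points below the axis do not
```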

HOMEWORK

I reviewed convergence of an infinite sequence introduced last time and made a very poor pedagogical decision. I decided to state the Cauchy Criterion for convergence of a sequence. I tried to motivate this in several ways. First, the definition of a convergent sequence used the limit of the sequence as part of the definition. I wanted to show students that there could be an "internal" condition, only referring to the terms of a sequence itself, which would be equivalent to convergence. But I ignored my own experience, much of it obtained by teaching Math 311. This experience showed that developing and understanding the Cauchy criterion for convergence takes most people weeks of struggle (and some students seem hardly able to understand it even then!). So I talked "around" the Cauchy Criterion for a while, and then finally stated it:

Any sequence which converges satisfies the Cauchy Criterion, because

the terms, when they are "far out" all get close to the limit of the

sequence, and thus, by the Triangle Inequality, they get close to

each other. That the converse is also true is not obvious, as I

mentioned above, and several weeks of discussion in Math 311 are

devoted to this converse.

The reason for the digression which was the mention of the Cauchy

Criterion has to do with convergence of series. I defined the partial

sum of a series, and as usual defined the convergence of an infinite

series to be the limit of the sequence of partial sums, if such a

limit existed. Then I remarked that a (non-zero!) geometric series

would converge if the (constant) ratio between successive terms had

modulus less than 1. I could even then write a formula for the sum of

such a series.
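The formula for the sum is a/(1-r), where a is the first term and r is the constant ratio with |r|<1. A minimal Python sketch comparing partial sums with that value; the helper name and the sample ratio r=(1+i)/2 are arbitrary choices for illustration.

```python
def geometric_partial_sums(a, r, n_terms):
    """Partial sums a, a + ar, a + ar + ar^2, ... of a geometric series."""
    sums, term, total = [], a, 0j
    for _ in range(n_terms):
        total += term
        sums.append(total)
        term *= r
    return sums

a, r = 1 + 0j, 0.5 + 0.5j          # |r| = sqrt(2)/2 < 1
partial = geometric_partial_sums(a, r, 200)
limit = a / (1 - r)                 # the closed-form sum

print(partial[4], partial[49], partial[-1], limit)
```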

Most series do not have constant ratios between successive terms, and

are not geometric series. The simplest way to test them for

convergence is to use absolute convergence. A series converges absolutely if the series consisting of the moduli of the original series converges, and a series which converges absolutely must converge. This fact uses the Cauchy Criterion, and I should have followed the textbook, which cleverly sidesteps the Cauchy Criterion by mentioning that a complex series converges if and only if

the real and imaginary parts converge, and then uses the real form of

the comparison test to develop the result just quoted. This would have

been much better!

A function f is continuous if f takes convergent sequences to

convergent sequences.

We're almost at complex analysis. I'll get a version of the

Intermediate Value Theorem next time, and then start differentiating.

A general discussion of the latter two kinds of "numbers" is available

in the quite recent book, On

Quaternions and Octonions by Conway and Smith. Quaternions

definitely have applications in physics and geometry. A book covering

the whole subject of number systems, and whose early chapters are

quite understandable, is Numbers

by Ebbinghaus etc.

Why don't we have courses about the quaternions and the octonions? It

turns out that as the dimensions increase, some nice property is

lost. For example, going from the complex numbers to the quaternions:

commutativity is no longer necessarily true. And from the quaternions

to the octonions, in general, multiplication is no longer necessarily

associative. One natural question for students in a complex analysis

course might be, "What's lost going from R to C?"

Math 311 discusses the theory of the real numbers, and establishes

that R is a complete ordered field. Here order is used

in a technical sense: we can tell when one number is larger

than another. The ordering has the following desirable properties:

Pedagogical consequence If you write any statement which

directly connects "<" and complex numbers, I can stop reading or

paying attention. Your reasoning is incorrect.

So what one gives up going from R to C is ordering. But

this is a course which will do lots and lots of estimation. We will

need to discuss continuity and convergence, for example. Even if we

aren't totally formal and rigorous, we will need to estimate

sizes. The words BIG and small will be used constantly. How do we know what they

mean?

I remark that rigor is defined as

A measure of the size of a complex number z=a+bi will be its

modulus. We already know what happens to modulus when we

multiply:|z| |w|=|zw|. But what about addition? How can we

relate |z| and |w| and |z+w|? Well, since |z| is the absolute value of

z if z is real, we know that the sum |z|+|w| is rarely equal to |z+w|

(hey: z=3 and w=-3). But the most powerful "tool" dealing with modulus

is the Triangle Inequality.

But there is more that we can do. If I know that |z|=30 and |w|=5,

then ... well, if z describes my position in the plane relative to the

origin (a lamppost?), and I am walking my dog, whose position relative

to mine is w, then z+w might describe the position of the dog compared

to the lamppost. Certainly the dog can't get farther away than 35

units. But how close can it get? Well, consider the following:
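The leash picture suggests the "reverse" Triangle Inequality, | |z|-|w| | <= |z+w|, so the dog is never closer to the lamppost than 25 units. A minimal Python sketch, with a hypothetical helper `distance_range`, that fixes z=30 and swings w around a circle of radius 5 to see the smallest and largest values of |z+w|:

```python
import cmath
import math

def distance_range(mod_z, mod_w, samples=3600):
    """Smallest and largest sampled |z + w| with |z| = mod_z and |w| = mod_w."""
    z = complex(mod_z, 0)
    dists = [abs(z + mod_w * cmath.exp(2j * math.pi * k / samples))
             for k in range(samples)]
    return min(dists), max(dists)

closest, farthest = distance_range(30, 5)
# Compare with |z| - |w| and |z| + |w|.
print(closest, farthest)
```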

Mr. Wolf in class and

Mr. Bittner after class expressed some

doubts about how well these methods might work with some other

examples (Shouldn't we worry about division by 0? What happens if we

encounter a situation where the BIG part is not obvious, or maybe

there isn't a BIG part?). Their misgivings are indeed correct. My

random (random?) examples were actually chosen fairly carefully

so that such problems didn't occur.

We need to discuss the notion of interior point, interior of a set, an

open set, boundary point, and closed set. Also convex set, and

connected open set. This is all in 1.3.

The Euclidean plane can be thought of as the playground for two dimensional vectors (forces, velocities, etc.). It can also be regarded as a two-dimensional real vector space, $R^2$. Or we can think of it as C, the complex numbers. The vector i (1,0) could be 1 and the vector j (0,1) could be i. I ask only that $i^2=-1$.

If all of the routine rules of computation (distributivity,

commutativity, associativity, etc.) are satisfied, then for

example:

In fact, it is true that C, numbers written a+bi

with a and b real numbers, are a field when addition and

multiplication are defined appropriately. Verification of this is

tedious and maybe not really interesting.

I asked how the multiplicative inverse of a number like 3+2i would be

found. So we need a+bi so that (3+2i)x(a+bi)=1=1+0i. This means

that
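Spelled out: (3+2i)(a+bi)=(3a-2b)+(2a+3b)i, so we need 3a-2b=1 and 2a+3b=0, and the solution is a=3/13, b=-2/13. A minimal Python sketch with exact fractions; the function `inverse` is a hypothetical helper that uses the general pattern 1/(c+di)=(c-di)/(c^2+d^2).

```python
from fractions import Fraction

def inverse(c, d):
    """Return (a, b) with (c + d i)(a + b i) = 1, as exact fractions."""
    n = c * c + d * d
    if n == 0:
        raise ZeroDivisionError("0 has no multiplicative inverse")
    return Fraction(c, n), Fraction(-d, n)

a, b = inverse(3, 2)
# Check the two real equations coming from the real and imaginary parts.
print(a, b, 3 * a - 2 * b, 2 * a + 3 * b)
```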

Vocabulary

If z=a+bi, then a is the real part of z, Re(z), and b is the imaginary

part of z, Im(z). The number $\sqrt{a^2+b^2}$ is

called the modulus of z, and written |z|. The number a-bi is called

the complex conjugate of z, and written overbar(z) (z with a bar over

it).

I then discussed polar representation of complex numbers. We found

that z=|z|(cos(theta)+i sin(theta)). Here theta was an angle

defined only up to a multiple of 2Pi ("mod 2Pi"). Thus 1+i is

sqrt(2)[cos(Pi/4)+i sin(Pi/4)], for example.

I then computed the product of two complex numbers written in their

polar representation. I found that:

I wrote De Moivre's Theorem, which states that if z=r[cos(theta)+i sin(theta)] then $z^n$ must be $r^n$[cos(n theta)+i sin(n theta)].

As a consequence I attempted to "solve" $z^7=1$ (the solutions are the vertices of a regular heptagon [seven-sided] inscribed in the unit circle centered at 0 with one vertex at 1). We discussed how many solutions such an equation could have (at most 7). Then I tried to "solve" (describe or sketch the solutions of) $z^3=1+i$ (an equilateral triangle of numbers).
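De Moivre's Theorem turns root-finding into arithmetic on the modulus and the argument: take the real n-th root of the modulus, and divide each value of the argument by n. A minimal Python sketch; `nth_roots` is a hypothetical helper built on the standard `cmath` module.

```python
import cmath
import math

def nth_roots(w, n):
    """All n solutions z of z**n = w, for w != 0."""
    r = abs(w) ** (1.0 / n)            # real n-th root of the modulus
    theta = cmath.phase(w)             # one value of arg(w)
    return [r * cmath.exp(1j * (theta + 2 * math.pi * k) / n)
            for k in range(n)]

sevenths = nth_roots(1, 7)             # vertices of the regular heptagon
cubes = nth_roots(1 + 1j, 3)           # the equilateral triangle

for z in cubes:
    print(z, z**3)
```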

HOMEWORK

Maintained by

greenfie@math.rutgers.edu and last modified 1/18/2005.

Please read carefully Chapter 1 of the text.

Please hand in these

problems on Thursday, February 10:

1.5: 4, 8, 9, 19

Also please answer the following question:

One definition of $a^b$ is exp(b log a) (in this course we will use the complex analysis meanings of exp and log!). Use this definition to find all possible values of $i^i$, $2^2$, $i^2$, and $2^i$.

Tuesday, February 1

(Lecture #5)

Suppose f:D-->C. We'll call f continuous if for every sequence $\{z_n\}$ which converges to w (with all the $z_n$'s and w in D) the sequence $\{f(z_n)\}$ converges to f(w).

Polynomials are continuous. Rational functions, in their implied domains (where the functions "make sense"), are continuous.

Here I looked at Q(z)=z/conjugate(z). This function is bounded. The

modulus of z and the conjugate of z are the same, so |Q(z)|=1

always. What happens as z-->0? In class I tried to look at

the real and imaginary parts of Q(z). I did this in the following

way:

$\frac{x+iy}{x-iy} = \frac{(x+iy)(x+iy)}{(x-iy)(x+iy)} = \frac{x^2-y^2}{x^2+y^2} + i\,\frac{2xy}{x^2+y^2}$

The real and imaginary parts are commonly used examples in calc 3. On the real and imaginary axes away from the origin these functions have different values. For example, the real part is $[x^2-y^2]/[x^2+y^2]$. When y=0 this is 1 and when x=0 this is -1. So there is no unique limiting value as (x,y)-->(0,0).

In complex notation the modulus of Q(z)=z/conjugate(z) is 1, and the

argument is twice the argument of z. In fact,

Q(z)=cos(2arg(z))+i sin(2arg(z)).
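The behavior of Q near 0 is easy to see numerically. A minimal Python sketch (the sample points are arbitrary): approaching 0 along the real axis gives 1, approaching along the imaginary axis gives -1, and in general Q(z) agrees with cos(2arg(z))+i sin(2arg(z)).

```python
import cmath

def Q(z):
    """Q(z) = z / conjugate(z), defined for z != 0."""
    return z / z.conjugate()

along_real = [Q(complex(t, 0)) for t in (1e-1, 1e-3, 1e-6)]
along_imag = [Q(complex(0, t)) for t in (1e-1, 1e-3, 1e-6)]
print(along_real)   # values approaching 0 along the real axis
print(along_imag)   # values approaching 0 along the imaginary axis

z = 1 + 2j
# Compare Q(z) with the unit complex number of argument 2*arg(z).
print(Q(z), cmath.exp(2j * cmath.phase(z)))
```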

So I will try to duplicate the wonderful dynamic (?) atmosphere of the

classroom (clashroom?) as the instructor challenged the students to

find a valid intermediate value theorem (IVT).

False Take D to be the union of two disjoint open discs, say

D is A, a disc of radius 1 centered at 3i, union with B, a disc of

radius 1 centered at 7. Then define f to be -7 on A and 38 on B. f is

continuous, because convergent sequences in D must ultimately end up

either in A or in B. But f is never 0. This version of the IVT is

false because the domain is "defective".

False Here the catch is that I made f complex-valued and

the complex numbers are sort of two-dimensional and the image of f

could go "around" 0. Here's an example which is a bit nicer than the

one I offered in class. Suppose D is the open right halfplane, those

z's for which Re(z)>0. Take f(z) to be defined by

$f(z)=z^3$. Certainly the only complex number for which $z^3=0$ is z=0. But let's see: f(1)=1, so f has a positive value on this D. And $f(1+\sqrt{3}\,i)=(1+\sqrt{3}\,i)^3$. I chose this z (1+sqrt(3)i) exactly because its argument is Pi/3. Now when I cube the number, the argument becomes 3·Pi/3=Pi so that the result is negative. The modulus of this z is 2 and its cube is 8 so

the exact result is -8. So here the function has both positive and

negative values, and is never 0.

True We will get much finer root-finding results in this

course, but this one does work. How could we prove this? Well, create

a polygonal path from p to q inside D. For example, we could start by

thinking of a line segment inside D connecting p and q. On this line

segment, f is a real-valued continuous function defined on the

interval parameterizing the line segment, and its values are positive at one end and negative at the other end. By the standard Calc 1 IVT, f

must have a root somewhere in the segment. What if the polygonal line

segment has two pieces? Well, let's look at the middle vertex. So f

could be 0 there, in which case certainly a root exists. If f is

either positive or negative, we will have a line segment where the

endpoints have different signs. So we can use the previous

argument. In general, I guess we could use an induction argument based

on the number of line segments in the connecting polygonal path.

This is a function f so that the limit as h-->0 of [f(x+h)-f(x)]/h

exists. Untwisting the definition a bit, what this means is

that f(x+h)=f(x)+f´(x)h+Err(?)h, where Err(?)-->0 as h-->0

and Err(?) is a possibly complicated function depending on f and

x and h. The multiplication of Err by h guarantees that the Err(?)h

is a "higher-order" term as h-->0. The local picture depends mostly

on f(x) (the old value) and f´(x)h, a linear approximation. For

example, if f´(x)=3, then locally f just stretches things by a

factor of 3 near x.

Well, I change x to z. And more or less everything else stays the

same. All I have used in the previous analysis was the ability to

add/subtract/multiply/divide. And to learn what big/small were (done

with | |, which is absolute value for R and modulus for C). But

now I can try to think about the following situation:

What if f is complex-differentiable, and I know that

f(7+2i)=3-i and f´(7+2i)=1+i? Then

locally, near 7+2i, f multiplies little perturbations h by the

complex number 1+i. This means that the little perturbation h, a

small complex number (or, equivalently, a small two-dimensional vector

h) is changed to (1+i)·h. This takes the vector and stretches

it by a factor of sqrt(2) (just |1+i|) and rotates it by Pi/4, a

value of arg(1+i). Is this o.k.? This is a rather strict geometric

transformation. In fact, if we took a pair of h's, say h_1

and h_2, they would both be magnified by sqrt(2) in length

by f, and both be rotated by Pi/4. Hey: the angle between the h's in

the domain and the angle between their images in the range must be the

same! Angles are preserved. Such a mapping is called

conformal. Indeed, I will establish this formally later in the

course, but if f is complex-differentiable, and if the derivative is

not 0, then f is conformal: angles are preserved.
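
A small numerical illustration of the linear part of this picture, as a sketch in Python: multiplication by 1+i scales by sqrt(2), rotates by Pi/4, and leaves the angle between two perturbations unchanged. The perturbations h1 and h2 are arbitrary choices, and the helper angle_between is introduced here only for the check.

```python
import cmath
import math

derivative = 1 + 1j          # the value f'(7+2i) from the example

def angle_between(u: complex, v: complex) -> float:
    """Signed angle from u to v, in radians."""
    return cmath.phase(v / u)

h1 = 0.01 + 0.00j            # two small perturbations (arbitrary choices)
h2 = 0.003 + 0.004j

image1 = derivative * h1     # what the linear approximation does to h1
image2 = derivative * h2

print(abs(image1) / abs(h1), abs(image2) / abs(h2))   # both near sqrt(2)
print(cmath.phase(image1 / h1) / math.pi)             # rotation as a multiple of Pi
print(angle_between(h1, h2), angle_between(image1, image2))
```

This only tests the map h -> (1+i)h. The statement about f itself also needs the error term to be small, which is the part to be established later.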

This is a remarkable function and I will try to not explain where it

comes from. The domain I want to fix for C is H, the upper halfplane,

those z's with Im(z)>0. One remarkable fact about this remarkable

function is that if D is the unit disc (center 0, radius 1) then C

maps H 1-1 and onto D. This is not obvious!

|z-i|<|z+i| (the bottom is not 0 for z in H).

We can square: |z-i|^2<|z+i|^2.

If z=x+iy, then z-i=x+i(y-1) and z+i=x+i(y+1), so the inequality

with the squares is:

x^2+(y-1)^2<x^2+(y+1)^2.

We can "expand" and get: x^2+y^2-2y+1<x^2+y^2+2y+1.

Simplify by cancelling to get: -2y<2y.

This is equivalent to 0<y.
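
The chain of inequalities can be spot-checked numerically. The sketch below assumes that C is the map C(z)=(z-i)/(z+i), which is what the comparison of |z-i| with |z+i| suggests; the formula for C is an assumption here. The sample points are random and prove nothing, they only illustrate the claim.

```python
import random

def cayley(z: complex) -> complex:
    """Assumed form of the map C: (z - i)/(z + i)."""
    return (z - 1j) / (z + 1j)

random.seed(0)  # fixed seed so the run is repeatable

# Random points with Im(z) > 0 (in H) and with Im(z) < 0 (below the real axis).
upper = [complex(random.uniform(-50, 50), random.uniform(0.001, 50)) for _ in range(1000)]
lower = [z.conjugate() for z in upper]

upper_moduli = [abs(cayley(z)) for z in upper]
lower_moduli = [abs(cayley(z)) for z in lower]

print("largest |C(z)| for z in H:", max(upper_moduli))
print("smallest |C(z)| for Im(z)<0:", min(lower_moduli))
print("C(i) =", cayley(1j))
```

Every sampled point of H should land strictly inside the unit disc, and every sampled point with Im(z)<0 strictly outside, matching "0<y".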

Please hand in these

problems on Thursday:

1.4: 1, 2, 15, 19, 36. This is a small assignment. In the future

I hope to give assignments on Thursday to be handed in a week

later.

Reminder Starting on Thursday, February 3, the class will meet

in Hill 423.

A sequence {z_n} satisfies the Cauchy Criterion if and only

if for every epsilon>0, there is a positive integer N (usually

depending on epsilon) so that for n and m >=N,

|z_n-z_m|<epsilon.

Tuesday, January 25

(Lecture #3)

Even more vocabulary, with an introduction to

The Zoo of Sets!

EXAMPLE Look at the closed unit disc and take out the

origin. This set is not open because (as before) 1 is not an interior

point. This set is not closed because its complement consists of these

points: 0 and all z's with |z|>1. 0 is not an interior point of the

complement.

i) Submerged or overwhelmed (who needs all this vocabulary?)

ii) Resentful (why the heck are there all these special words?).

A beginning of possible answers

I will be more precise shortly about the terms we'll use most

often. The vocabulary began to arrive about 1880 and was in place,

mostly, by the 1920's. Now everyone in the whole world who studies

this subject and many others uses these words. In fact, just studying

the implications of what we are introducing here is the introduction to a

whole subject, topology. Also, it turns out that I am being

quite precise (quantifiers, etc.) in what I am writing because the

precision is needed: statements are wrong, examples are irritating,

etc., without some real precision. This will become clearer as we go

forward in the course. The precision will pay off with some amazing

consequences.

Of course my first example was the sequence

z_n=i^n, the sequence of positive powers of i.

This isn't the simplest example, and does not show great judgement. In

fact, I should certainly have mentioned that all real sequences

are examples of complex sequences. The sequence {i^n} does

not converge (I didn't handle this rigorously, but asked what it might

converge to and tried to discuss the consequences), and, in fact, the

sequence {q^n} (for fixed q with |q|=1) converges only when

q=1: if q^n-->w then surely q^(n+1)-->qw and

q^(n+1)-->w (because you can multiply convergent sequences and

because you can sort of move sequences along). Therefore qw=w, so

(q-1)w=0 and either q=1 or w=0. Now |q^n|=|q|^n=1,

so we would expect the modulus of w to also be 1. But then w is

not 0, so q=1. Whew! I built in some cute observations in that

sequence of sentences, so you may want to look at them and discuss

with me things you don't understand.
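
A numerical look at these sequences, as a sketch in Python. It takes a slightly different route from the argument above: when |q|=1, consecutive terms satisfy |q^(n+1)-q^n|=|q^n||q-1|=|q-1|, so for q not equal to 1 the terms never get close to each other and the Cauchy Criterion fails. The sample value of q is an arbitrary choice.

```python
import cmath

def powers(q: complex, count: int) -> list[complex]:
    """Return [q^1, q^2, ..., q^count] by repeated multiplication."""
    terms, current = [], 1 + 0j
    for _ in range(count):
        current *= q
        terms.append(current)
    return terms

i_powers = powers(1j, 8)
print(i_powers)                      # cycles through i, -1, -i, 1

q = cmath.exp(0.3j)                  # an arbitrary point on the unit circle, q != 1
terms = powers(q, 200)
gaps = [abs(b - a) for a, b in zip(terms, terms[1:])]
print(min(gaps), max(gaps), abs(q - 1))   # the gaps all stay near |q-1|
```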

THIS IS NOT COMPLEX

ANALYSIS SO YOU CAN DISREGARD

IT!

Real

division algebras

This is a slight diversion, which I hope will give students some

perspective. A division algebra is a real vector space which has a

distributive multiplication with a multiplicative identity and

multiplicative inverses. People searched for examples of these

starting soon after the complex numbers became well-known. It turns

out (and good proofs of this were only completed in the

mid-20th century!) that such objects exist in R^n

only for n=1 and 2 and 4 and 8. These examples are

wonderful: the real numbers, the complex numbers (the subject of this

course) the quaternions (a 4-dimensional division algebra), and the

octonions or Cayley numbers (in R^8).

All of the familiar rules for manipulating inequalities can be derived

from these properties. In particular, Math 311 verifies that if x is

not zero, then x^2 is always positive: that is,

squares are always positive. Therefore, 1=1^2 is positive.

Hypothetically, suppose we could "order" C. Then look at

i. It isn't 0, so its square must be positive. But that square is -1,

which we already know is not positive since 1 is positive. Therefore

C is not an ordered field, and there is no way, no ordering

obeying the rules above.

while I would like it to mean, here,

the quality of being logically valid

Pathology versus mathematics!

|z+w|^2=... (p.12 of the text) ...<=(|z|+|w|)^2.

(I got from one side to the other by "straightforward" (!)

manipulation which I frequently get confused about.)

So if I tell you that |z|=30 and |w|=5, then |z+w| will be at most 35.

Trick question How big can |z-w| be?

In fact, |z-w|<=|z|+|-w|, and all we can actually conclude is that

|z-w| is bounded by 35 also! I did not include any information about

the "direction" of the complex numbers! (Notice that

|-w|=|-1| |w|=1|w|=|w|.)

|z|=|z+w-w|<=|z+w|+|-w|=|z+w|+|w| so that |z|-|w|<=|z+w|.

We

could call this the Reverse Triangle Inequality. It isn't

really a reverse of the original, but it can sort of act like that. It

will allow us to make some underestimates. It says:

|BIG|-|small|<=|BIG+small|

but we might need to make judicious choices of BIG and small. For

example, if we switched the roles of BIG and small in the example

above, we would get -25<=|z+w|. While this is certainly true

the inequality actually does not have much value as information: the

modulus of every complex number is certainly bigger than -25.
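
The two inequalities can be tried out on the running example |z|=30, |w|=5. The sketch below (Python, with randomly chosen directions, so it illustrates rather than proves) checks that both |z+w| and |z-w| stay between 30-5=25 and 30+5=35. The helper random_point_on_circle is introduced here only for the experiment.

```python
import cmath
import math
import random

random.seed(1)  # fixed seed so the run is repeatable

def random_point_on_circle(radius: float) -> complex:
    """A complex number of the given modulus with a random direction."""
    return cmath.rect(radius, random.uniform(0.0, 2.0 * math.pi))

pairs = [(random_point_on_circle(30), random_point_on_circle(5)) for _ in range(10_000)]
sums = [abs(z + w) for z, w in pairs]
differences = [abs(z - w) for z, w in pairs]

print("range of |z+w|:", min(sums), max(sums))
print("range of |z-w|:", min(differences), max(differences))
```

The observed ranges should come close to filling the whole interval from 25 to 35, which shows that neither estimate can be improved without information about directions.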

How to do it

Certainly I haven't defined "useful" in this context, and we won't see

the real uses of the estimations for a few weeks. But let me show the

techniques that are usually tried first. They almost always involve

the Triangle Inequality and the Reverse Triangle Inequality. So:

|z^5+z+7|<=|z^5|+|z|+|7|=|z|^5+|z|+7=1+1+7=9.

So we have an over-estimate.

How about the other side? Here 7 seems to be a good candidate for

BIG. Therefore

|7|-|z^5+z|<=|z^5+z+7|. Now

the largest that |z^5+z| can be is 2 (again using the

Triangle Inequality). Therefore the smallest that

|7|-|z^5+z| can be is 7-2=5.

The simple estimates are: if |z|=1, then 5<=|z^5+z+7|<=9.

How to do it

I changed the modulus of z. The over-estimate part works much the same:

|z^5+z+7|<=|z^5|+|z|+|7|=|z|^5+|z|+7=32+2+7=41.

But for the under-estimate, the BIG term now seems to be

z^5. Therefore

|z^5|-|z+7|<=|z^5+z+7|. Here

|z^5|=|z|^5=32, and the largest that |z+7| can be

when |z|=2 is 9. Therefore 32-9<=|z^5+z+7|.

The simple estimates are: if |z|=2, then

23<=|z^5+z+7|<=41.
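
Both sets of estimates can be compared with sampled values of |z^5+z+7| on the circles |z|=1 and |z|=2. This is a sketch in Python: the function names are made up for the illustration, and sampling finitely many points only suggests how sharp the estimates are. The under-estimate takes BIG to be the term of largest modulus, as in the text.

```python
import cmath
import math

def p(z: complex) -> complex:
    """The polynomial being estimated: z^5 + z + 7."""
    return z ** 5 + z + 7

def triangle_bounds(r: float) -> tuple[float, float]:
    """Under- and over-estimates of |p(z)| on |z| = r from the two inequalities."""
    terms = [r ** 5, r, 7.0]      # moduli of z^5, z and 7 when |z| = r
    upper = sum(terms)            # Triangle Inequality
    big = max(terms)              # the BIG term
    lower = big - (upper - big)   # Reverse Triangle Inequality: |BIG| - |rest|
    return lower, upper

def sampled_range(r: float, samples: int = 3600) -> tuple[float, float]:
    """Smallest and largest sampled value of |p(z)| on the circle |z| = r."""
    values = [abs(p(cmath.rect(r, 2 * math.pi * k / samples))) for k in range(samples)]
    return min(values), max(values)

for r in (1.0, 2.0):
    print(r, triangle_bounds(r), sampled_range(r))
```

If the lower value returned by triangle_bounds is negative or 0, the under-estimate carries no information, which is the situation described earlier for a poor choice of BIG.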

How to do it

I found over- and under-estimates for the Top and the Bottom

separately.

Top: |7z-1|<=7|z|+|-1|<=7(3)+1=22. Also, |7z|-|-1|<=|7z-1|,

so that 20<=|7z-1|.

Bottom:

|2z^2-7|<=2|z^2|+|-7|=2|z|^2+7=18+7=25.

And |2z^2|-|-7|<=|2z^2-7| so that

18-7<=|2z^2-7|.

Therefore we know the following:

20<=Top<=22

11<=Bottom<=25

What can we conclude about Quotient=Top/Bottom? To over-estimate a

quotient, we must over-estimate the top and under-estimate the

bottom. The result is that Quotient<=22/11. For an under-estimate of

a quotient, we need to under-estimate the top and over-estimate the

bottom. So 20/25<=Quotient.
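
A numerical spot-check of the quotient estimates, as a sketch in Python. It assumes, reading off from the Top and Bottom computations, that the quotient is (7z-1)/(2z^2-7) and that |z|=3.

```python
import cmath
import math

def quotient(z: complex) -> complex:
    """Assumed quotient from the Top and Bottom estimates: (7z - 1)/(2z^2 - 7)."""
    return (7 * z - 1) / (2 * z ** 2 - 7)

samples = 3600
values = [abs(quotient(cmath.rect(3.0, 2 * math.pi * k / samples))) for k in range(samples)]

lower, upper = 20 / 25, 22 / 11   # the under- and over-estimates from the text
print("estimates:", lower, upper)
print("sampled range:", min(values), max(values))
```

The sampled values should all lie between 20/25 and 22/11. The two ends need not both be attained, since the top and bottom are estimated separately.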

Tuesday, January 18

(Lecture # 1)

(3+2i)x(5+7i)=3(5)+2(7)i^2+[2(5)+3(7)]i=1+31i.
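
The product can be checked by expanding with i^2=-1 and comparing against Python's built-in complex arithmetic. This is a sketch; the helper multiply is introduced here only for the check.

```python
def multiply(a: int, b: int, c: int, d: int) -> tuple[int, int]:
    """(a+bi)(c+di) = (ac - bd) + (ad + bc)i, using i^2 = -1."""
    return a * c - b * d, a * d + b * c

real_part, imaginary_part = multiply(3, 2, 5, 7)
print(real_part, imaginary_part)     # real and imaginary parts of (3+2i)(5+7i)
print((3 + 2j) * (5 + 7j))           # the same product with built-in complex numbers
```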

3a-2b=1

2a+3b=0

which has a unique solution (Cramer's Rule) if and only if (exactly

when!) the determinant of the

coefficients is not zero. Here that determinant is

3^2+2^2. Generally, if we looked at a+bi, the

determinant would be a^2+b^2.

Or we could look at 1/(3+2i) and multiply ("top and bottom") by

3-2i. Things work out nicely.
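
Both routes to 1/(3+2i) can be carried out exactly with rational arithmetic. The sketch below (Python; the function names are made up for the illustration) solves the 2-by-2 system by Cramer's Rule and also multiplies "top and bottom" by the conjugate.

```python
from fractions import Fraction

def inverse_by_cramer(x: int, y: int) -> tuple[Fraction, Fraction]:
    """Solve (x+yi)(a+bi) = 1, that is, x*a - y*b = 1 and y*a + x*b = 0."""
    determinant = x * x + y * y          # determinant of [[x, -y], [y, x]]
    if determinant == 0:
        raise ZeroDivisionError("0 has no multiplicative inverse")
    a = Fraction(x, determinant)         # Cramer: det [[1, -y], [0, x]] / determinant
    b = Fraction(-y, determinant)        # Cramer: det [[x, 1], [y, 0]] / determinant
    return a, b

def inverse_by_conjugate(x: int, y: int) -> tuple[Fraction, Fraction]:
    """1/(x+yi) = (x-yi)/((x+yi)(x-yi)) = (x-yi)/(x^2+y^2)."""
    modulus_squared = x * x + y * y
    return Fraction(x, modulus_squared), Fraction(-y, modulus_squared)

a, b = inverse_by_cramer(3, 2)
print(a, b)                              # real and imaginary parts of 1/(3+2i)
print(inverse_by_conjugate(3, 2))
```

The determinant in the first method and the denominator in the second are the same number, x^2+y^2, which is why the two methods agree.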

But the second equation should be

understood "mod 2Pi". The sine and cosine functions only pay attention

to the values of their inputs up to a sum or difference of integer

multiples of 2Pi. To illustrate this, I looked at 1+i and tried to

understand (1+i)^7 and (1+i)^13.
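
The two powers can be worked out in polar form: raise the modulus to the n-th power, multiply the argument by n, and read the new argument "mod 2Pi". The sketch below does this in Python; the function power_by_polar is made up for the illustration.

```python
import cmath
import math

def power_by_polar(z: complex, n: int) -> tuple[complex, float]:
    """Return z^n computed in polar form, and n*arg(z) reduced mod 2Pi."""
    modulus, argument = cmath.polar(z)
    total_angle = n * argument
    reduced_angle = math.remainder(total_angle, 2 * math.pi)  # lies in [-Pi, Pi]
    return cmath.rect(modulus ** n, total_angle), reduced_angle

for n in (7, 13):
    value, angle = power_by_polar(1 + 1j, n)
    print(n, value, angle / math.pi)     # the angle is shown as a multiple of Pi
```

Since |1+i|=sqrt(2) and arg(1+i)=Pi/4, the arguments 7Pi/4 and 13Pi/4 reduce to -Pi/4 and -3Pi/4, so (1+i)^7 is close to 8-8i and (1+i)^13 is close to -64-64i, up to rounding.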