Besides addition and scalar multiplication, there is a third operation, a "multiplication": a product that makes sense for matrices of certain related shapes. We begin with the case in which one of the matrices is, in fact, a vector.

Def. Let A = [a1 a2 . . . an] be an m x n matrix (here each aj is a column vector of length m), and let v be a column vector of length n with entries v1, . . ., vn. The matrix-vector product Av is the column vector of length m given by Av = v1 a1 + · · · + vn an.

Examples

Note how we actually compute: the i'th entry of Av is ai1 v1 + · · · + ain vn, which is computed from the i'th row of A and the entries in v.
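As a small illustration, the two descriptions of Av (a combination of the columns of A, and entry-by-entry from the rows of A) can be compared on a made-up example. The function names `matvec_by_columns` and `matvec_by_rows`, and the matrix and vector used, are assumptions chosen for this sketch only; matrices are stored as lists of rows.

```python
def matvec_by_columns(A, v):
    """Compute Av as v1*a1 + ... + vn*an, where aj is the j'th column of A."""
    m, n = len(A), len(v)
    result = [0] * m
    for j in range(n):          # loop over the columns a1, ..., an
        for i in range(m):      # add vj * aj to the running sum
            result[i] += v[j] * A[i][j]
    return result


def matvec_by_rows(A, v):
    """Compute the i'th entry of Av as ai1*v1 + ... + ain*vn."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in A]


A = [[1, 2, 3],
     [4, 5, 6]]      # a 2 x 3 matrix (illustrative values)
v = [1, 0, -1]       # a vector of length 3

print(matvec_by_columns(A, v))  # [-2, -2]
print(matvec_by_rows(A, v))     # [-2, -2]
```

Both computations give the same vector of length 2, as the definition requires.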

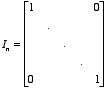

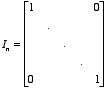

Note that multiplication of any n-vector v by the n x n matrix with entries equal to one on the main diagonal, and zero elsewhere, yields the original vector v again.

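Def. The n x n identity matrix In is the matrix with ones on the main diagonal and zeros elsewhere:

$$I_n = \begin{bmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{bmatrix}$$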

The multiplication of a vector by a square matrix can be thought of as a transformation of the given vector into another vector.

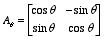

An important family of 2 x 2 matrices corresponds to the transformations consisting of rotations (counterclockwise) around the origin. If we think of the unit horizontal and vertical vectors

$$e_1 = \begin{bmatrix} 1 \\ 0 \end{bmatrix}, \quad e_2 = \begin{bmatrix} 0 \\ 1 \end{bmatrix},$$

then it is easy to see that after rotation through the angle θ, this pair of vectors must become the new pair

$$\begin{bmatrix} \cos\theta \\ \sin\theta \end{bmatrix}, \quad \begin{bmatrix} -\sin\theta \\ \cos\theta \end{bmatrix}.$$

Thus we define the rotation matrices:

Def. The 2 x 2 matrix Aθ, given by

$$A_\theta = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix},$$

is called the rotation matrix for the angle θ.

As is fairly simple to verify, if u is any vector in R2, then Aθ u is the vector obtained after rotation of u counterclockwise through the angle θ.
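The rotation claim can be checked numerically. The sketch below assumes the standard form of the rotation matrix, with columns (cos θ, sin θ) and (-sin θ, cos θ); the names `rotation_matrix` and `matvec` are hypothetical helpers, and the angle of 90 degrees is just a convenient test case.

```python
import math


def rotation_matrix(theta):
    """2 x 2 matrix for counterclockwise rotation through theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s],
            [s,  c]]


def matvec(A, v):
    """Matrix-vector product, computed row by row."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


A = rotation_matrix(math.pi / 2)   # rotate by 90 degrees
u = [1.0, 0.0]                     # the unit horizontal vector
rotated = matvec(A, u)

# Up to floating-point rounding, the horizontal unit vector becomes
# the vertical unit vector.
print(rotated)
```

Because of rounding in `math.cos` and `math.sin`, the first entry comes out as a tiny number rather than exactly zero, so comparisons should use a tolerance.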

Matrix-Vector Product Properties

Let A and B be m x n matrices, and let u, v be vectors in Rn. Then

(a) If ej is the j'th standard vector (the i'th entry of ej is 1 if i = j, and 0 otherwise), then Aej = aj is the j'th column vector of A.

(b) A(u + v) = Au + Av.

(c) A(cu) = c(Au) = (cA)u for all scalars c.

(d) (A + B)u = Au + Bu.

(e) If Bw = Aw for ALL w in Rn, then B = A.

(f) A0 = 0.

(g) Ov = 0.

(h) In v = v.

Note that all of these assertions are straightforward, except perhaps for (e). But (e) follows from (a): taking w = ej for each j shows that A and B have the same columns.
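Properties (a) and (b) are easy to spot-check on a particular matrix. The following is a minimal sketch; `matvec` and `standard_vector` are hypothetical helper names, and the matrix and vectors are made up for the check. A check on one example is of course not a proof.

```python
def matvec(A, v):
    """Matrix-vector product, computed row by row."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def standard_vector(n, j):
    """The j'th standard vector in R^n, with j counted from 1 as in the text."""
    return [1 if i == j else 0 for i in range(1, n + 1)]


A = [[1, 2, 3],
     [4, 5, 6]]                                      # illustrative 2 x 3 matrix
columns = [[row[j] for row in A] for j in range(3)]  # a1, a2, a3

# Property (a): A ej is the j'th column of A.
for j in range(1, 4):
    assert matvec(A, standard_vector(3, j)) == columns[j - 1]

# Property (b): A(u + v) = Au + Av.
u, v = [1, 2, 3], [0, -1, 4]
u_plus_v = [a + b for a, b in zip(u, v)]
lhs = matvec(A, u_plus_v)
rhs = [a + b for a, b in zip(matvec(A, u), matvec(A, v))]
assert lhs == rhs
print("properties (a) and (b) hold for this example")
```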

We treat x1, . . ., xn as (real) variables and a1, . . ., an as scalars (real constants), and can then consider the linear equation a1 x1 + · · · + an xn = b, where the right-hand side b is a (real, constant) scalar. If we consider m such equations simultaneously, we have one of the fundamental problems of linear algebra: finding solutions to such systems.

Def. A system of linear equations is a set of equations of the form

$$\begin{aligned} a_{11}x_1 + \cdots + a_{1n}x_n &= b_1, \\ &\ \,\vdots \\ a_{m1}x_1 + \cdots + a_{mn}x_n &= b_m. \end{aligned}$$

Examples; including the general example of 2 equations in 2 unknowns.

How do we solve such systems systematically? We replace the system by an equivalent one which is easier to solve. Such replacements correspond to the addition of two rows (equations) and the multiplication of a row by a nonzero scalar (and combinations of these operations), with the new row replacing one of the corresponding old ones, as well as the interchange of rows for bookkeeping purposes. The resulting systems are equivalent because the corresponding subtraction, division, and interchange would reverse the process.

Example

Now, we introduce a notational shorthand: Why carry around the variables x1, . . ., xn when we do not need to? Let A denote the matrix with entries aij as in the system above, let b denote the column vector with entries b1, . . ., bm, and let x denote the column vector with (variable) entries x1, . . ., xn. The system of linear equations can then be written as Ax = b. If we think of the operations that change this into an equivalent but simpler system, we note that the variable x appears in the same fashion all the time, so that it is really the matrix A and the vector b which we are changing. We form the augmented matrix of the linear system, [A b] (which we will sometimes write as [A | b] to emphasize that the last column has a different role than the others). The operations used to solve the linear systems can now be treated as operations on the augmented matrix, and we do not have to carry the variables along in the notation.
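To make the shorthand concrete, here is a minimal sketch of forming the augmented matrix [A | b], with matrices stored as lists of rows. The helper name `augment` and the system x1 + 2 x2 = 5, 3 x1 + 4 x2 = 6 are made up for illustration.

```python
def augment(A, b):
    """Return the augmented matrix [A | b]: each row of A with its bi appended."""
    if len(A) != len(b):
        raise ValueError("A and b must have the same number of rows")
    return [row + [b_i] for row, b_i in zip(A, b)]


# Illustrative system:
#    x1 + 2 x2 = 5
#  3 x1 + 4 x2 = 6
A = [[1, 2],
     [3, 4]]
b = [5, 6]

print(augment(A, b))  # [[1, 2, 5], [3, 4, 6]]
```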

Def. The following operations on matrices are called elementary row operations:

1. Interchange two rows;

2. Multiply a row (that is, all of its entries) by a nonzero scalar;

3. Add two rows, replacing one of them with the sum.
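The three operations can be sketched as small functions on a matrix stored as a list of rows (rows indexed from 0, as is usual in Python). The names `swap_rows`, `scale_row`, and `add_row` are hypothetical, and exact fractions are used so that no rounding occurs. The usage example shows how the familiar step "subtract 3 times row 1 from row 2" arises as a combination of operations 2 and 3.

```python
from fractions import Fraction


def swap_rows(M, i, k):
    """Operation 1: interchange rows i and k (returns a new matrix)."""
    M = [row[:] for row in M]
    M[i], M[k] = M[k], M[i]
    return M


def scale_row(M, i, c):
    """Operation 2: multiply every entry of row i by the nonzero scalar c."""
    if c == 0:
        raise ValueError("the scalar must be nonzero")
    M = [row[:] for row in M]
    M[i] = [c * x for x in M[i]]
    return M


def add_row(M, source, target):
    """Operation 3: replace row `target` by (row `target` + row `source`)."""
    M = [row[:] for row in M]
    M[target] = [x + y for x, y in zip(M[target], M[source])]
    return M


M = [[1, 2, 5],
     [3, 4, 6]]                        # illustrative augmented matrix
M = scale_row(M, 0, -3)                # row 0 becomes [-3, -6, -15]
M = add_row(M, source=0, target=1)     # row 1 becomes [0, -2, -9]
M = scale_row(M, 0, Fraction(-1, 3))   # restore row 0 to [1, 2, 5]
print(M == [[1, 2, 5], [0, -2, -9]])   # True
```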

To replace a given system of equations with one that we can easily solve, we eliminate variables from the equations until we reach a point where the last variable is determined (or else free), and then back-substitute to find its predecessors.

Def. A matrix is in row echelon form if

(a) All zero rows (rows consisting of all zeros), if any, are below all other rows;

(b) For each row, the leading entry (the leftmost nonzero entry) is to the right of all higher rows' leading entries; and

(c) All entries in the column below any leading entry are zero.
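One way to test the definition on a given matrix is sketched below; `leading_index` and `is_row_echelon` are hypothetical names. The code checks conditions (a) and (b) only, on the observation that (c) then holds automatically: any row below a leading entry is either a zero row or has its own leading entry further to the right.

```python
def leading_index(row):
    """Column index of the leftmost nonzero entry, or None for a zero row."""
    for j, x in enumerate(row):
        if x != 0:
            return j
    return None


def is_row_echelon(M):
    """Check conditions (a) and (b) of the definition; (c) then follows."""
    previous_lead = -1
    seen_zero_row = False
    for row in M:
        lead = leading_index(row)
        if lead is None:
            seen_zero_row = True
            continue
        if seen_zero_row:            # a nonzero row below a zero row: (a) fails
            return False
        if lead <= previous_lead:    # not strictly to the right: (b) fails
            return False
        previous_lead = lead
    return True


print(is_row_echelon([[1, 2, 3], [0, 0, 4], [0, 0, 0]]))  # True
print(is_row_echelon([[0, 1], [1, 0]]))                   # False
```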

Examples

Note that the corresponding linear system can be easily solved if the augmented matrix is in row-echelon form. It is arithmetically simplest if, in fact, each leading entry is a 1, which can be arranged by scalar multiplication. Moreover, if instead of back-substituting in solving a linear system, we first eliminate all earlier appearances of a given variable that will be determined, we have the simplest equivalent system to the original one. These operations correspond to the following form of the augmented matrix:

Def. A matrix is in reduced row echelon form if, in addition to the conditions (a), (b), and (c) above, we have

(d) Each leading entry is a one; and

(e) All entries in a column above any leading entry are zero.
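The extra conditions (d) and (e) can be tested in the same spirit. This is a sketch with hypothetical names (`leading_index`, `is_reduced_row_echelon`); it checks (a), (b), (d), and (e) directly, with (c) following from (a) and (b).

```python
def leading_index(row):
    """Column index of the leftmost nonzero entry, or None for a zero row."""
    for j, x in enumerate(row):
        if x != 0:
            return j
    return None


def is_reduced_row_echelon(M):
    """Check the row echelon conditions together with (d) and (e)."""
    previous_lead = -1
    seen_zero_row = False
    for i, row in enumerate(M):
        lead = leading_index(row)
        if lead is None:
            seen_zero_row = True
            continue
        if seen_zero_row or lead <= previous_lead:   # (a) or (b) fails
            return False
        if row[lead] != 1:                           # (d) fails
            return False
        if any(M[k][lead] != 0 for k in range(i)):   # (e) fails
            return False
        previous_lead = lead
    return True


print(is_reduced_row_echelon([[1, 2, 0], [0, 0, 1]]))  # True
print(is_reduced_row_echelon([[1, 1], [0, 1]]))        # False: 1 above a leading entry
```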

Examples

Examples of solving systems, including "free variables" in the solution. (Free variables correspond to columns in row echelon form which do not have a leading entry, leaving aside the augmentation column on the right, which does not correspond to any variable.) The columns which do have leading entries (other than the augmentation column) correspond in the system of equations to variables which are determined by the values on the right-hand side; these are called the "basic variables."
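The classification of variables can be read off mechanically from an augmented matrix in row echelon form. In the sketch below, `classify_variables` is a hypothetical name, variables are numbered from 1 as in the text, and the input is assumed to already be in row echelon form with the augmentation column last.

```python
def classify_variables(augmented):
    """Return (basic, free) variable numbers for [A | b] in row echelon form."""
    n = len(augmented[0]) - 1            # number of variables
    basic = []
    for row in augmented:
        lead = next((j for j, x in enumerate(row) if x != 0), None)
        # A leading entry in the augmentation column names no variable.
        if lead is not None and lead < n:
            basic.append(lead + 1)       # convert to 1-based numbering
    free = [j for j in range(1, n + 1) if j not in basic]
    return basic, free


# Illustrative system in three variables:
#   x1 + 2 x2      = 3
#               x3 = 4
M = [[1, 2, 0, 3],
     [0, 0, 1, 4]]
print(classify_variables(M))  # ([1, 3], [2])
```

Here x2 is free, and x1 and x3 are determined once x2 is chosen: x1 = 3 - 2 x2 and x3 = 4.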

Algorithm:

To put a matrix into row echelon form,

1. Find a leftmost nonzero entry, and interchange, if necessary, to bring it up to the top row. For calculation purposes, it is easiest to multiply by a scalar, to make this leading entry be a one.

2. Use that row to obtain zeros in all entries in the column below the leading entry, via scalar multiplication and row addition.

3. Repeat the process, working on the submatrix obtained by ignoring the rows already completed, working down from top to bottom.

To put the matrix in reduced row echelon form, first finish the first process, being sure to multiply by scalars to make each leading entry one. Then, beginning with the lowest nonzero row:

4. Use that row to obtain zeros in all entries in the column above that row's leading entry.

5. Repeat the process, working on the submatrix obtained by ignoring the rows already completed, proceeding upward from bottom to top.
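The steps above can be sketched in code as follows. This is one possible rendering, not a definitive implementation: the names `row_echelon` and `reduced_row_echelon` are hypothetical, exact fractions are used to avoid rounding, and "subtract a multiple of one row from another" is used as shorthand for the combination of scalar multiplication and row addition.

```python
from fractions import Fraction


def row_echelon(M):
    """Steps 1-3: row echelon form, with every leading entry scaled to one."""
    A = [[Fraction(x) for x in row] for row in M]
    rows = len(A)
    cols = len(A[0]) if rows else 0
    top = 0                                   # first row not yet completed
    for col in range(cols):
        if top == rows:
            break
        # Step 1: find a nonzero entry in this column, at or below `top`.
        pivot = next((r for r in range(top, rows) if A[r][col] != 0), None)
        if pivot is None:
            continue                          # nothing to do in this column
        A[top], A[pivot] = A[pivot], A[top]   # interchange, if necessary
        lead = A[top][col]
        A[top] = [x / lead for x in A[top]]   # make the leading entry a one
        # Step 2: obtain zeros below the leading entry.
        for r in range(top + 1, rows):
            factor = A[r][col]
            A[r] = [x - factor * y for x, y in zip(A[r], A[top])]
        top += 1                              # Step 3: move to the submatrix
    return A


def reduced_row_echelon(M):
    """Steps 4-5: clear the entries above each leading one, bottom to top."""
    A = row_echelon(M)
    for i in range(len(A) - 1, -1, -1):
        lead = next((j for j, x in enumerate(A[i]) if x != 0), None)
        if lead is None:
            continue                          # zero row: nothing to clear
        for r in range(i):
            factor = A[r][lead]
            A[r] = [x - factor * y for x, y in zip(A[r], A[i])]
    return A


R = reduced_row_echelon([[1, 2, 5],
                         [3, 4, 6]])          # illustrative augmented matrix
print(R == [[1, 0, -4], [0, 1, Fraction(9, 2)]])  # True
```

For this made-up system the reduced form says x1 = -4 and x2 = 9/2, which can be checked by substituting into x1 + 2 x2 = 5 and 3 x1 + 4 x2 = 6.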

For equations Ax = b: form the augmented matrix [A | b] and reduce it to an equivalent matrix in row echelon form. Either back-substitute in the resulting equations to find the solution(s), or go to reduced row echelon form and then read off the corresponding solutions directly.
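Back-substitution can be sketched for the simplest case. The function below is a hypothetical helper, `back_substitute`, and it makes strong assumptions that the general procedure does not: the augmented matrix is already in row echelon form, the system has n equations in n variables, and every variable column has a leading entry (so there are no free variables and exactly one solution).

```python
from fractions import Fraction


def back_substitute(echelon_augmented):
    """Solve [A | b] in row echelon form, assuming a unique solution.

    Assumes row i has its leading entry in column i (no free variables).
    """
    n = len(echelon_augmented[0]) - 1          # number of variables
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):             # last variable first
        row = echelon_augmented[i]
        # Contribution of the variables that are already determined.
        known = sum(row[j] * x[j] for j in range(i + 1, n))
        x[i] = Fraction(row[n] - known) / row[i]
    return x


# Row echelon form of the illustrative system
#    x1 + 2 x2 = 5
#       - 2 x2 = -9
solution = back_substitute([[1, 2, 5],
                            [0, -2, -9]])
print(solution == [-4, Fraction(9, 2)])  # True
```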

Note that there is no solution when the augmented matrix has a row with leading entry in the far right column. (That corresponds to an equation of the form "0 = nonzero number".)

Theorem Every matrix can be transformed into exactly one equivalent matrix in reduced row echelon form, by means of a sequence of elementary row operations.